John Carmack on Virtual Reality - Uncut

By popular demand, a complete transcript of our E3 interview.

At E3 last month, I got the chance to interview John Carmack and try out his prototype virtual reality headset, using the device to play the forthcoming Doom 3: BFG Edition. It was, as I wrote at the time, a memorable experience:

"As a 3D viewer, it's striking. But the head-tracking is something else. It's no exaggeration to say that it transforms the experience of playing a first-person video game. It's beyond thrilling... Doom 3 is an eight-year-old shooter, but in this form playing it is as visceral and exciting as it must have been to play the first Doom the day it was released."

Since we published my original report - and some follow-up from Digital Foundry's Rich Leadbetter in his article What Went Wrong with Stereo 3D? - we've had a number of requests to run the 30-minute interview in its entirety; such is your appetite for every nerdy nugget that spills from the lips of this remarkable man. We aim to please, so here's a completely uncut and mostly unvarnished transcript.

Carmack talks fast and, as you'll see, has a tendency to follow his own tangents; it wasn't until eight minutes in that I realised I was just going to have to interrupt his stream of consciousness or he would quite happily fill our half-hour slot with a monologue. But this wasn't bluster. A glint in his eye betrayed a boyish enthusiasm for virtual reality and for games technology in general. He has an old-school hacker attitude, a hunger for problems to solve, and his passion for games programming is clearly undimmed after 23 years in the business. He was obviously delighted with his creation.

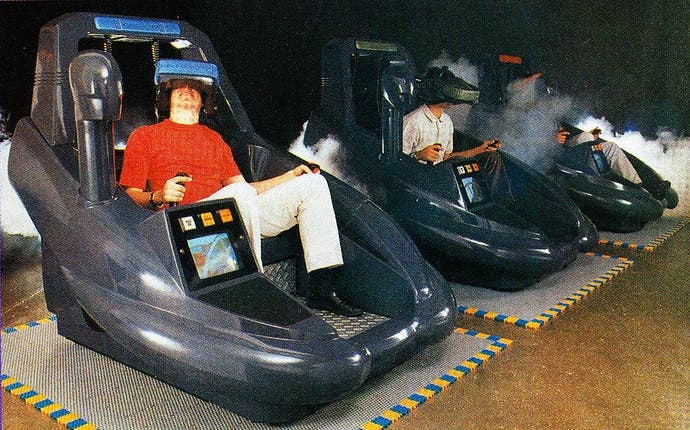

JC: This has turned out to be a really wonderful thing for me. After we shipped Rage, as a treat to myself, I went out to see what the state of the head-mounted display world was. We were involved in this back in the early '90s, the first heyday of VR - we had licensed Wolfenstein, Doom and Quake to a bunch of different companies. They were all loser bets. It was too early technology-wise, but also they weren't the right companies. You had people excited about it rather than the technical people that were necessary to make things work the way they needed to.

So I'd only kept a little bit of an eye on the VR world over the last 15 years. Every time I went to a trade show or something, I'd try on one and say, this still sucks, this is no good. Have you tried a head-mounted display anywhere?

EG: Not for a really, really long time.

JC: They haven't gotten much better, that's the frustrating thing. I tried some a year or two ago at the Game Developers Conference and thought, 'These are still no good.' Awful field of views, awful latency in tracking, all these problems out there.

But I thought, maybe, things have gotten better. Because I've run across a lot of people that have been around a long time, they think VR... they get this idea, well it's been 20 years, surely we can just go out and buy that head-mount that we always wanted back in the '90s. And the sad truth is that no, you can't, that they're really not that good, what you can buy for consumer availability now.

So I went out and I spent 1500 dollars on a head-mounted tracker - and it was awful. Just awful in a lot of ways. I wrote a little technology demo to drive it on there; it had long latencies and bad display characteristics, all sorts of stuff. I might have just chucked it out and said, oh well, I'll go back onto something else. But I decided to push through a little bit and started taking things apart, both literally and figuratively, and find out what is wrong with this - why is it as bad as it is? And is it something that we could actually do things about?

I started at the sensor side of things. There's lots of companies that make these little gyros and accelerometers, the same type of thing that's in your phones. Like Hillcrest Labs. Most of their business is for things like hands-free, open-space mice - presenters' mice, things like that where you can wave 'em around. But there's a little niche business in the VR space of making head-trackers.

But if you use it in the standard way of 'tell me what my orientation is', there's a lot of work that you have to do to filter and bias the sensors and drift it to a gravity vector. There's a lot of different ways of doing that, but their baseline way of doing it had about 100 milliseconds of latency. Huge extra lag on the sensors, there.

So what I did is I went down to the raw sensor input, and I actually took the orientation code I did at Armadillo Aerospace years ago for our rocket ships. We use expensive fibre-optic drivers on there, but the integration's the same stuff. So I pulled that raw code over and it got a lot better.
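
For readers who want to see what integrating raw gyro input looks like, here is a minimal sketch. It is not Carmack's Armadillo code, which the interview does not show; it is a generic quaternion integrator, and the names `quat_multiply` and `integrate_gyro` are made up for illustration. It assumes the sensor reports angular velocity in radians per second in the sensor's own frame.

```python
import math

def quat_multiply(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def integrate_gyro(orientation, angular_velocity, dt):
    """Advance an orientation quaternion by one gyro sample.

    angular_velocity is (wx, wy, wz) in rad/s (assumed body frame),
    dt is the time since the previous sample in seconds.
    """
    wx, wy, wz = angular_velocity
    speed = math.sqrt(wx * wx + wy * wy + wz * wz)
    angle = speed * dt
    if angle < 1e-12:
        return orientation  # no measurable rotation in this sample
    # Small rotation of `angle` radians about the axis of angular velocity.
    s = math.sin(angle / 2.0) / speed
    delta = (math.cos(angle / 2.0), wx * s, wy * s, wz * s)
    q = quat_multiply(orientation, delta)
    # Renormalise so rounding error does not accumulate.
    norm = math.sqrt(sum(c * c for c in q))
    return tuple(c / norm for c in q)

if __name__ == "__main__":
    q = (1.0, 0.0, 0.0, 0.0)
    for _ in range(250):  # one second of samples at 250Hz
        q = integrate_gyro(q, (0.0, 0.0, math.pi / 2.0), 0.004)
    print(q)
```

A gyro-only integrator like this drifts over time, which is why the vendor code he describes also pulls the result towards a gravity vector. That correction step is left out here.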

Then I contacted Hillcrest Labs directly and said, you know, it's kind of unfortunate that you have a 125Hz update, either being at 120 or much higher would be better for syncing with v-sync. And that was one of those 'it's good to be me' days where they knew who I was and they were kind of excited that somebody was paying this much picky attention to the details. Because most people, OK, you really don't care if your presenter mouse has a bit of lag or filtering on that - but I was down there saying 120 would be much better than 125 updates a second.

And they made some custom firmware for me. They said well, the way the USB stuff works, we can't do 120 but we can double it to 250Hz. They burned custom firmware and sent me some modules with the new firmware on it.

At that point, then, I had a four-millisecond update rate on there. Windows still adds eight, 12 milliseconds of queuing as you go across the USB and through the operating system and drivers before it finally gets to the user program. I was damn tempted to go write a Linux kernel mode driver to try and bypass all that, but I wasn't able to dedicate enough time to go do that.
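
The numbers in this stretch of the interview can be checked with simple arithmetic. The sketch below assumes a 60Hz display (the refresh rate he mentions later) and treats latency as a plain sum of the sensor's report interval and the operating system's queuing delay, which is a simplification. The function names are illustrative only.

```python
def update_interval_ms(rate_hz):
    """Time between consecutive sensor reports, in milliseconds."""
    return 1000.0 / rate_hz

def samples_per_frame(sensor_hz, display_hz=60.0):
    """Sensor reports per display refresh; a whole number syncs cleanly."""
    return sensor_hz / display_hz

def sensor_to_app_latency_ms(rate_hz, os_queue_ms):
    """Simplified worst case: wait for the next report, then OS queuing."""
    return update_interval_ms(rate_hz) + os_queue_ms

if __name__ == "__main__":
    for rate in (120, 125, 250):
        print(rate, "Hz:", update_interval_ms(rate), "ms per report,",
              round(samples_per_frame(rate), 3), "reports per 60Hz frame")
    print("250Hz plus 8-12 ms of queuing:",
          sensor_to_app_latency_ms(250, 8), "to",
          sensor_to_app_latency_ms(250, 12), "ms")
```

At 120Hz there are exactly two reports per 60Hz frame, while 125Hz gives a fractional count, which is the mismatch he was complaining about. The 250Hz firmware does not divide evenly either, but its four-millisecond interval makes the mismatch much smaller.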

So I cut all that down, and of course I know how to do efficient simulation rendering and keep the drivers from messing things up on there - all that stuff worked fine. But then I was left with... coming out of the computer you've still got a pretty poor video display device.

There's three things that are bad on displays in general. The most obvious one is the field of view. Cheap consumer head-mounts, they talk about them in terms of how big of a TV it is at some arbitrary distance - you know, it's a 120-inch TV that's 20 feet away from you. It's a really bogus way to measure something. If you go away and measure something on the wall there and find the distance where it matches, you find that they have about a 26 degree field of view, which is like looking at the world through toilet paper tubes. There's no way that that's the immersive virtual reality of our dreams.
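
The "TV at a distance" marketing figure can be turned into a field of view with basic trigonometry. This sketch assumes a flat 16:9 screen viewed head-on; the aspect ratio is an assumption, since the interview only gives the diagonal and the distance.

```python
import math

def angular_size_deg(extent, distance):
    """Angle subtended by a flat extent centred on the line of sight."""
    return math.degrees(2.0 * math.atan((extent / 2.0) / distance))

def screen_width(diagonal, aspect_w=16, aspect_h=9):
    """Width of a screen with the given diagonal and aspect ratio."""
    return diagonal * aspect_w / math.hypot(aspect_w, aspect_h)

if __name__ == "__main__":
    diagonal_in = 120.0       # "a 120-inch TV"
    distance_in = 20.0 * 12   # "20 feet away", converted to inches
    width_in = screen_width(diagonal_in)
    print("horizontal:", round(angular_size_deg(width_in, distance_in), 1))
    print("diagonal:  ", round(angular_size_deg(diagonal_in, distance_in), 1))
```

Under those assumptions the screen spans roughly 25 degrees horizontally and 28 degrees along the diagonal, which fits the "about 26 degree" figure quoted above.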

So field of view is the obvious thing in there. Then there's a couple of not obvious but still important things that still get messed up on displays. A very good LCD panel, like a Retina display on an iOS device, that has a switching time of about 4 milliseconds on there, and it varies based on what you're doing. It's actually faster to go white to black than it is to go grey to grey on there. But a lot of LCDs, a lot of the small ones that would wind up in head-mount displays, have more traditional times of 20 to 30 milliseconds. You used to see this on PC monitors, where there'd be kind of a smearing change of view if you spin around in a first-person shooter. That's still prevalent on a lot of small LCDs.

And then the other thing that happens, if it comes from sort of a consumer TV heritage, is - it's the bane of the gaming industry - all the consumer TVs buffer, add latency for features. They go, 'we want to be able to take different resolutions, we want to take different 3D formats, we want to do motion interpolation, we want to do deblocking and content protection'. And in theory all of these can be combined into one massive, streaming pipeline on there that doesn't add much latency. But realistically, the way they get it developed is they say, 'OK, you did HDCP, you did 3D format conversion, you did this,' and there's a buffer in between each one. Some TVs offer a gaming mode that cuts some of that down but even there it's still...

I actually had somebody dig out an old Sony Trinitron CRT monitor. I'm doing all these comparisons with a high-speed camera; I've got this bulky old 21-inch monitor, but I can run it at 170Hz. And when voltage comes out of the back of a computer, within a nanosecond it's driving the electron beam. Or a couple of nanoseconds - certainly far less than a microsecond. Photons are coming off the screen less than a microsecond after it says that! While many things, like this Sony head-mount display which came out in February... [Indicates a Sony HMZ-T1 he has lying on the table]

EG: I was going to ask you about that...

JC: So in many ways, this was a huge advance over what was before. The thing that I bought that so angered me last year was twice the cost of this, it had 640 by 480 displays on there, awful filtering on everything. This comes out, it has 720p OLEDs per eye. OLED really is the technology that's ideal for a head-mount, because it's almost as fast as a CRT. It'll switch in under a microsecond, where even the best LCDs are four milliseconds or something, and variable. So OLEDs are great.

And in fact, it's interesting, I was able to see some new artifacts that I wasn't able to see in the other devices. One of the things I was doing in my R&D test bed was, if you've only got a 60Hz refresh, you can't refresh the whole screen cleanly, but you can still render more times and just get tear lines. I was at the point where I can render 1000 frames per second on a high-end PC on what I was doing, which meant that you would have like 15 bands on the screen. And they're only slightly separated, because a normal tear-line in a game might be one tear line and you've got this big shear, and that looks horrible. I campaign against tear lines a lot.

But when I was working at 1000 frames per second, it almost looked seamless - it's more like a rolling shutter with an LCD, but with the OLEDs they're so fast and crisp, it's back to seeing 15 lines.
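
The band count follows from dividing the render rate by the refresh rate. The sketch below is a rough estimate that ignores the vertical blanking interval, and the 720-line panel height is taken from the HMZ-T1's 720p displays mentioned earlier.

```python
def tear_bands(render_fps, refresh_hz):
    """Rendered frames that land within one display refresh."""
    return render_fps / refresh_hz

def band_height_lines(panel_lines, render_fps, refresh_hz):
    """Approximate scanlines covered by each band, ignoring blanking."""
    return panel_lines / tear_bands(render_fps, refresh_hz)

if __name__ == "__main__":
    print("bands per refresh:", round(tear_bands(1000, 60), 1))
    print("lines per band on a 720-line panel:",
          round(band_height_lines(720, 1000, 60), 1))
```

This gives a little under 17 frames per refresh. Some of that time falls in the blanking interval rather than on visible scanlines, which would bring the visible count closer to his "like 15 bands".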

EG: So why not add the motion detection you were working on directly to what Sony was doing?

JC: I'm actually in contact with Sony now about some other stuff. I was hoping to repeat my thing with Hillcrest Labs, you know, 'If you could just give me a different firmware that's hard-coded to one mode that bypasses all of this...' In fact I offered a hardware bounty if someone would reverse engineer this Sony box [indicates the HMZ-T1's processor unit] that's doing all the bad things I don't like and give a direct drive into here.

We had somebody at least that took these apart enough to know that they're using a DisplayPort driver chip between their box and here. It's probably possible to reverse-engineer it, but it would be an FPGA challenge. It would have been worth $10,000 to me to make an open-source way to do that, but I didn't find any takers on that. Hopefully I'll be able to get Sony to do that now anyways.

EG: Your passion for this is obvious...

JC: Really, all we've been doing in first-person shooters since I started is trying to make virtual reality. Really that's what we're doing with the tools we've got available. The whole difference between a game where you're directing people around and an FPS is, we're projecting you into the world to make that intensity, that sense of being there and having the world around you.

And there's only so much you can do on a screen that you're looking at. In the very best game experiences, if you're totally in the zone and totally into the game that you're doing, you might sort of fade the rest of the world out and just focus on that. But if you're wandering around E3 and stopping to look at a game, it's so clear that you're looking at something on a screen and you're looking at it in a detached way. Even the best, most advanced FPSes. So the lure of virtual reality is always there, since the '90s.

EG: And it hasn't faded for you?

JC: Well, you know I didn't think about it much in the interim times. I would talk about it at QuakeCon, and direct laser retinal scanners - but really all those years passed before I finally said, I'm going to go buy one and I'm going to see what I can do with this. But this was still like my toy pet project, I was just tinkering with this a little bit.

When we decided we were going to do the Doom 3 BFG Edition and bring it to the modern consoles, reissued, put some new levels on, it was one of those things where, sure, we'll make it run fast, I'll get 60 frames per second out of the consoles - which, by the way, was not nearly as easy as I thought. I thought, eight-year-old PC game, surely it'll just be a slam dunk to put it on there... I sweated a lot in the last couple of months working on that. But it turned out really good, I'm pleased with how it plays, it looks good.

So, we had to figure out something to differentiate ourselves, and I said, well, I've been doing all this stereoscopy and VR work, we can at least do 3DTV support for consoles on there. And both Sony and Microsoft are kind of pushing that as one of the new things they can do. I'm not the world's biggest 3DTV booster, I think that it's... it's a little bit of a dubious feature on there, especially if you have to trade frame rate away for the 3D effect.

It turned out a little neater than I expected - interestingly, I actually learned some things about the right way to do that. I had done shutter-glass stuff back in the Quake 3 and Doom 3 days originally, and I just felt very, very unimpressed by it. I didn't think it was all that compelling. You'd still find ghosting... That was another one of those things that I thought, surely 10 years later there's no ghosting on 3D displays, but it's still there, you still fight with that.

But I realised I was doing some things wrong all those years ago, where I would render the two separate views, but I didn't have the adjustment in image space on the screen that also needed to be there, which is actually screen-dependent. That's one of those things that's not immediately obvious. You know you're rendering these things that are two and a half inches apart in your game world, and when you display them on a head-mounted display that's exactly where you display, you've got a separate screen for each eye. But if you've only got one 2D screen, you actually want it to have at something like infinity, the dot should be where your eyes are, so your eyes stare straight ahead at that, so there's extra real depth.
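
The screen-dependent adjustment he describes can be illustrated with similar triangles. This is a sketch of the standard geometry, not the code in the game: for a viewer at a given distance from a single screen, the left-eye and right-eye images of a point should be separated on the glass by an amount that rises to the full eye separation as the point recedes to infinity. The viewer distance, screen width and resolution below are hypothetical values chosen for the example.

```python
import math

def screen_parallax(eye_separation, viewer_distance, point_depth):
    """On-screen separation between the two eyes' images of a point.

    All lengths share one unit. Zero at the screen plane, rising to
    eye_separation for a point at infinity (parallel lines of sight).
    """
    return eye_separation * (1.0 - viewer_distance / point_depth)

def parallax_in_pixels(parallax, screen_width, horizontal_pixels):
    """Convert a physical separation into pixels for a specific screen."""
    return parallax / screen_width * horizontal_pixels

if __name__ == "__main__":
    eye_sep = 2.5     # inches, the figure quoted in the interview
    viewer = 96.0     # assumed: viewer sits 8 feet from the screen
    at_infinity = screen_parallax(eye_sep, viewer, math.inf)
    # Assumed screen: 40 inches wide, 1280 pixels across.
    print(parallax_in_pixels(at_infinity, 40.0, 1280))
```

The same 2.5 inches maps to a different pixel offset on every screen size and resolution, which is why the adjustment has to be made in image space for each display.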

That made a bit of a difference, and then changing the game by putting in the laser sight, taking out some of the 2D effects that would detract from it...

EG: So for you, this is a better application of stereo 3D than a screen?

JC: That's what Sony sells [the HMZ-T1] as - this is a 3DTV, a personal 3DTV. It doesn't have any head-tracking, I've hot-glued my own head tracker on there as a sensor. I'm not sure that that's a marketable... They've done better than they expected I think, because it was kind of a pet project for them.

But it's sort of frustrating watching a movie or playing a game in that, because you have to hold completely still. Because if you move your head and everything moves with you, it's disorienting. And that's actually somewhat sickness-inducing. If you're playing a game on there, driving around, and you move your head in another way, it really messes with you. Much more so than on a screen, because if you move your head - even though the 3D projection on the screen is only really right for one eyeball location, and almost nobody's at that location, very few people even have their screens at the right height - but when you move your head, it's not horribly bad for what's on the screen, you still see roughly the right stuff. But when you move your head and the entire world moves with you, that's really not what your brain wants to see.

So, I don't think personal TV viewers are going to be a huge deal. Yes, maybe if your head's going back on an easy recliner or something...

EG: So do you think of [your device] as potentially a commercial product?

JC: The path on this that we took... I had justification then for spending a little bit of real time on it. No longer just tinkering in the late night hours or something, but I could spend some time integrating support into the game, doing a good job on this, instead of it just being a hacker tech demo. It's a real game that you can play in this.

I was pursuing three different directions at least on the head mounts. I've got five head mounts in various pieces, some I've built myself, some that other people have built or that are commercial products.

One direction that I was pursuing was extremely high update rates. I have gear to make a 120 frames per second OLED display, with zero latency, no processing. And I'm trying to convince Sony to rip the firmware on this to potentially get some of that going, because that makes a big difference.

With a game controller, in many ways it's surprising how skilled people can get, because manoeuvring with a game controller is not at all a natural thing. You have to choose a thumb position and then integrate over time, which is this two-steps-removal process. I still think that 60 frames per second was the right thing to do on Rage, I think it's a good deal for consoles on there, it's important to go from 30 to 60, but when I took out my old CRT monitor, I was doing tests at 120, 170 frames per second - with a game controller, it doesn't make a damn bit of difference. Maybe for some freak of nature...

But with a mouse, it's directly proportional, you're one step removed, you're mapping your hand's position, your head's position... All serious mouse players can tell the difference between 60 and 120Hz feedback rate. That was always one of the great things with Quake Arena running at 125 frames per second there. But still your average Joe gamer, set him down there, it's not going to make that much of a difference.

But in a head mount, everybody can tell the difference between 60 and 120. That's one of the reasons I've been pushing for it.

EG: How small and light can this get?

JC: Feel this... It's really light. [It is.] You're actually much more restricted by the cable. So the future is getting the cables off, it's being wireless and self-contained.

The other direction that was important - you've got frame rate on there and response to the pixels, total latency. And then field of view is the really important thing. These original 26 degree ones: completely useless. 45 degrees on this [indicates the HMZ-T1], it's just at the edge of useful.

Have you tried one of these on? I'll just power this up. I can run the game on this, but it's a pale shadow of what I've got there, so you won't want to see that. But just for comparison purposes here, to look at... I always have to give a lecture on this when somebody tries one of these on, it's very finicky in its optics. You find the right vertical spot and there's interocular adjustment on here... So it'll always be blurry somewhat around the edges but you try and find a sweet spot. But just to give you a sense of how big the desktop is on there... [We fiddle around getting the HMZ-T1 on my head.] It's an ergonomic nightmare. But just to look at it and have some sense of how much of your eye the screen fills up.

EG: Gotcha.

JC: So that's a 45 degree field of view. Clearly you're still seeing space around you, that's not cutting you off. What you want is something that covers your view. This [indicating his device] is a 90 degree horizontal field of view, 110 degree vertical. You can see I've actually masked off the display in front of the lenses here, because if that wasn't there you could see the edge of the display warped out in optics land, just like [on the HMZ-T1] you can see that you're looking at a little screen.

But the way this is set up, with this masked off here [pointing to masking tape running up the edge of the lenses], you don't ever see the edges of the screen. All you see is the game world, and it completely covers your view.

Now, I was playing around with laser projectors on hemispherical screens and a bunch of other things, but the freight train of technology that we want to hitch a ride on is mobile devices. There's so much effort going into Retina displays, all this stuff, there's billions and billions of dollars being spent there. OLED micro displays, they've got some advantages, but there's like three companies in the world that make them and they're for niche products. There's reasons to expect much faster advances in mobile phone technology.

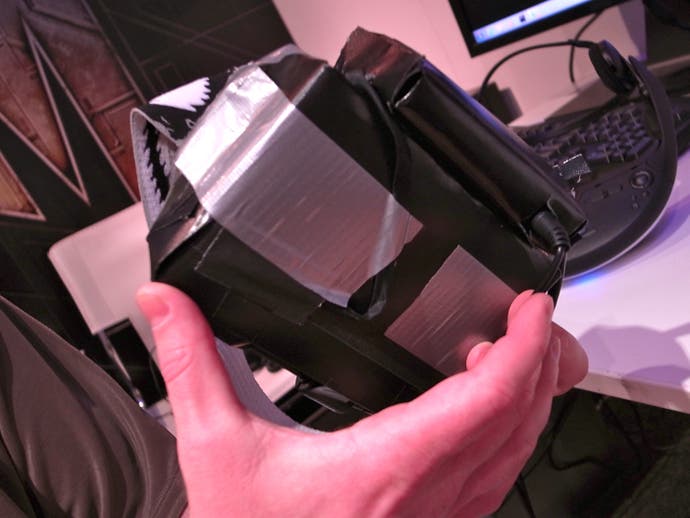

This [headset] was actually made by another guy named Palmer Luckey. I contacted him about this and he sent me one of his two prototypes. The idea here is that this is going to be a kit that people can put together. He did the optics and the display screen. It's got a 6-inch LCD panel back here, and then there's a pair of lenses per eye stretching it out to this high field of view. I added my sensors with the custom firmware and straps to hold it on your head.

But the magic on this - the reason why people didn't just do something simple like this 20 years ago for VR - is that if you do simple optic lenses like this, it fish-eyes the view a lot, so it's very distorted coming out. But what we can do now is, we have so much software power that I can invert that distortion on the computer. I render my normal view and then I reverse the eye warping in the pixel shader, and then when it gets piped out to this, it reverses it and it comes out all straight and square. So this is a huge field of view on this.
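
The idea of undoing lens distortion in software can be sketched with a simple radial model. This is an illustration rather than the shader Carmack wrote: the polynomial form and the coefficients `k1` and `k2` are hypothetical, and a real headset would use values measured for its own lenses. For each display pixel, the code asks where the lens will make that pixel appear, then samples the normally rendered image at that position, so the two warps cancel.

```python
def lens_distort(r, k1=0.22, k2=0.24):
    """Assumed lens model: display radius r appears at this radius."""
    return r * (1.0 + k1 * r ** 2 + k2 * r ** 4)

def prewarp_lookup(x, y, k1=0.22, k2=0.24):
    """Per-pixel work of the warp pass, written as plain Python.

    (x, y) is a display pixel relative to the lens centre, in normalised
    units. Returns the position in the undistorted render to sample.
    """
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale

if __name__ == "__main__":
    for x in (0.0, 0.25, 0.5, 0.75, 1.0):
        sx, _ = prewarp_lookup(x, 0.0)
        print("display x =", x, "samples render at x =", round(sx, 4))
```

Pixels near the edge sample from further out in the render than their own position, so the image sent to the panel looks squeezed towards the centre until the lens stretches it back.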

Now, the trade-off we have with this device is that it's low resolution. It's a single 1280 by 800 panel on the back here, and it's split between the two eyes, so you've only got 640 by 800 per eye. But it's obvious we're going to have 1080p panels by the end of the year, and next year 2.5K panels, so that's one thing that's going to be happening no matter what we do.
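
To put the resolution trade-off in rough numbers, the sketch below divides horizontal pixels by horizontal field of view. It assumes the pixels are spread evenly across the view, which real optics do not do, so treat the results as ballpark figures. The 90 and 45 degree values come from earlier in the interview.

```python
def per_eye_width(panel_width_pixels):
    """Horizontal pixels per eye when one panel is split down the middle."""
    return panel_width_pixels // 2

def pixels_per_degree(width_pixels, fov_degrees):
    """Average horizontal pixel density across the field of view."""
    return width_pixels / fov_degrees

if __name__ == "__main__":
    prototype = pixels_per_degree(per_eye_width(1280), 90.0)
    with_1080p = pixels_per_degree(per_eye_width(1920), 90.0)
    hmz_t1 = pixels_per_degree(1280, 45.0)  # a full 720p display per eye
    print("prototype, 1280x800 panel:", round(prototype, 1))
    print("same optics, 1080p panel: ", round(with_1080p, 1))
    print("HMZ-T1, 45 degree view:   ", round(hmz_t1, 1))
```

On these assumptions the prototype shows about 7 pixels per degree against about 28 for the HMZ-T1, and a 1080p panel would lift it to a little under 11.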

The sensor that I've got on here is a weight gyro - so it can determine orientation, but it cannot determine horizontal position. I have another demo that I can do with a Razer Hydra that can have position on there, which is really, really neat, but unfortunately it's got such a lumpy configuration space that it can be really great over here, but if you look over here you're tilted at the wrong angle... It's not quite good enough, but that is one of the major steps to take on this, the integrated position tracker.

EG: Do you see crossover between this technology and the wearable computing stuff that Google and Valve are working on?

JC: Yeah, I'm going up to meet with Valve next month. I actually think augmented reality has more commercial potential. You can imagine everyone with a smartphone five years from now having an AR goggle on there - it's something that really can touch a lot more people's lives.

But my heart's still a lot more with the immersive VR, creating virtual worlds and putting people in them. If anything, this is a little closer to reality than the AR goggles - the Google Glass vision video, that's not reality yet. That's where reality may get. But there are overlapping problem spaces. I'm looking forward to chatting with those guys about what they've done.

EG: I've only got a few minutes left, can I play?

[I play.]

EG: Fabulous. That was really entertaining! Thank you very much.

JC: That's the vision of what VR was always supposed to be like. This is most impressive for people that have actually tried other head-mounted displays, which aren't that cool.

No matter what you do on a screen, you can't have that same level of impact. You could be rendering movies in real time, but if you're just watching the screen it's not the same thing as being in there, in the same world.

EG: And fantastic gameplay applications as well, because you can quickly see what's around you...

JC: Yeah. This one doesn't have position tracking on there, but we can do that today, it's just a different integration problem. And then you can totally imagine, you want to be able to dodge, shield yourself... If you don't have the wires on there, being able to literally move around different things. The thing that people try on there, which unfortunately doesn't work on that, is you want to be able to lean around corners... All that's possible! We can do all that stuff right now.

EG: There is a slight disconnect with playing with a controller...

JC: Yeah. I may yet take the Razer Hydra and use that for gun control, because really what you want is one more degree of freedom than we've got there, because you still need to turn yourself with the controller. You actually could play doing all your turning in the real world if I suspended the wires or something, or if it was wireless, and that's kind of interesting doing that. But you still probably want axes of movement, axes of turning, but you want to position the gun separately and actually fire over your shoulder and stuff. [Giggles delightedly.]

EG: Looks like our time is up now. That was awesome. Thanks!