Nvidia G-Sync review

The future of display technology.

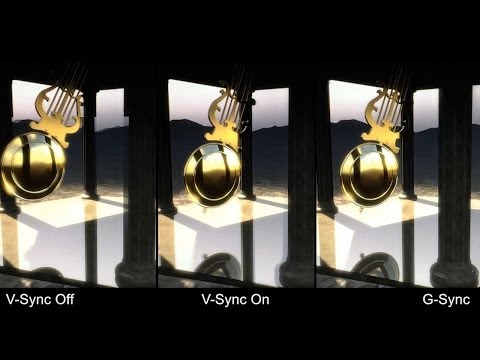

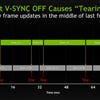

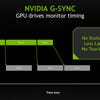

We've been analysing gameplay frame-rates since 2008, so trust us when we say that the introduction of Nvidia's G-Sync is a radical development - the next logical step in display technology. The way games are presented on-screen changes dramatically, producing a fundamentally different, better experience. Screen-tear is a thing of the past, while the off-putting stutter that accompanies a v-sync game operating with a variable frame-rate is significantly improved.

We first saw G-Sync demoed at a specially convened Nvidia launch event towards the end of last year, but for the last few weeks we've been fortunate enough to playtest it ourselves - and it's truly impressive stuff.

We're using a converted Asus VG248QE - an off-the-shelf 24-inch 1080p TN monitor supplied by Nvidia and retrofitted with the G-Sync module, which replaces the unit's standard scaler. The Asus is a decent enough screen, but on first inspection it falls well short of the 2560x1440 27-inch IPS loveliness of the Dell U2713HM we normally use for PC gaming. In truth, losing that extra resolution and screen size is a bit of a comedown - initially at least. Then you engage G-Sync, load up a game, and realise that it's going to be very, very difficult to go back. Thankfully, Nvidia tells us that G-Sync can be inserted into virtually any conventional PC monitor technology available on the market today - a state of affairs that could mean big things for mobile, laptop and desktop displays, and perhaps even HDTVs. In the short term, we can expect an array of monitors in a range of sizes and resolutions to hit retailers within the next couple of months.

Perhaps the biggest surprise to come out of our hands-on testing sessions with G-Sync is the way it redefines frame-rate perception. We can tell when a game targeting 60fps falls short, but we now realise that we judge this not just by the number of unique frames generated, but also by the number of duplicated frames - and hence, the amount of stutter. G-Sync changes this: every frame displayed is unique and there are no more duplicates, because each frame is delivered to the screen pretty much as soon as the GPU has finished rendering it.
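To make the duplicate-frame point concrete, here's a minimal Python sketch. It assumes a fixed 60Hz panel and a simplified double-buffered v-sync model in which the GPU starts each frame on a refresh boundary and the finished frame waits for the next one; triple buffering and driver queueing would change the exact pattern. All function names and the sample render times are hypothetical.

```python
import math

REFRESH_MS = 1000.0 / 60.0  # assumed fixed 60Hz refresh interval


def vsync_hold_counts(frame_times_ms):
    """Refreshes each frame costs under a simplified double-buffered v-sync
    model: rendering starts on a refresh boundary and the finished frame is
    shown at the next boundary, so the previous frame stays up meanwhile."""
    return [max(1, math.ceil(t / REFRESH_MS)) for t in frame_times_ms]


def duplicate_refreshes(frame_times_ms):
    """Count refreshes that re-show an old frame (the source of judder)."""
    return sum(n - 1 for n in vsync_hold_counts(frame_times_ms))


def vsync_intervals(frame_times_ms):
    """On-screen spacing between new frames, quantised to whole refreshes."""
    return [n * REFRESH_MS for n in vsync_hold_counts(frame_times_ms)]


def variable_refresh_intervals(frame_times_ms):
    """Idealised variable refresh: the panel refreshes as soon as a frame is
    done, so spacing simply follows render time and nothing is duplicated."""
    return list(frame_times_ms)


if __name__ == "__main__":
    # Hypothetical render times hovering around 45-55fps.
    frames = [18.0, 21.0, 19.5, 22.0, 17.5, 20.0]
    print("v-sync spacing (ms):", [round(x, 1) for x in vsync_intervals(frames)])
    print("duplicate refreshes:", duplicate_refreshes(frames))
    print("variable-refresh spacing (ms):", variable_refresh_intervals(frames))
```

In this sample every frame takes longer than 16.67ms, so under the v-sync model each one is held for two refreshes: a game rendering at roughly 45-55fps is presented as a steady 30fps, with six duplicated refreshes across six frames. The idealised variable-refresh path shows each frame for exactly as long as it took to render, with nothing duplicated.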

"Perhaps the biggest surprise to come out of our hands-on testing sessions with G-Sync is the way it redefines frame-rate perception. A consistent 45fps can look uncannily close to how we perceive 60fps gaming."

With that in mind, there's no more judder induced by your PC waiting for the screen to begin its next refresh, and no more swapping in a new frame while the screen is still in the process of displaying the current one (the cause of tearing). It's the biggest change to the interface between games machine and display since progressive scan became the standard.
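The tearing mechanism can be sketched the same way. This assumes a 1080-line panel that scans out top to bottom at a constant rate over one 60Hz refresh, ignoring the blanking interval; `tear_line` and its parameters are illustrative names, not any driver API. With v-sync off, a buffer swap that lands part-way through scanout splits the screen at the current scanline. With variable refresh, scanout begins when the frame arrives, so the swap always coincides with the top of the screen.

```python
REFRESH_MS = 1000.0 / 60.0  # assumed 60Hz panel
ACTIVE_LINES = 1080         # assumed 1080p panel; blanking interval ignored


def tear_line(flip_time_ms, scanout_start_ms=0.0,
              refresh_ms=REFRESH_MS, lines=ACTIVE_LINES):
    """Return the scanline where a tear appears if the GPU swaps buffers at
    flip_time_ms, or None if the swap lands exactly on the start of a
    refresh. Assumes scanout sweeps top to bottom linearly over one refresh
    interval beginning at scanout_start_ms."""
    phase = (flip_time_ms - scanout_start_ms) % refresh_ms
    if phase == 0:
        return None
    return int(phase / refresh_ms * lines)


if __name__ == "__main__":
    # Fixed refresh, v-sync off: swaps at arbitrary times tear mid-screen.
    for flip in (4.0, 10.0, 15.0):
        print(f"swap at {flip}ms -> tear at line {tear_line(flip)}")
    # Idealised variable refresh: the refresh starts with the swap.
    print(tear_line(8.0, scanout_start_ms=8.0))
```

Under these assumptions, `tear_line(8.0, scanout_start_ms=8.0)` returns `None`, because the refresh begins at the moment of the swap rather than on a fixed schedule.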

But how good is it? At Nvidia's Montreal launch event at the tail-end of last year, it's fair to say that virtually everyone in attendance was exceptionally impressed by the technology. Nvidia had seemingly achieved the impossible in making lower frame-rates appear as smooth as 60fps, with just a small amount of ghosting that varied depending on how low the frame-rate dipped. Tomb Raider's panning benchmark sequence at 45fps looked very, very good - almost as good as the 60Hz experience. Even at 40fps, it still looked highly presentable.

The possibilities are mouthwatering. Why target 60fps if 40fps could look so impressive? John Carmack told us how he wished this technology had been available for Rage, so certain gameplay sections could have looked more impressive visually, running at a lower frame-rate while still retaining the game's trademark fluidity. Epic's Mark Rein asked us to imagine how much further developers could push the envelope visually if they did not have to doggedly target a specific performance level. We came away from the event convinced that we were looking at something genuinely revolutionary. Now, with a G-Sync monitor in our possession, the question is to what extent the tech holds up in real-life gameplay conditions - something that couldn't really be put to the test at the Nvidia gathering.

Test #1: Tomb Raider

- GPU: GeForce GTX 760

- Settings: Ultimate (TressFX Enabled)

We replicated Nvidia's Montreal Tomb Raider set-up and found that the initial outdoor area the firm chose to demonstrate G-Sync really does showcase the technology at its best. With the game set to ultimate settings (essentially ultra, with TressFX enabled), frame-rates transition smoothly between 45fps and 60fps, and that consistency works beautifully in concert with the new display technology, producing a nigh-on flawless experience.

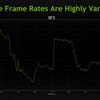

However, before we reached the first outdoor area, we had to contend with the game's opening stages, where a bound Lara frees herself from a cave-like dungeon, committing various acts of arson in order to move from one area to the next, before finally scrambling through the tunnels to freedom. It's at this point that we encountered G-Sync's ultimate nemesis - a remarkable lack of consistency in the frame-rate generated by the game. Suffice it to say that TressFX remains a huge drain on resources, resulting in reported frame-rates anywhere between 20fps and 60fps, with Lara's "survival instincts" post-processing in combination with TressFX proving to be the nadir of the experience.

When the game operates in a 45-60fps "sweet spot", G-Sync gameplay is exceptional, but once we moved outside this window, the variation in performance just didn't work for us. Turning TressFX off gave us a locked 60fps from start to finish in the same area - the optimal way to play the game, but not exactly the kind of G-Sync stress test we were hoping for.

Test #2: Battlefield 4

- GPU: GeForce GTX 760

- Settings: Ultra

For a cutting-edge title, Battlefield 4's ultra settings aren't actually as taxing as you might think, with only one tweakable truly impacting performance to a noticeable degree: deferred multi-sample anti-aliasing. Ultra ramps this up to the 4x MSAA maximum, incurring the kind of GPU load that the GTX 760 can't really handle without dropping frames. Dipping into the second BF4 campaign level - Shanghai - we instantly see variable frame-rates in the 40-60fps range, exactly the kind of spectrum where, theoretically, G-Sync works best.

Despite improved consistency compared to Tomb Raider on ultimate settings, a lot more is being asked of G-Sync here. A first-person perspective invariably means more movement on-screen and much more fast panning: exactly the kind of action that makes v-sync judder that much more noticeable. G-Sync reduces that judder significantly, but because frames aren't being delivered at an even pace, stutter is still observable.

To be clear, the experience is noticeably better than running the game with standard v-sync, and vastly superior to putting up with screen-tear, but the notion that G-Sync approximates the consistency of a locked 60fps doesn't really hold true when the underlying frame-rate can vary so radically, so quickly. We drop down to 2x MSAA to improve matters and end up turning multi-sampling off altogether as we reach the end of the Shanghai shoot-out. Frame-rates stay closer to our 60fps target, and the inevitable dips in performance are far less noticeable - G-Sync irons out the inconsistencies nicely, providing just the kind of consistent presentation we want.
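Uneven delivery can be quantified from a frame-time log. The sketch below, with hypothetical names, reports the average frame-rate alongside the frame-to-frame change in frame time; treating the largest jump as a stutter indicator is an assumption made for illustration, not an established metric.

```python
from statistics import mean


def frame_pacing_report(frame_times_ms):
    """Summarise how evenly frames were delivered (times in ms per frame)."""
    if len(frame_times_ms) < 2:
        raise ValueError("need at least two frame times")
    # Change in frame time between each consecutive pair of frames.
    deltas = [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]
    return {
        "avg_fps": 1000.0 / mean(frame_times_ms),
        "mean_delta_ms": mean(deltas),
        "max_delta_ms": max(deltas),  # assumed proxy for the worst visible hitch
    }


if __name__ == "__main__":
    steady = [20.0] * 6
    erratic = [15.0, 25.0, 15.0, 25.0, 15.0, 25.0]
    for name, log in (("steady", steady), ("erratic", erratic)):
        print(name, frame_pacing_report(log))
```

A steady run of 20ms frames and a run alternating between 15ms and 25ms both average 50fps, but the second swings by 10ms on every frame - the kind of unevenness a variable refresh display faithfully reproduces rather than hides.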

Again, our Battlefield 4 testing leaves us with the overall impression that G-Sync isn't a cure-all for massively variable frame-rates, but rather a technology that works best within a certain performance window. In Tomb Raider, 45-60fps looks really good, but the presentation of a twitch-style first-person shooter narrows that window - we were pleased with gameplay anywhere between 50fps and 60fps, but below that, the effect - while preferable to the alternatives - isn't quite as magical.

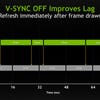

What's also evident from our BF4 experience is the importance of input latency. G-Sync improves the visual presentation of the game, but it is a display technology only - it can't mitigate the increased lag in controller response from lower frame-rates. Input lag should be reduced compared to standard v-sync and more in the ballpark of v-sync off, but with the visual artefacts removed, inconsistencies in the rest of the experience come to the fore. With that in mind, it's going to be down to the developer to keep input latency as low as possible so when performance does dip, the impact to controller response doesn't feel as pronounced.
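A toy model illustrates why lower frame-rates lengthen response time regardless of display technology. It assumes input is sampled at the start of a frame and the result reaches the screen after a fixed number of frame-times plus a fixed overhead; the function name, the two-frame pipeline depth and the 10ms overhead are all hypothetical, not measured figures.

```python
def estimated_latency_ms(fps, pipeline_frames=2, fixed_overhead_ms=10.0):
    """Toy input-to-display latency model. pipeline_frames and
    fixed_overhead_ms are hypothetical defaults, not measurements."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    frame_time_ms = 1000.0 / fps
    return pipeline_frames * frame_time_ms + fixed_overhead_ms


if __name__ == "__main__":
    for fps in (60, 50, 40):
        print(f"{fps}fps -> {estimated_latency_ms(fps):.1f}ms")
```

Under these assumptions, dropping from 60fps to 40fps adds roughly 17ms of lag, and a frame-rate that swings between the two makes controller response swing with it, however clean the image looks.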

Test #3: Crysis 3

- GPU: GeForce GTX 780

- Settings: Very High, 2x SMAA

For a short time last year, we were fortunate enough to have a six-core Intel PC on test equipped with three GeForce GTX Titans in SLI. Crysis 3 - a game we still rate as the most demanding workout for PC gaming technology - ran almost flawlessly at 60fps on very high settings at 2560x1440, producing one of the most visually overwhelming gameplay experiences we've ever enjoyed. We've since attempted to replicate that experience with solus cards - the GTX 780 and GTX 780 Ti, specifically - but the fluidity of the gameplay just doesn't compare. Even at 1080p, Crysis 3 incurs too much of a load upon a single GPU to match that beautiful, super-smooth experience we enjoyed on that absurdly powerful, cripplingly expensive PC.

G-Sync definitely improves Crysis 3 over the existing options, but again, ramping things up to the very high settings we crave simply widens the gap between the lowest and highest frame-rates, to a range that extends outside the window in which Nvidia's new tech works best. The situation is perhaps best exemplified by the first level, which sees the player moving between interior and exterior environments, the latter saturated in a taxing stormy weather effect that can halve the frame-rate - even on a graphics card as powerful as a GTX 780. The jump between frame-rates in this instance is just too jarring for G-Sync to truly work its magic. Also noticeable are caching issues, where performance dips on moving into new areas as assets stream in the background from storage into RAM. It's worth remembering that G-Sync is a display technology only - bottlenecks elsewhere in your system can still limit performance.
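The following sketch, with hypothetical names, summarises a frame-time log in terms of the spread discussed here: the lowest and highest instantaneous frame-rates, and the share of frames that fall below a chosen threshold. The 45fps default is an adjustable assumption drawn loosely from the window observed in our testing, not a figure Nvidia specifies.

```python
def window_report(frame_times_ms, min_fps=45.0):
    """Summarise the spread of a frame-time log (ms per frame). min_fps is
    an assumed lower edge of the 'works best' window, not an official value."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    rates = [1000.0 / t for t in frame_times_ms]  # instantaneous fps per frame
    below = sum(1 for r in rates if r < min_fps)
    return {
        "lowest_fps": min(rates),
        "highest_fps": max(rates),
        "share_below_min": below / len(rates),
    }


if __name__ == "__main__":
    # Hypothetical log: interior frames at 20ms, storm-effect frames at 40ms.
    log = [20.0, 20.0, 40.0, 40.0]
    print(window_report(log))
```

A log split evenly between 20ms interior frames and 40ms storm frames reports a 25-50fps spread, with half of all frames below the threshold.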

"If the GPU power's not there for 60fps, why not 40, 45 or 50fps? G-Sync offers that flexibility, but frame-rate limiters built into PC graphics menus would really need to become the standard."

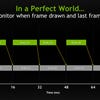

Crysis 3 retains its reputation for bringing even high-end PC hardware to its knees - G-Sync in combination with a high-end SLI set-up is required to get the kind of smooth frame-rates we want from a game as taxing as this one at its highest settings. However, one thing we should note is that G-Sync truly thrives on consistency - frames being delivered at an even pace. When frame-rates settle in the 40fps area and stay there, the fluidity really is quite remarkable - easily as impressive as anything we saw at the Montreal reveal, if not more so with a game as beautiful as this one. We are conditioned to believe that higher frame-rates are better, but there's a reason why the vast majority of console titles are locked at 30fps - a mostly unchanging frame-rate is easier on the eye, and provides a consistency in input lag that goes out of the window when performance fluctuates with an unlocked frame-rate.

Conventional monitors refresh at 60Hz, so a 30fps lock makes sense in providing that consistency: because 60 divides evenly by 30, every frame is held on-screen for exactly two refreshes (33.3ms). G-Sync should, in theory, allow for a lock at virtually any frame-rate you want. If the GPU power's not there for a sustained 60fps experience or something close to it, why not target 40, 45 or 50fps instead? Based on what we've seen in our G-Sync testing across all of these titles, plus the various gaming benchmarks we usually deploy in GPU testing (Sleeping Dogs looked especially good at 45fps), it could work out very, very nicely.
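The pacing argument can be checked with exact arithmetic. This sketch assumes the GPU never stalls (roughly what triple buffering gives) and that each finished frame appears at the next 60Hz refresh boundary; the variable-refresh function is an idealisation in which every frame is shown the moment it's done. The function names are hypothetical, and Python's `fractions` module is used so refresh boundaries aren't missed through floating-point rounding.

```python
import math
from fractions import Fraction


def vsync_present_intervals(fps, frames=8, refresh_hz=60):
    """On-screen spacing (ms) of a steady render rate on a fixed-rate v-sync
    display. Simplified: the GPU never stalls and each finished frame is
    shown at the next refresh boundary at or after completion."""
    frame_ms = Fraction(1000) / Fraction(fps)
    refresh_ms = Fraction(1000) / Fraction(refresh_hz)
    # Time each frame actually appears: rounded up to a refresh boundary.
    shown = [math.ceil(k * frame_ms / refresh_ms) * refresh_ms
             for k in range(1, frames + 1)]
    return [float(b - a) for a, b in zip(shown, shown[1:])]


def variable_refresh_spacing(fps, frames=8):
    """Idealised variable refresh: every frame is shown as soon as it's done."""
    return [1000.0 / fps] * (frames - 1)


if __name__ == "__main__":
    for fps in (30, 40):
        print(fps, "fps v-sync:", [round(x, 2) for x in vsync_present_intervals(fps)])
    print("40 fps variable refresh:", variable_refresh_spacing(40))
```

At 30fps every frame lands on a boundary, giving an unbroken 33.33ms cadence. At 40fps the on-screen spacing alternates between 16.67ms and 33.33ms - the judder that rules out a 40fps lock on a conventional display - while the variable-refresh version delivers a steady 25ms per frame.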

Nvidia G-Sync: the Digital Foundry verdict

G-Sync is the best possible hardware solution to the age-old problems of screen-tear and v-sync judder. By putting the graphics card fully in charge of the screen refresh, effectively we have the visual integrity of v-sync along with the ability to run at unlocked frame-rates - something previously only possible by enduring ugly screen-tear. Out of the box, G-Sync provides a clearly superior experience, but it is not quite the magic bullet that solves all the issues of fluidity in PC gaming - something needs to change software-side too.

When we first looked at G-Sync at Nvidia's Montreal launch event, we marvelled at the sheer consistency of the experience in the pendulum and Tomb Raider demos. A drop down to 45fps incurred a little ghosting (at 45fps each frame stays on-screen for around 22ms, longer than the 16.67ms of a 60Hz refresh, after all) but the fluidity of the experience looked very, very similar to the same demos running at 60fps - a remarkable achievement. However, the reason they looked so good was the regularity of the frame-rate - and that's not something typically associated with PC gaming. With games running completely unlocked, frame-rate consistency during play remains highly variable. G-Sync can mitigate the effects of this - but only to a certain degree.

There's a frame-rate threshold where the G-Sync effect begins to falter. It'll change from person to person, and from game to game, but across our testing, we found the sweet spot to be between 50fps and 60fps in fast action games. Continual fluctuations beneath that were noticeable, and while the overall presentation is preferable to v-sync, it still looked and felt not quite right. Owing to the nigh-on infinite combinations of PC components out there, the onus will be on users to tune their quality settings to hit the window, and, equally importantly, developers should aim for a consistent performance level across the game. It's no use tweaking your settings for optimal gameplay, only to find that the next level of the title incurs a much heavier GPU load. And if our G-Sync testing has taught us anything, it's that - within reason - consistent frame-rates are more important than the fastest possible rendering in any given situation.

That being the case, with careful application, G-Sync opens up a lot more possibilities. In the era of the 60Hz monitor, the most consistent, judder-free experience we can get is either a locked 60fps, or else the console-standard 30fps. As we've discussed, with G-Sync the target frame-rate could theoretically be set anywhere (40fps, for example) and locked there without the stutter you'd have on a current display. To make that possible, what we really need to see is the introduction of frame-rate limiters in PC graphics settings. This has interesting implications for GPU benchmarking, because lowest frame-rates suddenly become much more important than the review-standard averages.
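A frame-rate limiter of the kind we're asking for can be sketched in a few lines. This is a hypothetical illustration rather than any engine's or driver's implementation: after each frame it sleeps until the next deadline, and if a frame overruns, it resets the schedule instead of rushing subsequent frames. Note that `time.sleep` granularity is OS-dependent, so a production limiter would likely need a more precise wait.

```python
import time


class FrameLimiter:
    """Hypothetical frame-rate cap: after each frame, sleep until the next
    deadline so frames are handed to the display at an even pace."""

    def __init__(self, target_fps):
        if target_fps <= 0:
            raise ValueError("target_fps must be positive")
        self.interval = 1.0 / target_fps
        self.next_deadline = time.perf_counter() + self.interval

    def wait(self):
        """Block until the current frame's deadline, then schedule the next."""
        now = time.perf_counter()
        if now < self.next_deadline:
            time.sleep(self.next_deadline - now)
            self.next_deadline += self.interval
        else:
            # The frame overran its budget: resync rather than rushing the
            # following frames to catch up, which would itself cause stutter.
            self.next_deadline = now + self.interval


if __name__ == "__main__":
    limiter = FrameLimiter(40)  # the 40fps example target discussed above
    last = time.perf_counter()
    for _ in range(5):
        time.sleep(0.01)  # stand-in for ~10ms of rendering work
        limiter.wait()
        now = time.perf_counter()
        print(f"frame interval: {(now - last) * 1000:.1f}ms")
        last = now
```

In principle, pairing a cap like this with a variable refresh display means the screen refreshes on the limiter's even cadence rather than whenever each frame happens to finish.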

Overall, G-Sync is a hardware triumph, but the quest for a consistent, enjoyable gameplay experience is far from over. By eliminating the visual artefacts, G-Sync lays bare the underlying problem of wildly variable gameplay frame-rates in PC gaming and highlights the issue of inconsistent input latency. If the hardware problem is now fixed, what's required are software solutions to make the most of this exceptional technology.