The evolution of Xbox One - as told by the SDK leak

19 months of behind-the-scenes engineering encompassing the rise and fall of Kinect and a revised focus on gaming performance.

The recent leak of the Xbox One development tools - along with the accompanying documentation - gives us a fascinating insight into the creation and evolution of Microsoft's latest console. Recent innovations, such as the release of a seventh CPU core for game developers, have come to light owing to the leak, but the docs contain much more in the way of fascinating background information. In fact, they give us an entire timeline of the system's development from the moment alpha devkits first arrived with developers way back in April 2012, all the way through to refinements and enhancements added as recently as November 2014.

While we can't dig into every specific API and optimisation created and added across this 19-month period, thankfully the work of summarising Xbox One's key additions has mostly been done for us. The 'What's New' section of the documentation doesn't just highlight the most recent changes to the system, it incorporates links to the equivalent section from each and every SDK revision from the system's inception, highlighting milestones and changes that tell us how the system came to be, how the system was improved - and hinting at features yet to be.

What's also fascinating is the change in focus as we progress through the timeline, reflecting the change in marketing and the loss of Kinect as an in-the-box staple - engineering effort on the motion control 'NUI' natural user interface falls off a cliff in favour of GPU and performance profiling optimisations, many of which actually come at the expense of the camera's feature-set.

What's also clear is that Microsoft's GPU issues were very much a known quantity internally - even before the launch. Perhaps the biggest surprise of the SDK notes - beyond the seventh CPU core revelation - is the existence of two separate graphics drivers for the Xbox One's onboard Radeon hardware. We know about the mono-driver, Microsoft's GPU interface designed to offer the best performance from the hardware, but there was also the user-mode driver (UMD) - something that you'll see referenced throughout this piece.

A well-placed source informs us that while it was an Xbox One-specific driver, it had a lot of additional checking and error-catching, designed to help debugging and to get software up and running on the console as soon as possible - at the expense of raw performance. But we're getting ahead of ourselves here. Let's begin at the beginning.

Alpha hardware: April 2012 to February 2013

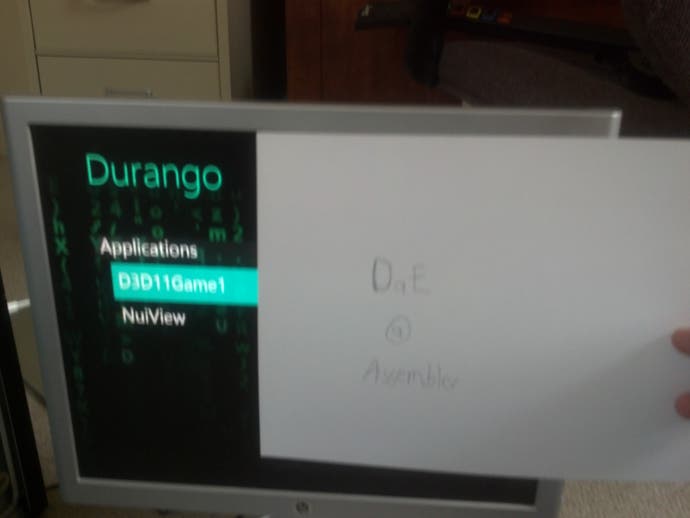

As the first alpha development kits made their way to developers, there was no Xbox One as we know it - Microsoft's next-gen console project was known only by its codename, Durango (a Mexican state, if you're interested). Indeed, at this point, there was no actual console hardware in existence - developers were provided with what was basically a generic-looking PC, broadly equivalent to Microsoft's vision for the technical capabilities of the retail console. It's this machine that noted hacker SuperDAE acquired, presumably via the platform holder's developer portal, with the unit eventually ending up for sale on eBay. At this time, the basics of the machine became evident - Durango was based on low-power 64-bit x86 cores, and would use DirectX 11-class graphics hardware.

So what was the state of the actual Xbox One silicon at this point? Well, the APU containing the CPU, GPU, Move Engines and ESRAM would have been designed, but the physical hardware would still have been in development in Microsoft labs. However, software development continued apace on the alpha platform. What's clear is that much of the coding effort was still concentrated on multimedia features. Connectivity to other devices using SmartGlass, natural user interface features based on Kinect 2.0, and a focus on multimedia as a whole are themes that many of the updates seem to focus on during this period.

Natural User Interface: Remember Input One and the Kinect/TV focus? During the alpha hardware period, the oft-maligned motion sensor was shaping up as a central pillar of the system, with developers starting to get an idea of what could be achieved with the second generation hardware. The earliest versions of the SDK offered some interesting tools including the ability to compare the original Kinect output with the pre-Alpha Durango sensor output. At this point the hardware was still far from final and only the resulting IR and depth data streams were made available for planning purposes.

Looking at later release notes, we see a huge selection of changes and improvements to support the new sensor, via the NUI 2.0 API. Advanced skeletal tracking, hand state detection, expression tracking, identification services, and new speech systems are all introduced in August 2012 with many additional features introduced over the following months. At this point, it's clear that Kinect 2.0 was a huge feature for the Xbox One and developers were provided with plenty of new toys to play with. What's interesting is that by 2012, Microsoft would have been aware of the swift drop-off in interest in Kinect for Xbox 360, yet the focus on the motion sensor continued nonetheless. Perhaps the platform holder thought that lightning would strike twice, but it's equally likely that all the key decisions on the hardware make-up had already been taken - particularly the focus on the 'living room of the future' - and the company was essentially locked in.

Graphics: The original development PCs shipped with a standard AMD PC graphics card and developers were provided with a generic DirectX 11 "manufacturer supplied driver". As early as April 2012, however, Microsoft began to roll out a Durango-specific driver with limited functionality known as the "Durango user-mode driver" or UMD. Additional features were added regularly to the UMD including hardware video encoding and decoding, D3D 11.1 tessellation functionality, changes to shader handling and more. By August, the user-mode driver had become the default; two months later, support for the generic legacy driver was removed altogether. Driver updates continued to appear regularly, as one might expect, but it wasn't until the later release of the D3D Monolithic driver - the highly optimised GPU interface that Microsoft continued to work on until the UMD was eventually deprecated - that things started to really take off.

Development tools: In May of 2012 Microsoft reintroduces the excellent PIX performance profiler, which has long served the Xbox development community for analysing and understanding the performance characteristics of in-development code. The initial release seems rather basic, and improvements to PIX come thick and fast following its introduction. These include a resource browser with views into render targets and the depth buffer, a visual timeline of GPU draw events, an evolving user interface and the ability to capture low-overhead run-time timing data from CPU and GPU events for more detailed analysis. On top of that there's user-defined event support, and the initial release of a system monitor for real-time profiling, capable of displaying performance counters streamed live from the devkit. Plenty of other tools are added and improved throughout this early period too, including changes to the Visual Studio coding environment, additional templates, faster deployment for testing, architecture changes, and improved code generation.

Input: Companion app support, at least at a basic level, is made available right out of the gate, renamed as SmartGlass in August. Just prior, support for the controller's impulse triggers is introduced, suggesting that work on the make-up of the new gamepad is nearing completion at this point. The APIs for input are in a state of flux throughout this period.

Audio: Audio doesn't seem to play a massive role in these early days without the final hardware in place (Xbox One has rather powerful internal hardware dedicated to audio in the APU, very similar to AMD's TrueAudio block found in its most recent PC graphics cards). Basic functionality is available early on but audio hardware emulation is added to the mix in August, allowing developers to test code designed for sound applications.

Beta hardware - February 2013 to August 2013

By early 2013, we're fast approaching the official reveal of the console. By this point the design of the system finally comes together. Somewhere around February, beta devkits are in circulation with developers, based on the retail console form-factor and using final silicon - although the supporting software was still very much in development at this time. The early beta kits are described as 'zebra' hardware - white consoles with black livery. Contemporary reports suggest that the black patterns vary from kit to kit in order to identify the source of any hardware leaks - a faintly amusing state of affairs given that SuperDAE and VGLeaks had already revealed virtually everything there was to know about Durango's technological make-up. It's interesting to note that some of these old leaks still exist almost verbatim in the leaked documentation.

By March 2013, all support for the original alpha hardware is gone, and the Zebra beta hardware becomes the standard box for developers to work with. Across the following months, these kits are eventually replaced by production Xbox One hardware, differentiated from the Zebra kits not just by their more standard physical appearance, but also in their ability to adapt to the final, enhanced CPU and GPU clock-speeds (1.75GHz and 853MHz respectively). During this period, development on the software side continued:

GameDVR: Obviously in development for some time, it's nevertheless curious that the GameDVR's appearance in the development environment as of March 2013 coincides with the announcement of PlayStation 4 and its very similar feature-set. Sharing of the gameplay experience was a key consideration for both Sony and Microsoft, and doubtless would have been informed by the very similar hardware architecture both companies opted to license from AMD.

Graphics: Changes continue to pile up for the user-mode driver but by July 2013, Microsoft begins to introduce a preview version of the Monolithic Direct3D driver (known as the mono-driver when mentioned publicly), designed to evolve the stock D3D features to be more console-specific by eliminating unnecessary features and reducing unnecessary overheads. Yes, remarkably Microsoft had two GPU drivers in circulation, all the way up to May 2014 when the user-mode driver was finally consigned to the dustbin. The mono-driver becomes the key to improved performance for future Xbox One games but the version utilised for launch titles would have been somewhat sub-optimal compared to the version in circulation today. One section in the SDK in this period gleefully exclaims "Tear No More!" - a feature that seems to see the introduction of v-sync and adaptive v-sync support. In addition, support for 720p output is added, but it appears that output is simply downscaled from 1080p.
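To illustrate what the "Tear No More!" options trade off, here is a minimal sketch of the general idea behind v-sync and adaptive v-sync. It is not taken from the SDK: the function name `displayed_frame_ms`, its parameters and the 60Hz refresh rate are all assumptions made for illustration.

```python
import math

REFRESH_MS = 1000.0 / 60.0  # assumption: a 60Hz display


def displayed_frame_ms(render_ms, refresh_ms=REFRESH_MS, adaptive=False):
    """Estimate how long a frame stays on screen (hypothetical helper).

    - Frame finished within one refresh: wait for the vertical blank,
      so there is no tearing in either mode.
    - Frame is late and v-sync is strict: wait for the NEXT vertical
      blank, which rounds the frame time up to a whole refresh multiple.
    - Frame is late and v-sync is adaptive: flip immediately, accepting
      a torn frame in exchange for not stalling.
    """
    if render_ms <= refresh_ms:
        return refresh_ms
    if adaptive:
        return render_ms
    return math.ceil(render_ms / refresh_ms) * refresh_ms


# A 20ms frame just misses the 16.7ms budget.
strict = displayed_frame_ms(20.0, adaptive=False)    # stalls to two refreshes
relaxed = displayed_frame_ms(20.0, adaptive=True)    # flips straight away
```

With strict v-sync, a frame that misses its budget by a few milliseconds effectively costs a whole extra refresh, which is why an adaptive mode is attractive for titles hovering around their target frame-rate.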

Xbox Live: Many of the features associated with Xbox One come online in 2013 and improved Xbox Live Services APIs are introduced, including profile service, social leaderboards, and 'rich presence', displaying what a user might be doing at any point, and real-time activity.

Natural User Interface: Further enhancements stack up for Kinect, as the arrival of final silicon is joined by production-level camera hardware. At this time, updates are focused on providing additional granularity for Kinect to work with. Hand tip and thumb joint detection, joint orientation, mouth and eye state detection, improved seated detection, lean detection and various other APIs designed to support interaction are all added. There's the sense from the documentation here that full Kinect functionality didn't arrive until rather late in the day. This would have made camera-specific titles difficult to develop, perhaps explaining why even Microsoft itself failed to support Kinect properly at launch.

The final update before launch appears to take place in August 2013, where the third service pack (or QFE3) for the Xbox One OS is released. Interestingly, there is also a section in the documentation dedicated to preparing titles for the Xbox One launch. One of the more interesting elements contained within this section is the case for using dynamic resolutions. The document specifically notes that if developers are having trouble hitting their target frame-rate at the target resolution, they should consider resizing resolution based on GPU load. What's fascinating is that Microsoft provides a method for doing just that, allowing the system to adjust resolution on a frame-by-frame basis. It's interesting to note that the only title we're aware of that actually uses this technology is Wolfenstein: The New Order, but it's unclear whether the Microsoft API is utilised, or whether id Tech 5's custom approach is used instead. As the same effect is in use on the PS4 version, we suspect it's the latter.
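The principle of resizing resolution based on GPU load can be sketched in a few lines. This is a generic illustration rather than Microsoft's API or id Tech 5's implementation: the class name, the step size, the 70 per cent floor and the 60fps budget are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class DynamicResolution:
    """Hypothetical per-frame resolution controller (illustrative only)."""
    target_ms: float = 16.7   # assumption: 60fps frame budget
    min_scale: float = 0.70   # assumption: never drop below 70% per axis
    max_scale: float = 1.00
    step: float = 0.05        # assumption: adjust in 5% increments
    scale: float = 1.00

    def update(self, gpu_ms: float) -> float:
        """Adjust the scale from the previous frame's GPU time."""
        if gpu_ms > self.target_ms:
            # Over budget: render fewer pixels next frame.
            self.scale = max(self.min_scale, round(self.scale - self.step, 4))
        elif gpu_ms < 0.9 * self.target_ms:
            # Comfortable headroom: claw resolution back.
            self.scale = min(self.max_scale, round(self.scale + self.step, 4))
        return self.scale

    def render_size(self, width: int = 1920, height: int = 1080):
        """Render-target size for the current scale; the scaler handles output."""
        return int(round(width * self.scale)), int(round(height * self.scale))


controller = DynamicResolution()
controller.update(20.0)            # a 20ms frame blows the 16.7ms budget
size = controller.render_size()    # slightly below 1920x1080 on both axes
```

The gap between the "shrink" and "grow" thresholds is deliberate: without some hysteresis, a title sitting right on its frame budget would flip resolution every frame.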

By this point, Microsoft is fully aware that many titles (including several of its own) will launch at sub-native resolutions, and the documentation points out the strengths of the Xbox One's scaler, mentioning that it's better than the Xbox 360's, which the platform holder points out was already a very good piece of technology. This may be true but at launch, the Xbox One had some pretty nasty scaling issues (not least of which was the artificially intense sharpening filter, removed in early 2014) leaving us to wonder how Microsoft could call the tech a "great scaler".

The platform holder also makes the case for using AMD's proprietary EQAA anti-aliasing tech, which had been added a few months prior to launch - though we're not aware of any shipping title that actually uses it. The case is also made for using 4x MSAA if a title is already using the 2x variant: GPU overhead is said to be low if the first two fragments of every pixel are stored in ESRAM, with the last two kept in main memory (which are not accessed frequently due to compression). It's an interesting theory, and may have been utilised in Forza Horizon 2 - the only AAA Xbox One title we're aware of that actually uses 4x multi-sampling anti-aliasing.
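Some back-of-the-envelope arithmetic shows why splitting the fragments this way is appealing. The figures below are assumptions rather than numbers from the documentation: a 1080p colour target at four bytes per sample, no depth buffer counted, and the 32MB ESRAM capacity from Microsoft's public hardware specifications. The helper `msaa_split` is hypothetical.

```python
ESRAM_BYTES = 32 * 1024 * 1024  # assumption: 32MB of ESRAM, per public specs


def msaa_split(width, height, samples, esram_samples, bytes_per_sample=4):
    """Bytes of colour data landing in ESRAM vs main memory (hypothetical).

    The first `esram_samples` fragments of each pixel go to ESRAM and the
    remainder to main memory, ignoring compression and depth.
    """
    plane = width * height * bytes_per_sample   # one fragment per pixel
    in_esram = plane * min(samples, esram_samples)
    in_dram = plane * max(0, samples - esram_samples)
    return in_esram, in_dram


esram_used, dram_used = msaa_split(1920, 1080, samples=4, esram_samples=2)
# esram_used == dram_used == 16,588,800 bytes (about 15.8MiB each)
esram_share = esram_used / ESRAM_BYTES  # roughly half of the scratchpad
```

Under these assumptions, the ESRAM cost of a 4x target is no higher than that of a 2x target, which leaves the rest of the scratchpad free for depth and other render targets.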

February to November 2014 - and beyond

There's a big hole in the Xbox One documentation, with no additional "What's New" notes posted between August 2013 and February 2014. Whether they were simply omitted or do not exist at all isn't clear. However, early 2014 is a crucial period for Microsoft, as it attempts to correct its wonky launch strategy and to address the GPU differential with PlayStation 4 as best as it can. Straight away we see notes indicating that developers now have more control over ESRAM resource management - apparently a bottleneck for many launch titles.

Graphics: Throughout the coming months there are plenty of updates corresponding to the low-level D3D monolithic runtime. Hardware video encoding/decoding is added in March, along with asynchronous GPU compute support. By May, support for the user-mode driver is completely removed in favour of the mono-driver, explaining (in part at least) the marked improvement in Xbox One GPU performance in shipping titles from Q2 2014 onwards. The focus on the mono-driver appears to pay off as throughout 2014, Microsoft posts GPU performance improvements nearly every month, including some remarkable increases in draw call efficiency in July.

Much of the improvement to GPU performance stems from the resources pulled from Kinect functionality - the June XDK and its impact were widely documented at the time. On top of the GPU resources made available by turning off Kinect features, titles that opt not to use Kinect Speech, depth, and IR processing are afforded additional processing time on a CPU core. Additionally, cutting out customised voice commands helps increase GPU performance by freeing up an additional 1GB/s of bandwidth.

Scaler improvements: Scaler quality is also given a bump in April with new controls available for developers. According to the update it's possible to choose from seven fixed types of upscaling including bilinear, four-tap sinc, plus four/six/eight/ten-tap Lanczos, along with six-tap band-limited Lanczos scaling. This additional level of control significantly improves image quality on sub-1080p titles and may explain why the impact of the 900p vs 1080p resolution differential diminished in shipping titles released later in 2014.
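For a sense of how the Lanczos options differ, here is a small sketch that computes filter weights using the standard Lanczos kernel. It says nothing about how the Xbox One scaler is implemented in hardware, and the mapping of "N-tap" to a kernel with N/2 lobes is our assumption; the function names are hypothetical.

```python
import math


def lanczos(x, a):
    """Standard Lanczos kernel with `a` lobes: sinc(x) * sinc(x / a)."""
    if x == 0:
        return 1.0
    if abs(x) >= a:
        return 0.0
    px = math.pi * x
    return a * math.sin(px) * math.sin(px / a) / (px * px)


def tap_weights(taps, phase=0.5):
    """Normalised weights for a `taps`-tap filter (illustrative only).

    `phase` is the fractional position of the output sample between the
    two central source pixels; 0.5 means exactly halfway.
    """
    a = taps // 2  # assumption: an N-tap filter uses N/2 lobes
    offsets = [i - (a - 1) - phase for i in range(taps)]
    raw = [lanczos(offset, a) for offset in offsets]
    total = sum(raw)
    return [w / total for w in raw]


four_tap = tap_weights(4)
ten_tap = tap_weights(10)
```

The outer weights of the four-tap filter come out negative, which is what gives Lanczos its characteristic sharpness (and its ringing) compared with bilinear filtering, where every weight is positive.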

Audio: By May 2014 we see the inclusion of background music support when using a snapped app, something the PS4 still isn't capable of. A proper sound mixer is also made available for adjusting the volume of snapped apps or when using Kinect for chat. In July there are further refinements to audio hardware/CPU interaction: memory used by the audio control processor (ACP) is moved from cached to uncached memory, reducing the CPU cost of managing and updating the ACP. USB microphone support is also added into the mix.

Development tools: Microsoft's profiler, PIX, continues to receive updates and in September, Microsoft introduces an ESRAM viewer into the system designed to help developers maximise usage of the ultra-fast scratchpad. It's interesting to note that throughout the docs, there's discussion of a dedicated screenshot function available to developers - something that would certainly be useful for gamers as well as part of the main OS. The continuous A/V capture feature available on PIX for Xbox 360 (which records a constant 60-second stream of gameplay output while PIX monitors the game) is also made available for Xbox One in August. On Xbox One, the system is able to continuously record this stream, unlike the 360.

Input and NUI: Most of the changes made to Kinect functionality - aside from stealing away processing time - seem to focus more on bug fixes. Tracking errors are tackled and general improvements are made, but there is clearly less of a focus placed on getting more from the sensor. Improvements are also made to controller input, such as determining exactly when controller input processing happens, providing greater control over the updating of input and on which cores to execute processing. There's also the inclusion of keyboard support for "exclusive apps in retail" suggesting that apps can now make use of keyboard input.

Multiplayer changes: One of the largest changes presented in these documents concerns the way multiplayer is handled. Labelled as "Multiplayer 2014" and "Multiplayer 2015" respectively, there are two different approaches presented here in relation to how players are connected to one another for online play. The existing multiplayer design is based on the concept of the game party, where titles use a system-level construct to manage an active group of users when playing a game together. All functions surrounding joining and matchmaking are routed through this game party. The document describes how players within a party are placed into sessions held in the "multiplayer session directory (MPSD)", the cloud service used to store and retrieve multiplayer sessions, with those sessions responsible for party management.

However, for Multiplayer 2015 Microsoft has removed this barrier by eliminating the need to access multiplayer functions through the party system. Now, multiplayer-related functions are accessed directly using MPSD sessions, bypassing the party system entirely. This appears to streamline the process by requiring fewer back-and-forth calls that can potentially cause performance issues for a particular session. The 2015 Multiplayer design and APIs were made available in preview form back in September 2014, but it's not yet clear when we will begin to see titles utilising this design. There has been discussion as to whether Halo: The Master Chief Collection uses the 2015 design, but with the new technology introduced (in preview form no less) so close to the game's launch, this seems rather unlikely.

Wrap-up: evolving from multimedia centre to an out-and-out games machine

At Digital Foundry, we have something of a burning need to understand how gaming hardware works, and the SDK leak offers us the largest mine of information we have on developing for the Xbox One since we interviewed the hardware architects way back in October 2013. The technical minutiae are often inconsequential, sometimes revelatory, but always fascinating, providing plenty of insight into the direction Microsoft has been moving across a hectic 19 months of console development.

It's fascinating to compare the timeline of improvements, optimisations and new features, almost all of which seem to come at the expense of the launch unit's extensive Kinect functionality. With the seventh CPU core made available to developers along with additional GPU resources, plus continuing optimisations to the mono-driver, there's a clear trajectory of behind-the-scenes improvements that may explain how Xbox One has managed to remain competitive with PS4 in several high-profile titles. But plenty of important information remains unknown - Microsoft has pared back the system reservation CPU-side, but what about the mammoth 3GB of memory kept back from developers?

Of course, it's worth noting that improvement doesn't happen in a vacuum. It's difficult to imagine that Sony hasn't boosted the capabilities of its hardware in a similar fashion. There's no doubt that Sony is slower to roll out feature updates to consumers but the development side remains a mystery at this time. Information tends to trickle out slowly and conservatively, usually via GDC/SIGGRAPH developer presentations (it took around eight months for our story on the PS4 system memory reservation to be confirmed officially), but perhaps the Xbox One leak will be matched with a Sony equivalent at some point soon. Regardless, the major takeaway is that titles should improve in 2015 - not just because of refined development tools, but also because game-makers will be embarking on their second-gen PS4/Xbox One projects - and we can't wait to see those in action.