Inside Killzone: Shadow Fall

Digital Foundry analyses Guerrilla's own post-mortem of its PlayStation 4 reveal.

Guerrilla Games has released its own post-mortem for the Killzone: Shadow Fall demo revealed at the PlayStation Meeting, giving a range of insights into the power of the PlayStation 4 and the studio's fundamental approach to the new hardware. A number of stats are included, among them the fact that the demo used around 4.6GB of memory, with 3GB of that reserved exclusively for graphics.

The talk begins with a few basic facts - namely that Killzone: Shadow Fall is indeed a PlayStation 4 launch title, and that the target - for the announcement demo, at least - is a "solid" 30 frames per second at native 1080p resolution. The studio also reveals that this is just one of two internal projects it is working on, the other described as an "unannounced new IP".

What follows is a complete breakdown of almost every technical facet of the demo, but what stood out to us was the lavish use of the GDDR5 memory in the PlayStation 4 - 3GB of RAM is reserved for the core components of the visuals, including the mesh geometry, the textures and the render targets (the composite elements that are combined to form the final image). Guerrilla also reveals that it is using Nvidia's post-process FXAA as its chosen anti-aliasing solution, so that 3GB isn't artificially bloated by an AA technique like multi-sampling. The presentation notes that there's no MSAA "yet", but Guerrilla specifically mentions it is working with Sony's Advanced Technology Group (ATG) on a new form of anti-aliasing, tantalisingly dubbed "TMAA".
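To put the multi-sampling point in perspective, here is a rough back-of-the-envelope sketch of how much memory a single 1080p render target occupies, and how that grows if every pixel stores several samples. The four bytes per pixel and the 4x sample count are our own illustrative assumptions, not figures from Guerrilla's presentation, and real hardware may compress multi-sampled surfaces.

```python
# Rough render target footprint. The pixel format and sample count are
# illustrative assumptions, not numbers from Guerrilla's presentation.
MIB = 1024 * 1024


def render_target_bytes(width, height, bytes_per_pixel, msaa_samples=1):
    """Uncompressed size of one render target in bytes."""
    return width * height * bytes_per_pixel * msaa_samples


single = render_target_bytes(1920, 1080, 4)                 # e.g. 8 bits per channel RGBA
msaa4 = render_target_bytes(1920, 1080, 4, msaa_samples=4)  # four samples stored per pixel

print(f"1080p target, no MSAA: {single / MIB:.1f} MiB")
print(f"1080p target, 4x MSAA: {msaa4 / MIB:.1f} MiB")
```

A deferred renderer keeps several such targets alive at once, so the multiplier applies to each of them. A post-process filter like FXAA works on the finished image and adds no per-sample storage.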

Factoring in that this is just a demo of a first-gen launch title, 3GB is an astonishing amount of memory being used to generate a 1080p image - as developers warned us recently, lack of video RAM could well prove to be a key issue for PC gaming as we transition into the next console era.

Guerrilla also outlines its philosophy for utilising the eight-core CPU in the PlayStation 4, again corroborating the views of other developers on how to extract the best performance from the AMD set-up. Guerrilla has evolved the model it developed for PlayStation 3 - it has one thread set up as an "orchestrator" (this would have been the PPU on the PS3), scheduling tasks which are then parallelised over every core. This is the so-called "jobs-based" technique that was used in a great many current-gen titles in order to make the most of the 360's six threads and the PS3's six available SPUs. In going "wide" across many cores, Guerrilla has upped the ante: 80 per cent of rendering code was "jobified" on PS3, 10 per cent of game logic and 20 per cent of AI code. On PS4, those stats rise to 90 per cent, 80 per cent and 80 per cent respectively.
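For readers unfamiliar with the jobs-based approach, the shape of it can be sketched in a few lines. This is a minimal illustration only: the job functions, their names and the worker count are hypothetical, and Python's threads will not deliver the true multi-core parallelism a native engine gets. It shows the structure, with one orchestrator handing small units of work to a pool of workers and waiting for the results.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical jobs: each is a small, independent unit of work.
def update_animation(entity_id):
    return f"animation:{entity_id}"


def update_ai(entity_id):
    return f"ai:{entity_id}"


def build_draw_list(entity_id):
    return f"draw:{entity_id}"


def run_frame(jobs, worker_count=5):
    """Orchestrator: hand every job to the worker pool, then wait for all results.

    worker_count is an arbitrary value chosen for this sketch.
    """
    with ThreadPoolExecutor(max_workers=worker_count) as pool:
        futures = [pool.submit(func, arg) for func, arg in jobs]
        # Results come back in submission order, whichever worker ran them.
        return [future.result() for future in futures]


frame_jobs = [(update_animation, 1), (update_ai, 1), (build_draw_list, 1),
              (update_animation, 2), (update_ai, 2), (build_draw_list, 2)]
print(run_frame(frame_jobs))
```

The more of a frame's work that can be expressed as jobs like these, the more evenly it spreads across the available cores, which is what the "jobified" percentages describe.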

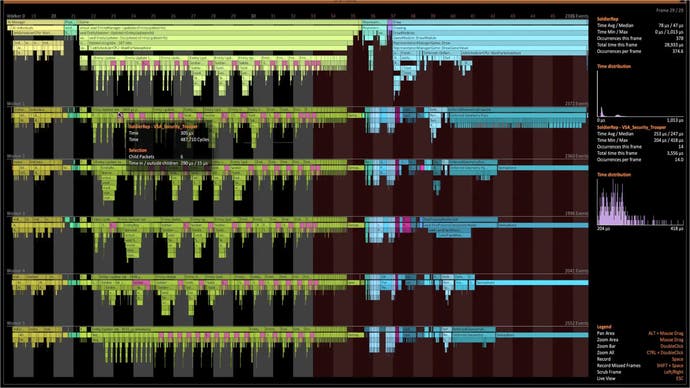

Interestingly, Guerrilla's presentation explicitly refers to "every" core being used, but the screenshots of the profiling tools - developed by the team itself owing to the work-in-progress nature of Sony's own analysis software - only seem to explicitly identify five worker threads. As of right now, we have no real idea of how much CPU time the PS4's new operating system sucks up and how much is left to game developers, and we understand that the system reservation is up in the air. However, the profiling tool shows that in the here and now there are indeed five worker threads, plus the "orchestrator", and each of them is locked to a single core. The inference we can draw right now is that while OS reservation hasn't been locked down, developers have access to at least six of the eight cores of the PS4's CPU.

In terms of actual optimisation, "thread contention" - the process of one CPU thread being held up waiting for the results of another - proved to be something of an issue for Guerrilla. Rather than going down to the low level and addressing the CPU directly, the developer points to high-level optimisations as the key to best performance.
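The presentation does not spell out which high-level changes were made, so the following is a generic illustration of the idea rather than Guerrilla's method. In the first function every worker queues for a shared lock on each item; in the second, each job works on its own data and the results are merged once at the end. All names here are hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def sum_contended(chunks, worker_count=4):
    """Every addition takes a shared lock, so workers keep waiting on each other."""
    total = 0
    lock = threading.Lock()

    def job(chunk):
        nonlocal total
        for value in chunk:
            with lock:  # contention point: one thread at a time
                total += value

    with ThreadPoolExecutor(max_workers=worker_count) as pool:
        list(pool.map(job, chunks))  # consume the iterator to wait for every job
    return total


def sum_batched(chunks, worker_count=4):
    """Each job sums its own chunk; results are merged once, with no shared lock."""
    with ThreadPoolExecutor(max_workers=worker_count) as pool:
        return sum(pool.map(sum, chunks))


chunks = [list(range(i, i + 1000)) for i in range(0, 8000, 1000)]
print(sum_contended(chunks), sum_batched(chunks))
```

Both functions produce the same answer; the difference lies in how often threads have to stop and wait for one another. That is a restructuring of the work itself rather than a low-level CPU tweak.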

In terms of graphics, this is where the enhancements Guerrilla has made over Killzone 3 are perhaps best appreciated. For in-game characters, the PS3 game used three different LOD models (more polygons used the closer you are to the character in question), up to 10,000 polygons and 1024x1024 textures. Things have changed significantly for PlayStation 4 with seven LOD models and a maximum of 40,000 polygons and up to six 2048x2048 textures.
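As a simple illustration of how a LOD scheme works, the sketch below picks one of seven models based on distance from the camera. The distance thresholds are invented for the example; the presentation gives the number of LOD levels but not where the switches happen.

```python
import bisect

# Hypothetical switch distances in metres. Six thresholds give seven LOD
# levels: 0 is the most detailed model, 6 the least detailed.
LOD_DISTANCES = [5.0, 10.0, 20.0, 40.0, 80.0, 160.0]


def select_lod(distance):
    """Return the LOD index (0-6) to draw for a character at this distance."""
    return bisect.bisect_right(LOD_DISTANCES, distance)


for d in (1.0, 12.0, 500.0):
    print(f"{d:6.1f} m -> LOD {select_lod(d)}")
```

More levels mean smaller jumps in detail between neighbouring models, which helps to hide the transition as a character moves towards or away from the camera.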

The geometry pass vacuums up more GPU processing time than any other system, but Guerrilla readily admits that the extra detail only offers up an "incremental quality improvement" over Killzone 3, with the vast bulk of the visual boost coming from the variety of materials employed, the lighting model and all the other engine changes. There's also the sense from the presentation that Guerrilla is still finding its feet in terms of optimisation - specifically with pixel and vertex shaders, where the studio suggests that streamlining could potentially open the door to double the polygon count.

Lighting looks simply phenomenal in Killzone: Shadow Fall (to the point where Guerrilla released an entirely separate presentation on how it works) with a full HDR, linear space system in place that's a clear evolution of the techniques used in Killzone 2 and its sequel. A key new feature is something similar to what we see in Kojima Productions' FOX engine and Unreal Engine 4 - a move to physically based lighting. In the past, in-game objects would have a certain degree of their lighting "baked" into the object itself. Now, the physical properties of the object - its composition, its smoothness/roughness etc - are variables defined by the artist, and the way they are lit depends on the actual light sources in any given scene.
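The principle can be shown with a deliberately simplified sketch: the material carries only surface properties, and the final brightness is computed from whatever lights happen to be in the scene. The shading here is a basic Lambert diffuse term plus a normalised Blinn-Phong highlight, which is a stand-in of our own choosing and not Guerrilla's lighting model. The roughness-to-exponent mapping and all names are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Material:
    albedo: float     # diffuse reflectance, 0..1 (single channel for brevity)
    roughness: float  # 0 = very smooth, 1 = fully rough


@dataclass
class Light:
    direction: tuple  # unit vector from the surface towards the light
    intensity: float


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return v if length == 0.0 else tuple(x / length for x in v)


def shade(material, normal, view, lights):
    """Sum the contribution of every light; nothing is baked into the material."""
    # Assumed mapping: rougher surfaces get a lower exponent and a broader highlight.
    shininess = 2.0 / max(material.roughness ** 2, 1e-4) - 2.0
    total = 0.0
    for light in lights:
        n_dot_l = max(dot(normal, light.direction), 0.0)
        if n_dot_l == 0.0:
            continue  # light is behind the surface
        half = normalize(tuple(l + v for l, v in zip(light.direction, view)))
        n_dot_h = max(dot(normal, half), 0.0)
        diffuse = material.albedo / math.pi
        specular = (shininess + 2.0) / (2.0 * math.pi) * n_dot_h ** shininess
        total += (diffuse + specular) * light.intensity * n_dot_l
    return total


up = (0.0, 0.0, 1.0)
overhead = [Light(direction=up, intensity=1.0)]
polished = Material(albedo=0.5, roughness=0.2)
matte = Material(albedo=0.5, roughness=1.0)
print(shade(polished, up, up, overhead), shade(matte, up, up, overhead))
```

The same two materials will look different again under a different set of lights without the artist touching either asset, which is the practical appeal of the approach.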

It's a massive, fundamental shift in the way that in-game assets are created and required a large degree of training on the part of the Guerrilla staff, with the system gradually evolving to the point where both rendering specialists and artists were happy with the degree of control they had, and the results on-screen. During this evolution all lights in any given scene became "area lights" - able to influence the world around them, and all light sources have actual volume to them too. Everything on-screen has a real-time reflection that considers all the appropriate light sources. A mixture of techniques including ray-casting and image-based lighting produces some exceptional results.

We can only really begin to cover the basics of this presentation (the lighting techniques in themselves are immensely complex) - there's a sense that Guerrilla is striking out into unknown territory to a certain extent and surprising itself with the results from the learning process. The overall takeaway we have here is that next-gen development is very much in the early stages, and as impressive as it is, the Killzone: Shadow Fall demo is still very much work-in-progress stuff and we should expect plenty of improvement for the final game. The team itself is clearly very happy with the ease of development for PlayStation 4, and has identified jobs-based parallelism as the best way to get the most from the multi-core architecture. The GPU is considered to be very fast, but shader optimisation seems to be the key to getting the most out of it.

What is very interesting is that the developer says that the GDDR5 memory really gives the system "its wings", and lauds the immense 176GB/s of bandwidth available. However, it's not a bottomless pit of unlimited throughput - efforts still need to be made to use small pixel formats in order to maximise performance. Bearing in mind that the vast, unified memory pool of high-bandwidth RAM is one of the key advantages PS4 has over PC and the next-gen Xbox, it'll be interesting to see how multi-platform projects cope.
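Some quick arithmetic shows why pixel formats matter even with this much bandwidth. The sketch below divides the quoted 176GB/s peak by a 30fps target and asks how many times a full 1080p surface could be read or written per frame at different pixel sizes. It assumes 1GB is 10^9 bytes and that the theoretical peak is fully achievable, which is rarely the case in practice, and the pixel sizes are illustrative.

```python
PEAK_BANDWIDTH_BYTES_PER_S = 176e9  # quoted peak, assuming 1GB = 10**9 bytes
TARGET_FPS = 30


def bytes_per_frame(bandwidth=PEAK_BANDWIDTH_BYTES_PER_S, fps=TARGET_FPS):
    """Theoretical upper limit on memory traffic within one frame."""
    return bandwidth / fps


def full_screen_passes(bytes_per_pixel, width=1920, height=1080):
    """How many times a full-screen surface could be touched once per frame."""
    return bytes_per_frame() / (width * height * bytes_per_pixel)


print(f"Budget per frame: {bytes_per_frame() / 1e9:.2f} GB")
for bpp in (4, 8, 16):  # illustrative pixel sizes in bytes
    print(f"{bpp:2d} bytes/pixel: {full_screen_passes(bpp):.0f} full-screen reads or writes")
```

Doubling the size of each pixel halves the number of times a surface can be touched in a frame, and a renderer that reads and writes many targets over many passes can eat into that budget quickly.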

Also fascinating is that by its own admission Guerrilla is not using much of the Compute functionality of the PS4 graphics core - in its conclusion to the presentation it says that there's only one Compute job in the demo, and that's used for memory defragmentation. Factoring in how much Sony championed the technology in its PS4 reveal, it's an interesting state of affairs and perhaps demonstrates just how far we have to go in getting the most out of this technology - despite its many similarities with existing PC hardware.