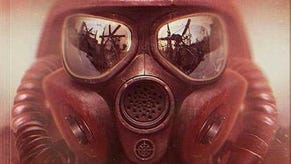

Tech Interview: Metro 2033

Oles Shishkovstov on engine development, platform strengths and 4A's design philosophy.

Last week, Digital Foundry introduced the technology behind 4A Games' new Metro 2033. Featuring a brand-new engine with an eye-opening level of bleeding-edge rendering tech, the game instantly got our attention.

We were also able to interview Oles Shishkovstov, chief technical officer of 4A Games. Many of his comments about the new engine made their way into last Saturday's Digital Foundry feature, but this follow-up piece presents the entire inquisition, since we know you like that.

There's more detail on plenty of the things discussed in our original feature. For example, there's more to the story of the engine's genesis and the key fundamental decisions the 4A team made in developing the new tech. The AI system and the integration of PhysX are also explained in more depth, and you'll get to read Shishkovstov's appraisal of the Xbox 360's Xenon CPU measured against the Nehalem/Core i7 architecture found in the latest PCs.

In short: more details, more insight, more tech discussion. Just the way we like it.

There's no relationship. Back when I was working as lead programmer and technology architect on S.T.A.L.K.E.R., it became apparent that many architectural decisions were great for the time when the engine was designed, but they just didn't scale to the present day.

The major obstacles to the future of the S.T.A.L.K.E.R. engine were its inherent inability to be multi-threaded, the weak and error-prone networking model, and simply awful resource and memory management, which prohibited any kind of streaming or simply keeping the working set small enough for "next-gen" consoles.

Another thing which really worried me was the text-based scripting. Working on S.T.A.L.K.E.R., it became clear that designers/scriptwriters wanted more and more control, and once they got it they were lost: they needed to think like programmers, but they weren't programmers! That contributed a lot to the original delays with S.T.A.L.K.E.R.

So I started a personal project to establish the future architecture and to explore the possibilities of the design. The project evolved quite well and, although it wasn't functional as a game - not even as a demo, it didn't have any rendering engine back then - it provided me with a clear vision of what to do next.

When 4A started as an independent studio, this work became the foundation of the future engine. Because of the tight timescale, we opted to use a lot of middleware to get things going quickly. We selected PhysX for physics, PathEngine for AI navigation, Lua as the primary development file format (not as a scripting engine) for easy SVN merging, RakNet for the physical network layer, FaceFX for facial animation, Ogg Vorbis as the sound format, and many other small things like compression libraries.

The rendering was hooked up in about three weeks - it's easy to do when you work with deferred shading - although it was far from being optimal or feature-rich.

When the philosophies of the engines are so radically different, it is nearly impossible to share the code. For example, we don't use basic things such as the C++ Standard Template Library, while in S.T.A.L.K.E.R. every second line of code calls some kind of STL method. Even the gameplay code in S.T.A.L.K.E.R. mostly used an update/poll model, while we use a more signal-based model.
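To make the poll-versus-signal contrast concrete, here is a minimal sketch of the signal style. The `Signal`, `Door` and `Alarm` types are hypothetical illustrations, not 4A's code, and the sketch uses `std::function` and `std::vector` for brevity even though, as noted above, 4A's engine avoids the STL.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical minimal signal: a list of callbacks fired when an event happens.
template <typename... Args>
class Signal {
public:
    using Slot = std::function<void(Args...)>;
    void connect(Slot slot) { slots_.push_back(std::move(slot)); }
    void emit(Args... args) const {
        for (const auto& slot : slots_) slot(args...);
    }

private:
    std::vector<Slot> slots_;
};

// Update/poll style would have an Alarm::update() ask the door "are you open?"
// every frame. Signal style: the door announces the change once, and only
// connected listeners do any work.
struct Door {
    Signal<int> opened;  // payload: id of the actor that opened the door
    void open(int actorId) { opened.emit(actorId); }
};

struct Alarm {
    int triggeredBy = -1;
    int wakeups = 0;
    void onDoorOpened(int actorId) {
        triggeredBy = actorId;
        ++wakeups;
    }
};
```

The design trade-off is that idle listeners cost nothing per frame, at the price of having to connect (and, in a real engine, disconnect) handlers explicitly.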

So, the final answer is "no", we do not have shared code with X-Ray, nor would it be possible to do so.

That would be extremely difficult. A straight port would not fit into memory even without all the textures, all the sounds and all the geometry. And even then it would run at around one to three frames per second. But that doesn't matter, because without textures and geometry you cannot see those frames! That's my personal opinion, but it would probably be wise for GSC to wait for another generation of consoles.

The primary focuses are the multi-threading model, memory and resource management and, finally, networking.

The most interesting, non-traditional thing about our implementation of multi-threading is that we don't have dedicated threads for processing specific in-game tasks, with the exception of the PhysX thread.

All our threads are basic workers. We use a task model, but without any pre-conditioning or pre/post-synchronisation. Basically, all tasks can execute in parallel without any locks from the moment they are spawned; there are no inter-dependencies between tasks. It looks like a tree of tasks, with the more heavyweight ones started at the beginning of the frame so that the system balances itself.
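The model described above can be sketched as a small pool of generic worker threads. Everything here is an assumption for illustration: `TaskSystem`, `spawn`, `runFrame` and the integer cost estimate used to start heavyweight tasks first are hypothetical names, and the shared queue is guarded by a mutex for brevity, whereas the tasks themselves never lock or wait on each other.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical frame-based task system: every thread is a plain worker, tasks
// have no dependencies, and a task may spawn children, forming a tree.
class TaskSystem {
public:
    using Task = std::function<void(TaskSystem&)>;

    explicit TaskSystem(unsigned workers) {
        for (unsigned i = 0; i < workers; ++i)
            threads_.emplace_back([this] { workerLoop(); });
    }
    ~TaskSystem() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            quit_ = true;
        }
        wake_.notify_all();
        for (auto& t : threads_) t.join();
    }

    // Count the task before queueing it, so a parent that spawns children
    // keeps the frame "open" until the children are done too.
    void spawn(Task task) {
        pending_.fetch_add(1, std::memory_order_relaxed);
        {
            std::lock_guard<std::mutex> lock(mutex_);
            queue_.push_back(std::move(task));
        }
        wake_.notify_one();
    }

    // Roots are queued heaviest-first (by the caller's cost estimate) so long
    // jobs start early and small tasks fill the gaps later in the frame.
    void runFrame(std::vector<std::pair<int, Task>> roots) {
        std::sort(roots.begin(), roots.end(),
                  [](const auto& a, const auto& b) { return a.first > b.first; });
        for (auto& r : roots) spawn(std::move(r.second));
        std::unique_lock<std::mutex> lock(mutex_);
        done_.wait(lock, [this] { return pending_.load() == 0; });
    }

private:
    void workerLoop() {
        for (;;) {
            Task task;
            {
                std::unique_lock<std::mutex> lock(mutex_);
                wake_.wait(lock, [this] { return quit_ || !queue_.empty(); });
                if (quit_ && queue_.empty()) return;
                task = std::move(queue_.front());
                queue_.pop_front();
            }
            task(*this);  // may call spawn() to add child tasks
            if (pending_.fetch_sub(1, std::memory_order_acq_rel) == 1) {
                std::lock_guard<std::mutex> lock(mutex_);
                done_.notify_all();
            }
        }
    }

    std::vector<std::thread> threads_;
    std::deque<Task> queue_;
    std::mutex mutex_;
    std::condition_variable wake_, done_;
    std::atomic<int> pending_{0};
    bool quit_ = false;
};
```

Because `spawn` increments the pending counter before the parent task finishes, the frame cannot be declared complete while child tasks are still outstanding.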

There are some sync-points between sub-systems. For example, between PhysX and the game, or between the game and renderer. But they can be crossed over by other tasks, so no thread is idle. The last time I measured the statistics, we were running approximately 3,000 tasks per 30ms frame on Xbox 360 for CPU-intensive scenes with all HW threads at 100 per cent load.
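A rough back-of-the-envelope reading of that figure, assuming the Xbox 360's six hardware threads (three cores, two threads each) are all fully loaded and the work is spread evenly, gives the average CPU time per task:

$$
\frac{3000\ \text{tasks}}{30\ \text{ms}} = 100\ \text{tasks/ms},
\qquad
\frac{6\ \text{threads} \times 30\ \text{ms}}{3000\ \text{tasks}} = 0.06\ \text{ms} = 60\ \mu\text{s per task}
$$

On average, then, the tasks are quite fine-grained, which is what lets a self-balancing scheduler keep every hardware thread busy.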

The PS3 is not that different by the way. We use "fibres" to "emulate" a six-thread CPU, and then each task can spawn a SPURS (SPU) job and switch to another fibre. This is a kind of PPU off-loading, which is transparent to the system. The end result of this beautiful, albeit somewhat restricting, model is that we have perfectly linear scaling up to the hardware deficiency limits.

As for memory and resource management, we don't use plain old C++ pointers in most of the code; instead we use reference-counted strong and weak pointers. With a few atomic operations and memory barriers here and there, they become a very robust basic tool for multi-threaded programming.

That sounds a bit inefficient, but it isn't. We've measured at most a 2.5 times difference in hand-crafted scenarios on the PS3's PPU and the 360's CPU. If all that "inefficiency" costs even 0.1 per cent of performance across the whole game, I'll owe you a beer!
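A minimal sketch of strong and weak reference counting with atomics, assuming a separate control block. The `StrongRef`, `WeakRef` and `RefBlock` names are hypothetical, since 4A has not published its implementation; `std::shared_ptr`/`std::weak_ptr` provide the same idea in the standard library. The key multi-threaded detail is that a weak reference upgrades to a strong one with a compare-and-swap loop, so it can never resurrect an object whose strong count has already reached zero.

```cpp
#include <atomic>
#include <cassert>
#include <utility>

// Hypothetical control block: the strong count owns the object, the weak count
// owns the block itself, and all strong refs together hold one weak ref.
template <typename T>
struct RefBlock {
    std::atomic<long> strong{1};
    std::atomic<long> weak{1};
    T* object;
    explicit RefBlock(T* p) : object(p) {}
};

template <typename T> class WeakRef;

template <typename T>
class StrongRef {
public:
    explicit StrongRef(T* p) : block_(new RefBlock<T>(p)) {}
    StrongRef(const StrongRef& o) : block_(o.block_) {
        if (block_) block_->strong.fetch_add(1, std::memory_order_relaxed);
    }
    StrongRef(StrongRef&& o) noexcept : block_(std::exchange(o.block_, nullptr)) {}
    StrongRef& operator=(const StrongRef&) = delete;  // kept out for brevity
    StrongRef& operator=(StrongRef&&) = delete;
    ~StrongRef() { release(); }

    T* get() const { return block_ ? block_->object : nullptr; }
    explicit operator bool() const { return get() != nullptr; }

private:
    friend class WeakRef<T>;
    // Adopts a strong count that the caller has already incremented (or null).
    explicit StrongRef(RefBlock<T>* b) : block_(b) {}

    void release() {
        if (!block_) return;
        // acq_rel: the last owner must observe all writes made through other refs.
        if (block_->strong.fetch_sub(1, std::memory_order_acq_rel) == 1) {
            delete block_->object;
            if (block_->weak.fetch_sub(1, std::memory_order_acq_rel) == 1) delete block_;
        }
        block_ = nullptr;
    }

    RefBlock<T>* block_;
};

template <typename T>
class WeakRef {
public:
    explicit WeakRef(const StrongRef<T>& s) : block_(s.block_) {
        if (block_) block_->weak.fetch_add(1, std::memory_order_relaxed);
    }
    WeakRef(const WeakRef&) = delete;
    WeakRef& operator=(const WeakRef&) = delete;
    ~WeakRef() {
        if (block_ && block_->weak.fetch_sub(1, std::memory_order_acq_rel) == 1) delete block_;
    }

    // Upgrade only while the object is alive: never bump a count that is zero.
    StrongRef<T> lock() const {
        if (block_) {
            long n = block_->strong.load(std::memory_order_relaxed);
            while (n != 0) {
                if (block_->strong.compare_exchange_weak(n, n + 1, std::memory_order_acq_rel,
                                                         std::memory_order_relaxed))
                    return StrongRef<T>(block_);
            }
        }
        return StrongRef<T>(static_cast<RefBlock<T>*>(nullptr));
    }

private:
    RefBlock<T>* block_;
};
```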

Then comes memory management. You know, it's always custom-made - lots of different pools (to either limit the subsystems or reduce lock contention), lots of different allocation strategies for different kinds of data - that's boring. The major memory consumers get the most attention, though. Geometric data is garbage-collected with relocation, for example, but the more important things are the raw stats.
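The pools mentioned above can take many forms; a minimal sketch of one common kind, a fixed-size block pool with an intrusive free list, shows how a per-subsystem pool enforces a hard memory budget. `FixedPool` is a hypothetical name, not 4A's allocator, and this sketch is single-threaded; giving each subsystem (or thread) its own pool is one way to avoid lock contention altogether.

```cpp
#include <cassert>
#include <cstddef>
#include <new>
#include <vector>

// Hypothetical fixed-size block pool: one per subsystem caps that subsystem's
// memory and keeps its allocations away from a shared, contended heap.
class FixedPool {
public:
    FixedPool(std::size_t blockSize, std::size_t blockCount)
        : blockSize_(roundUp(blockSize)), storage_(blockSize_ * blockCount) {
        // Thread every block onto an intrusive free list.
        for (std::size_t i = 0; i < blockCount; ++i) push(&storage_[i * blockSize_]);
    }

    // Returns nullptr when the pool is exhausted: the budget is a hard cap.
    void* allocate() {
        if (head_ == nullptr) return nullptr;
        Node* node = head_;
        head_ = node->next;
        return node;
    }

    void deallocate(void* p) { push(static_cast<unsigned char*>(p)); }

private:
    struct Node { Node* next; };

    // Blocks must be big enough to hold a free-list link and stay aligned.
    static std::size_t roundUp(std::size_t n) {
        const std::size_t align = alignof(std::max_align_t);
        if (n < sizeof(Node)) n = sizeof(Node);
        return (n + align - 1) / align * align;
    }

    void push(unsigned char* p) { head_ = new (p) Node{head_}; }

    std::size_t blockSize_;
    std::vector<unsigned char> storage_;
    Node* head_ = nullptr;
};
```

Allocation and release are both O(1) pointer swaps, and a freed block is the next one handed out.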

On the shipping 360 version we have around 1GB of Ogg-compressed sound and almost 2GB of losslessly compressed DXT textures. That clearly doesn't fit into console memory. We went down the route of streaming these resources from DVD, to the extreme that we don't preload anything, not even basic sounds like footsteps or weapon sounds. We've done a lot of work to compensate for DVD seek latency, so the player should never notice it. That was the hard part.
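For scale, roughly 3GB of sound and texture data has to flow through the Xbox 360's 512MB of unified memory. The interview does not say how 4A hides DVD seek latency; one standard technique, shown here purely as an assumed example, is to batch pending reads and service them in elevator (SCAN) order by disc position, so the drive head sweeps in one direction instead of jumping back and forth. `ReadRequest` and `scanOrder` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <iterator>
#include <vector>

// Hypothetical streaming request: where the data sits on the disc.
struct ReadRequest {
    std::uint64_t discOffset;
    int id;
};

// Elevator (SCAN) ordering: first everything at or ahead of the read head in
// ascending offset order, then the requests behind it on the way back.
std::vector<ReadRequest> scanOrder(std::vector<ReadRequest> pending, std::uint64_t head) {
    std::sort(pending.begin(), pending.end(),
              [](const ReadRequest& a, const ReadRequest& b) { return a.discOffset < b.discOffset; });
    auto split = std::lower_bound(
        pending.begin(), pending.end(), head,
        [](const ReadRequest& r, std::uint64_t h) { return r.discOffset < h; });
    std::vector<ReadRequest> order(split, pending.end());               // ahead, ascending
    order.insert(order.end(), std::make_reverse_iterator(split), pending.rend());  // behind, descending
    return order;
}
```

A real streamer would also weigh request priority (a footstep sound needed this frame beats a distant texture mip), which this sketch leaves out.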

As for the networking, that's a long story, but because Metro 2033 is focused on a story-driven single-player experience, I'll omit it here!