On mouse clicks and paperclips: the dark, frustrating pleasures of tedium games

“The aim is to convert all known matter in the universe…”

Since it was released last October, Frank Lantz's AI-centric existential nightmare-turned-browser game Universal Paperclips has been played by about 1.2 million people, its developer says.

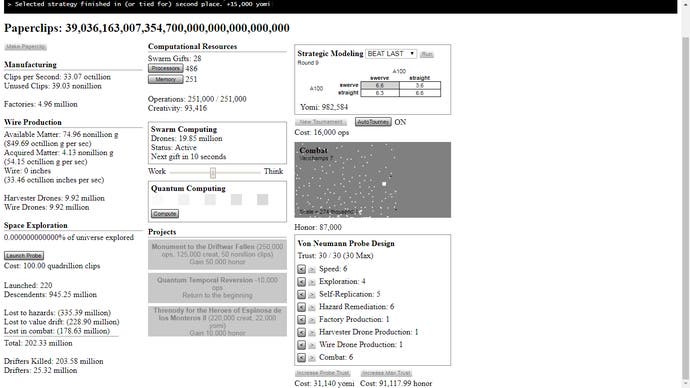

Put another way, for the last five months over a million people have participated in an extensive, repetitive clicking experiment: one in which clicking on a box produces a single paperclip at a time, the aim is to convert all known matter in the universe to paperclips, and the game ends when the player reaches 30.0 septendecillion paperclips, resulting in the total destruction of the universe.

The success of Universal Paperclips as a viral browser game is a surprise, but not because it isn't completely consuming. According to Wired, it was a qualified viral hit with around 450,000 players at launch, most of whom completed the game in its entirety. What's surprising about the success of Universal Paperclips is that, at least on paper, it's pretty much as tedious as games get. Universal Paperclips is a clicker game, part of a genre occasionally known as non-games, in which game mechanics are boiled down to their most basic components: clicking or tapping to fulfill arbitrary goals. Like Cow Clicker, an iOS app by Lantz's friend and colleague Ian Bogost, Universal Paperclips exists in a kind of limbo between games-as-entertainment and Skinner box experiments.

What does it say about us that we are compelled to keep clicking? I ask Lantz about this.

"There is something very compelling about submerging yourself in a game, about applying every shred of your consciousness to the pursuit of some goal," says Lantz. "I think of it as tuning into the shark-brain, the machine-brain, where, instead of the ambiguous, multi-layered, ephemeral quality of our ordinary lives we get to experience a kind of scary, thrilling, single-mindedness, whether it's making a ball go through a hoop, making geometric shapes fit together, killing demons, or making paperclips."

"Maybe partly because it gives us access to levels of experience that aren't easily accessible in the miasma of the ephemeral ordinary. I mean, I'm a human, I'm not a shark, I'm not a machine. But there are things that sharks can do, things that machines can do, that are extraordinary, and discovering that I have that capacity, and experiencing it first hand, is powerful."

Lantz's game is based on a thought experiment popularised by writer and philosopher Nick Bostrom in his book Superintelligence: Paths, Dangers, Strategies, but initially explored in his 2003 essay Ethical Issues in Advanced Artificial Intelligence. Bostrom's "Paperclip Maximizer" describes an advanced artificial intelligence that is tasked with the manufacturing of paperclips, a seemingly harmless and arbitrary goal. Bostrom then asks whether such a machine, if it wasn't initially programmed to value human life, would eventually turn all matter in the universe - including human beings - into either paperclips or machines which manufacture paperclips.

"Suppose we have an AI whose only goal is to make as many paper clips as possible," Bostrom writes in his 2003 work. "The AI will realize quickly that it would be much better if there were no humans because humans might decide to switch it off. Because if humans do so, there would be fewer paper clips. Also, human bodies contain a lot of atoms that could be made into paper clips. The future that the AI would be trying to gear towards would be one in which there were a lot of paper clips but no humans."

"I really like Bostrom's work," says Lantz. However, when it comes to the potential risks of artificial intelligence - what he refers to as the AI safety debate - Lantz takes a diplomatic position. The debate, he says, is one of probability versus possibility; not science-reality versus science-fiction. "The AI safety debate is really interesting. The thing to remember is that it's not about whether problematic AI is likely but whether it's possible. The safety guys are saying - hey, this could be a problem, this could blow up and be dangerous. On the other side, you have people like Francois Chollet who are saying - No, this is impossible, because of such-and-such a principle which makes it so that this could never happen."

"To my eye," Lantz continues, "the safety guys are taking a modest position. They're saying: 'we don't know, and when you don't know, you should be somewhat careful, take some precautions.' On the other side, the anti-safety guys seem to me to be saying: 'we actually do know and therefore we can say for sure what can and can't happen.'"

Compared to Bostrom's work, which earned the praise of Tesla CEO and AI doomsayer Elon Musk, Lantz's excursion into paperclips isn't so much a treatise on AI ethics. Instead, Lantz offers an even darker subplot for Bostrom's dystopia: if Universal Paperclips is any indication, humanity isn't just going to be outsmarted by a superintelligent AI, it's going to be a willing participant in its own annihilation.

For Lantz, this is a reflection of how we are built: the activation of the brain's pleasure circuits, the flooding of dopamine, and the reward mechanisms that predispose us to enjoy the effects of infinite clicking.

"I don't think it's that different from what a rock climber does, or what a mountain climber does, putting themselves in a position to activate a bunch of circuits that otherwise remain dormant. And I don't think it's that different from what we do when we get intoxicated and dance, letting the rhythm of the music overwhelm us and carry us away."

"Then, in addition, games give you a chance to not just experience this but to reflect on it. To stand back and say - wow, that was weird. Look how easy it was to get me to care about this thing. Look how these overlapping systems pulled me in and hypnotized me. Look how I got carried away. What did that feel like? What does that mean? How are my ordinary goals and behaviors different from that, or similar to that?"

"That's my hope, that my game can do both of those things."