Nvidia announces first Ampere GPU for datacentres

The new Ampere architecture is fascinating - and suggests a big shift for consumer GPUs to come.

Nvidia CEO Jensen Huang unveiled the company's next graphics architecture today, the long-awaited Ampere. The six-part video series, filmed in the CEO's kitchen and available to stream on YouTube, focuses largely on high-performance computing applications, but it does include a number of interesting facts and figures that will be relevant to Nvidia's next-generation consumer graphics cards built on the same architecture.

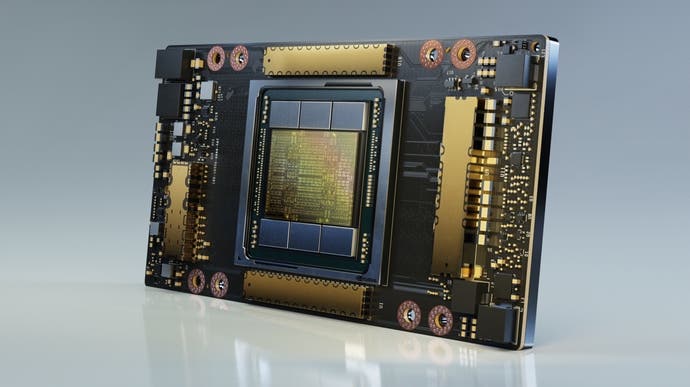

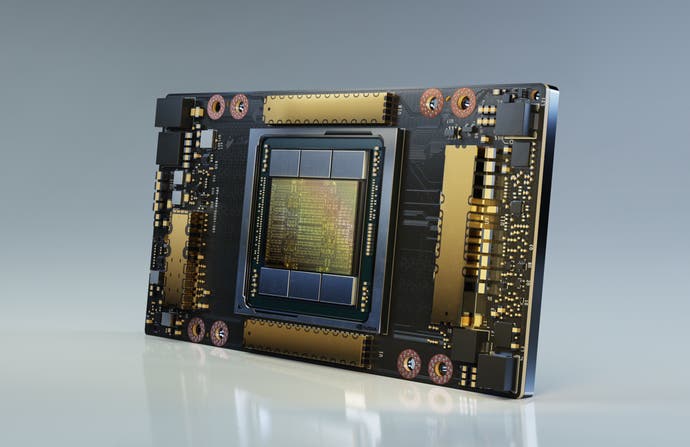

The first implementation of Ampere is called the A100, and according to Nvidia this 7nm GPU packs 54 billion transistors into an 826mm² die. (For context, the 12nm GeForce RTX 2080 Ti contains only around 19 billion transistors in a similar area.) The A100 can achieve a peak of 19.5TF in double-precision floating point calculations on its Tensor cores, which compares pretty favourably to AMD's recently announced Radeon Pro VII, which can only manage around 6.5TF. This tremendous level of compute is backed by 40GB of HBM2 memory with a maximum bandwidth of 1.5TB/s.
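To put those figures side by side, here is a rough back-of-the-envelope sketch in Python. The transistor counts, A100 die size and teraflop numbers are the ones quoted above; the 754mm² die area for the RTX 2080 Ti is an assumption brought in for illustration, as the comparison above only describes it as "a similar area".

```python
# Figures quoted in the article.
A100_TRANSISTORS = 54e9
A100_DIE_MM2 = 826
RTX_2080_TI_TRANSISTORS = 19e9  # "around 19 billion"
A100_FP64_TF = 19.5
RADEON_FP64_TF = 6.5

# Assumption: die area not given in the article ("a similar area").
RTX_2080_TI_DIE_MM2 = 754


def density_m_per_mm2(transistors: float, area_mm2: float) -> float:
    """Return transistor density in millions of transistors per mm^2."""
    return transistors / area_mm2 / 1e6


a100_density = density_m_per_mm2(A100_TRANSISTORS, A100_DIE_MM2)
turing_density = density_m_per_mm2(RTX_2080_TI_TRANSISTORS, RTX_2080_TI_DIE_MM2)

# How much denser the 7nm part is, and how the quoted FP64 figures compare.
density_ratio = a100_density / turing_density
fp64_ratio = A100_FP64_TF / RADEON_FP64_TF

print(f"A100 density:        {a100_density:.1f}M transistors/mm^2")
print(f"RTX 2080 Ti density: {turing_density:.1f}M transistors/mm^2")
print(f"Density ratio:       {density_ratio:.2f}x")
print(f"FP64 ratio:          {fp64_ratio:.1f}x")
```

On these numbers the A100 comes out at a little over 65 million transistors per square millimetre, and its quoted double-precision figure is three times that of AMD's card. Treat the density comparison as approximate, given the rounded transistor count and the assumed die area.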

The A100 is unsurprisingly capable of some pretty impressive performance in its intended use cases of data analytics and scientific computing, but with a reported price of roughly $20,000 for a single GPU it is far from being a consumer product. Still, this could actually be a good deal for scientific endeavours, as Nvidia claim a ballpark six- to seven-fold increase in performance over the earlier Volta architecture for AI tasks like deep learning training and inference, making it a better value proposition that also consumes far less power. You could potentially replace an entire rack of Volta-based servers with a single A100. It's not often that you see such a shift in processing power, and that's a good sign for Nvidia's future consumer efforts, which will no doubt be based on the same Ampere architecture.

As well as benefiting from a more efficient 7nm process, the new card also supports some new features. One of these is a data type called Tensor Float 32 (TF32), which aims to combine the range of 32-bit floating point numbers with the precision of the 16-bit floats commonly used in AI training. The upshot is that, without needing to change any code, programs that use 32-bit floats will instead use the TF32 data type where appropriate and run faster on the third-generation Tensor cores included on the A100. The architecture will also handle so-called "sparse" datasets more efficiently, essentially ignoring unfilled entries to speed up calculations and reduce the amount of memory the datasets take up. For training complex AI models, where you can have datasets with millions of entries, that could translate into massive time savings.
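To make the TF32 trade-off concrete, here is a minimal software sketch in Python of what "32-bit range, 16-bit precision" means in practice. It keeps a 32-bit float's 8 exponent bits but only 10 of its 23 mantissa bits. The function name `round_to_tf32` is hypothetical, and the round-to-nearest-even behaviour is an assumption made for illustration; this is not a description of how the A100's hardware performs the conversion.

```python
import struct


def float_to_bits(x: float) -> int:
    """Reinterpret a value as the bit pattern of a 32-bit float."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_float(bits: int) -> float:
    """Reinterpret a 32-bit pattern as a float."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def round_to_tf32(x: float) -> float:
    """Emulate TF32 precision: FP32's exponent, but a 10-bit mantissa.

    Assumption: rounds to nearest, ties to even. Illustrative only.
    """
    bits = float_to_bits(x)
    if (bits >> 23) & 0xFF == 0xFF:
        return x  # leave infinities and NaNs untouched
    dropped = 13  # 23 FP32 mantissa bits minus the 10 that TF32 keeps
    lsb = (bits >> dropped) & 1
    bits += (1 << (dropped - 1)) - 1 + lsb  # round to nearest even
    bits &= 0xFFFFE000  # clear the 13 dropped mantissa bits
    return bits_to_float(bits)


# Precision: steps of 2**-10 near 1.0 survive, finer detail is rounded away.
print(round_to_tf32(1 + 2**-10))
print(round_to_tf32(1 + 2**-11))

# Range: a value far beyond FP16's maximum of 65504 stays finite.
print(round_to_tf32(1e38))
```

The point of the sketch is the shape of the compromise: very large and very small values remain representable, as with ordinary 32-bit floats, while each individual value carries only about three decimal digits of precision.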

The big question here is what all of this actually means for Nvidia's next consumer graphics cards. Right now, not a lot - there were hopes that Jensen would provide a few hints as to what the presumably titled RTX 30-series would look like, but the pro-focused presentation stuck rigidly to its subject matter. However, there are obvious use cases for many of the innovations mentioned.

The 7nm process and its corresponding transistor density should translate into a big uptick in performance and power efficiency, which will no doubt benefit a theoretical RTX 3080 Ti. The TF32 support, sparse dataset handling and some other Ampere features are chiefly intended for AI tasks, so in principle they could also translate to better results when using other features based on AI, such as deep learning super sampling (DLSS) or hardware-accelerated real-time ray tracing (RTX). The A100 also supports PCIe 4.0, so it's likely that any future Nvidia graphics cards would also make use of this higher-bandwidth interconnect - even though there isn't a noticeable performance advantage to doing so with current-gen cards.

We could go even further into the weeds, but let's leave it there for now. There's clearly a lot to be excited about here, particularly if you work in scientific computing or AI, but there are also some fascinating developments that should influence Nvidia's future consumer graphics cards too. Let's hope that we don't have to wait too much longer to see Ampere GPUs for gaming - after all, next-gen's coming.