Nvidia GeForce GTX 970 Revisited

Does the cut-back spec and RAM set-up actually impact game performance?

When the GTX 970 launched last year, the tech press - Digital Foundry included - were unanimous in their praise for Nvidia's new hardware. Indeed, we called it "the GPU that nukes almost the entire high-end graphics card market from orbit". It beat the R9 290 and R9 290X, forcing AMD to instigate major price-cuts, while still providing the lion's share of the performance of the much more expensive GTX 980. But recent events have taken the sheen off this remarkable product. Nvidia released inaccurate specs to the press, resulting in a class action lawsuit for "deceptive conduct".

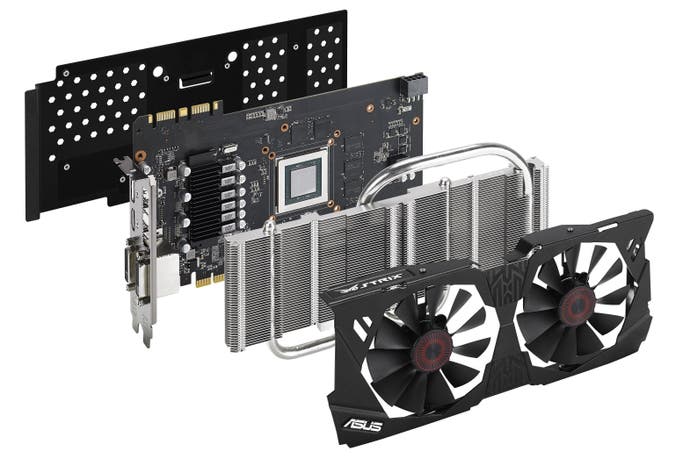

Let's quickly recap what went wrong here. Nvidia's reviewers' guide painted a picture of the GTX 970 as a modestly cut-down version of its more expensive sibling, the GTX 980. It's based on the same architecture, uses the same GM204 silicon, but sees CUDA cores reduced from 2048 to 1664, while clock speeds are pared back from a maximum 1216MHz on the GTX 980 to 1178MHz on the cheaper card. Otherwise, it's the same tech - or so we were told. AnandTech's article goes into more depth on this, but further changes came to light some months later: the GTX 970 had 56 ROPs, not 64, while its L2 cache was 1.75MB, not 2MB.

However, the major issue concerns the onboard memory. The GTX 980 has 4GB of GDDR5 in one physical block, rated for 224GB/s. The GTX 970 has 3.5GB in one partition, operating at 196GB/s, with 512MB of much slower 28GB/s RAM in a second partition. Nvidia's driver automatically prioritises the faster RAM, only encroaching into the slower partition if it absolutely has to. And even then, the firm says that the driver intelligently allocates resources, only shunting low priority data into the slower area of RAM.
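
The bandwidth figures for the two partitions follow from simple arithmetic on the memory bus. The sketch below assumes a detail the numbers imply but this article does not spell out: a 256-bit bus made up of eight 32-bit channels, running GDDR5 at an effective 7Gbps per pin, with seven channels serving the 3.5GB partition and one serving the 512MB partition. The constant and function names are purely illustrative.

```python
# Assumptions (not stated in the article): eight 32-bit memory channels,
# GDDR5 at an effective 7Gbps per pin.
CHANNELS = 8
CHANNEL_WIDTH_BITS = 32
DATA_RATE_GBPS = 7


def bandwidth_gb_s(channels: int) -> float:
    """Peak bandwidth in GB/s for the given number of 32-bit channels."""
    bits_per_second = channels * CHANNEL_WIDTH_BITS * DATA_RATE_GBPS
    return bits_per_second / 8  # 8 bits per byte


full_bus = bandwidth_gb_s(CHANNELS)   # GTX 980: all eight channels in one block
fast_partition = bandwidth_gb_s(7)    # GTX 970: 3.5GB partition
slow_partition = bandwidth_gb_s(1)    # GTX 970: 512MB partition

print(f"Full bus:       {full_bus:.0f}GB/s")
print(f"Fast partition: {fast_partition:.0f}GB/s")
print(f"Slow partition: {slow_partition:.0f}GB/s")
```

Under those assumptions the three values come out at 224GB/s, 196GB/s and 28GB/s, matching the figures quoted above.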

Regardless of the techniques used to allocate memory, what's clear is that, by and large, the driver is successful in managing resources. As far as we are aware, not a single review picked up on any performance issues arising from the partitioned memory. Even the most meticulous form of performance analysis - frame-time readings using FCAT, which we use for all of our tests - showed no problems. There was no reason to question the incorrect specs from Nvidia because the product performed exactly as expected, with no additional micro-stutter or other artefacts. Did we miss something? Anything? Now that we know about the GTX 970's peculiar hardware set-up, is it possible to break it?

Before we went into our tests, we consulted a number of top-tier developers - including a number of architects working on their next next-gen engines, and others who've collaborated with Nvidia in the past. One prominent developer with a strong PC background dismissed the issue, saying that the general consensus among his team was that there was "more smoke than fire". Another contact went into a little more depth:

"The primary consumers of VRAM are usually textures, with draw buffers (vertex, index, uniform buffers etc.) coming close behind," The Astronauts' graphics programmer Leszek Godlewski tells us. "I wouldn't worry about performance degradation, at least in the short term: 3.5GB of VRAM is actually quite a lot of space, and there simply aren't many games out there yet that can place that much data on the GPU. Even when such games arrive, Nvidia engineers will surely go out of their way to adapt their driver (like they always do with high-profile games) - you'd be surprised to see how much latency can be hidden with smart scheduling."

The question of what ends up in the slower partition of RAM is crucial to whether the GTX 970's curious set-up will work over the longer term. While we're accustomed to getting better performance from faster RAM, the fact is that not all GPU use-case scenarios require anything like the massive amounts of bandwidth top-end GPUs offer.

Constant buffers or shaders (read-only, not read-modify-write like framebuffers) will happily live in slower memory, since they are small to read and shared across a wide range of cache-friendly GPU resources. Compute-heavy, but data-light tasks could also sit happily in the slower partition without causing issues. These elements should be discoverable by Nvidia's driver, and could automatically sit in the smaller partition. On the flipside, we wouldn't like to see what would happen should a game using virtual texturing - like Rage or Wolfenstein - split assets between slow and fast RAM. It wouldn't be pretty. As it happens though, Wolfenstein worked just fine maxed out at 1440p during our testing.

Going into our testing, we checked out a lot of the comments posted to various forums, discussing games where GTX 970 apparently has trouble coping. Games like Watch Dogs and Far Cry 4 are often mentioned as exhibiting stutter - in our tests, they do so whether you're running GTX 970, GTX 980 or even the 6GB Titan. To this day, Watch Dogs still hasn't been fixed, while the only path to stutter-free Far Cry 4 gameplay is to disable the highest quality texture mip-maps via the .ini file. Call of Duty Advanced Warfare and Ryse also check out - they do use a lot of VRAM, but mostly as a texture cache. As a result, these games look just as good on a 2GB card as they do on a 3GB card - there's just more background streaming going on when there's less VRAM available.

Actually breaking 3.5GB of RAM on most current titles is rather challenging in itself. Doing so involves using multi-sampling anti-aliasing, downsampling from higher resolutions or even both. On advanced rendering engines, both are a surefire way of bringing your GPU to its knees. Traditional MSAA is sometimes shoe-horned into recent titles, but even 2x MSAA can see a good 20-30 per cent hit to frame-rates - modern game engines based on deferred shading aren't really compatible with MSAA, to the point where many games now don't support it at all while others struggle. Take Far Cry 4, for example. During our Face-Off, we ramped up MSAA in order to show the PC version at its best. What we discovered was that foliage aliasing was far worse than the console versions (a state of affairs that persisted using Nvidia's proprietary TXAA) and the best results actually came from post-process SMAA, which barely impacts frame-rate at all - unlike the multi-sampling alternatives.

Looking to another title supporting MSAA - Assassin's Creed Unity - the table below illustrates starkly why multi-sampling is on the way out in favour of post-process anti-aliasing alternatives. Here, we're using GTX Titan to measure memory consumption and performance, the idea being to measure VRAM utilisation in an environment where GPU memory is effectively limitless - only it isn't. Even at 1080p, ACU hits 4.6GB of memory utilisation at 8x MSAA, while the same settings at 1440p actually see the Titan's prodigious VRAM allocation totally tapped out. The performance figures speak for themselves - at 1440p, only post-process anti-aliasing provides playable frame-rates, but even then, performance can drop as low as 20fps in our benchmark sample. In contrast, a recent presentation on Far Cry 4's excellent HRAA technique - which combines a number of AA techniques including SMAA and temporal super-sampling - provides stunning results with just 1.65ms of total rendering time at 1080p.

| AC Unity/Ultra High/GTX Titan | FXAA | 2x MSAA | 4x MSAA | 8x MSAA |
|---|---|---|---|---|
| 1080p: VRAM Utilisation | 3517MB | 3691MB | 4065MB | 4660MB |
| 1080p Min FPS | 28.0 | 24.7 | 20.0 | 12.9 |
| 1080p Avg FPS | 46.1 | 40.2 | 33.6 | 21.2 |
| 1440p: VRAM Utilisation | 3977MB | 4343MB | 4929MB | 6069MB |
| 1440p Min FPS | 20.0 | 16.0 | 12.9 | 7.5 |
| 1440p Avg FPS | 30.3 | 25.6 | 21.5 | 13.0 |
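
For readers who want to work through the table themselves, the sketch below computes two things from the Titan figures: the average frame-rate cost of each MSAA level relative to FXAA, and which settings would overflow a given VRAM budget. The budgets of 3584MB (3.5GB) and 4096MB (4GB) assume the table's megabytes are binary, and the helper names are our own for illustration. Bear in mind these are Titan measurements, so they only hint at what a 4GB card would actually allocate.

```python
# Average frame-rates and VRAM utilisation copied from the table above.
AVG_FPS = {
    "1080p": {"FXAA": 46.1, "2x MSAA": 40.2, "4x MSAA": 33.6, "8x MSAA": 21.2},
    "1440p": {"FXAA": 30.3, "2x MSAA": 25.6, "4x MSAA": 21.5, "8x MSAA": 13.0},
}
VRAM_MB = {
    "1080p": {"FXAA": 3517, "2x MSAA": 3691, "4x MSAA": 4065, "8x MSAA": 4660},
    "1440p": {"FXAA": 3977, "2x MSAA": 4343, "4x MSAA": 4929, "8x MSAA": 6069},
}

# Assumed budgets: binary megabytes for 3.5GB and 4GB.
FAST_PARTITION_MB = 3584
CARD_TOTAL_MB = 4096


def msaa_cost_percent(resolution: str, mode: str) -> float:
    """Average frame-rate lost versus FXAA at the same resolution, in per cent."""
    baseline = AVG_FPS[resolution]["FXAA"]
    return round(100 * (baseline - AVG_FPS[resolution][mode]) / baseline, 1)


def over_budget(resolution: str, budget_mb: int) -> list[str]:
    """AA modes whose Titan-measured VRAM use exceeds the given budget."""
    return [mode for mode, used in VRAM_MB[resolution].items() if used > budget_mb]


for res in AVG_FPS:
    for mode in ("2x MSAA", "4x MSAA", "8x MSAA"):
        print(f"{res} {mode}: {msaa_cost_percent(res, mode)}% slower than FXAA")
    print(f"{res} over 3.5GB: {over_budget(res, FAST_PARTITION_MB)}")
    print(f"{res} over 4GB:   {over_budget(res, CARD_TOTAL_MB)}")
```

On the Titan's numbers, every 1440p setting exceeds 3.5GB, and every MSAA setting at 1440p exceeds 4GB.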

To actually get game-breaking stutter that does show a clear difference between the GTX 970 and the higher-end GTX 980 required extraordinary measures on our part. We ran two cards in SLI - in order to remove the compute bottleneck as much as possible - then we ran Assassin's Creed Unity on ultra high settings at 1440p, with 4x MSAA. As you can see in the video at the top of this page, this produces very noticeable stutter that isn't as pronounced on the GTX 980. But we're really pushing matters here, effectively hobbling our frame-rate for a relatively small image quality boost. Post-process FXAA gives you something close to a locked 1440p60 presentation on this game in high-end SLI configurations - and it looks sensational.

The testing also revealed much lower memory utilisation than the Titan, suggesting that the game's resource management system adjusts what assets are loaded into memory according to the amount of VRAM you have. Based on the Titan figures, 2x MSAA should have maxed out both GTX 970 and GTX 980 VRAM, yet curiously it didn't. Only pushing on to 4x MSAA caused problems.

Finding intrusive stutter elsewhere was equally difficult, but we managed it - albeit with extreme settings that neither we nor the developer of the game in question would recommend. Running Shadow of Mordor at ultra settings at 1440p, while downscaling from an internal 4K resolution with ultra textures engaged, showed a clear difference between the GTX 970 and the GTX 980 - something which must be caused by the different memory set-ups. To be honest, this produced a sub-optimal experience on both cards, but there were areas that saw noticeable stutter on the 970 that were far less of an issue on the 980. But the fact is that the developer doesn't recommend ultra textures on anything other than a 6GB card - at 1080p no less. Dropping down to the recommended high level textures eliminates the stutter and produces a decent experience.

In conclusion, we went out of our way to break the GTX 970 and couldn't do so in a single card configuration without hitting compute or bandwidth limitations that hobble gaming performance to unplayable levels. We didn't notice any stutter in any of our more reasonable gaming tests that we didn't also see on the GTX 980, though any artefacts of this kind may not be quite as noticeable on the higher-end card - simply because it's faster. In short, we stand by our original review, and believe that the GTX 970 remains the best buy in the £250 category - in the here and now, at least. The only question is whether games will come along that break the 3.5GB barrier - and then to what extent Nvidia's drivers hold up in ensuring the slower VRAM partition is used effectively. Certainly, Nvidia's drivers team is a force to be reckoned with. One contact tells us that their game optimisation efforts include swapping out computationally expensive shaders with hand-written replacements, boosting performance at the expense of the ever-increasing driver download size. When there's that level of effort put into the drivers, it's not an amazing stretch of the imagination to see that major titles at least get the attention they deserve on GTX 970.

The future: how much VRAM do you need?

Nvidia has used partitioned VRAM before on a number of graphics cards, going all the way back to the GTX 550 Ti, but there's never been quite so much concern about the set-up in the enthusiast community as there is now with the GTX 970. Part of this is because of the way in which the partitioned RAM was discovered, and the lack of up-front disclosure from Nvidia. But perhaps more important is the full impact that the unified memory of the consoles will have on PC games development, where memory is still split into discrete system and video RAM pools. Just how much memory is required to fully future-proof a GPU purchase - and how fast should it be?

The future of graphics hardware is leaning towards the notion of removing memory bandwidth as a bottleneck by using stacked memory modules, but one highly respected developer can see things moving in another direction based on the way games are now made.

"I can totally imagine a GPU with 1GB of ultra-fast DDR6 and 10GB of 'slow' DDR3," he says. "Most rendering operations are actually heavily cache dependent, and due to that, most top-tier developers nowadays try to optimise for cache access patterns... with correct access patterns, correct data preloading and swapping, you can likely stay in your L1/L2 cache all the time."

While the unified RAM set-up of the current-gen consoles could cause headaches for PC gamers, bandwidth limitations in their APUs are necessitating a drive for optimisation that keeps critical code running within memory directly attached to the GPU itself, making the need for masses of high-speed RAM less important. And that's part of the reason why the Nvidia Maxwell architecture that powers GTX 970 performs so well - it's built around a much larger L2 cache partition than its predecessor.

But looking towards the future, the truth is that we just can't be entirely sure how much video RAM we'll need on a GPU to sustain us through the current console generation, while maintaining support for all the bonus PC goodies like higher resolution textures, enhanced effects and support for higher resolutions. What has become clear is that 2GB cards are the bare minimum for 1080p gaming with equivalent quality to the eighth gen consoles, while 3GB is recommended. Today's high-end GPUs seem to cope easily enough for now, but the game engines of the future could see the requirement increase beyond the 4GB seen in today's top-tier cards. As for the GTX 970, could there come a time when 3.5GB of fast RAM isn't enough? The truth is, we don't know - but the more the current-gen consoles are pushed, the more critical the amount of memory available on your graphics card becomes.

"The harder we push the hardware and the higher quality and the higher res the assets, the more memory we'll need and the faster we'll want it to be," a well-placed source in the development community tells us. "Our games currently in development are hitting memory limits on the consoles left, right and centre now - so memory optimisation is on my list pretty much constantly."