Digital Foundry vs extreme frame-rate gaming

PC gameplay at 144fps - what's it like and what kit do you need to run the latest games?

One of the most compelling aspects of PC gaming is its sheer scalability: the idea of playing games to your own specific tastes, using the hardware you've chosen. The quality of a given PC experience is often defined to a certain extent by the pixel-count of the display, but refresh rate is clearly important too. Most displays run at 60Hz, meaning that 60fps is the limit for consistent, tear-free gameplay. However, a growing number of 120Hz and 144Hz screens are arriving, accompanied by exciting technologies like Nvidia's G-Sync and AMD's FreeSync. The question is: just how much of an improvement is an extreme frame-rate experience, and what kit do you need to achieve it?

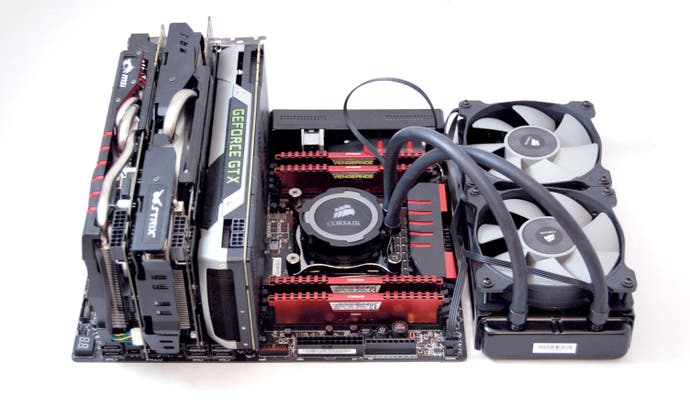

Our objective was simple. We wanted to play the latest games at 144fps on max settings or as close to it as possible on a 2560x1440 display. To ensure that we'd be getting the best possible results, we put together the most powerful PC we could muster. MSI supplied us with its rather splendid Gaming 9 AC X99 motherboard (more on that in the sidebar), while we still retained the 16GB of 2666MHz DDR4 RAM Corsair sent us for our Core i7 5960X review. That remarkable chip - eight cores, 16 threads, overclocked to 4.4GHz - would be the brains of our system, offering the raw computational horsepower of two mainstream Core i7 quad-core processors on one piece of silicon.

All of which just leaves us with the question of which graphics cards to use. Asus helpfully supplied us with its excellent Strix GTX 980 to help us investigate the GTX 970 memory set-up last month, while MSI's remarkable GS30 gaming laptop with Gaming Dock (with another GTX 980 mounted inside) gave us a matching GPU. On top of that we retained the standard reference card from Nvidia, giving us the potential for a three-way GPU set-up.

So the opportunities here are enticing - not only do we have the horsepower to hit the limits of our display and to experience 144fps gameplay, but we can also evaluate the gains brought by extreme PC set-ups, almost on a sliding scale. Just how effective is running two or three graphics cards in parallel?

Well, to begin with, let's talk about how 144Hz gameplay actually feels - what is the actual prize on offer for all of this effort and the potential expense? On our extreme set-up, DICE's Battlefield 4 runs at 2560x1440 at a locked 144fps, with settings ramped all the way to the max - with the exception of anti-aliasing, where we turned off MSAA. This changed a variable 120-144fps performance read-out into the absolute lock we were looking for.

The result? Well, the boost to performance is there - obviously - but on visual inspection, there isn't the night and day difference you see when jumping from the usual console-standard 30fps to the preferred 60fps format. The biggest boost to visual quality comes from the lower frame-times, with LCD motion blur nullified by the increased refresh. The big gain in terms of actual gameplay comes from ditching muggy joypad controls and going back to the mouse - here, the one-to-one feeling you have with the controls marries up beautifully with the faster refresh, producing an immensely satisfying level of control. It's at this point you can see why FPS players in particular favour higher refresh monitors: the difference is palpable. In fact, if you own a high frequency display, just using the mouse on the desktop feels different... better.

Moving on to Crystal Dynamics' Tomb Raider, we found that third person titles benefit a little less from that enhanced interface. It's a game designed for the joypad really, and the only real gain comes from the visual benefits of the higher refresh. After 30 minutes of play, we swapped back to 60Hz and continued playing. At that point, we'd grown accustomed to the smoother refresh and while the overall experience was barely diluted by dropping down to 60fps, the faster frame-rate had settled in with us, and we could feel the difference. Our set-up locked at 144fps for gameplay, though there were drops to 120fps during some cut-scenes. It was hard to tell the difference here - but it was noticeable in Battlefield 4, where sharp mouse movements produced discernible judder, until we dialled down anti-aliasing and got the 144fps lock we were looking for.

We continued testing some of the most demanding games in our library, with mixed results. Crysis 3's most processing-intensive areas saw jarring dips down to 100fps at maxed-out settings, and we needed to drop down to the medium quality preset to smooth things out at 120-144fps. Frame-rate perception is a very personal thing - the point where the drops become noticeable will change for each player, but generally we prefer consistency and on more demanding games, we'd prefer a solid lock at a lower frame-rate: 100fps territory still has much of the advantage over the standard 60fps, and while judder is still noticeable in places (camera pans, for example), the boost to response is still there. Looking at other demanding games, Assassin's Creed Unity operated in a 70-100fps window depending on scene complexity - and would go no further. Ryse: Son of Rome lurked in the 90-100fps area, while moving down to the normal quality preset got us close to our locked 144fps target. Returning to standard 60Hz territory was a little disconcerting after a while: say what you like about Ryse's poor gameplay - but all aspects of the visual presentation are first-class. We were struck by the fluidity of the animation in particular at the higher frame-rate, but the occasional stutter was quite off-putting - there have been complaints about micro-stutter on the X99 platform, which apparently are not an issue on the mainstream Z97 used by the likes of the Core i7 4790K.

| 1920x1080 (Lowest/Average FPS) | GTX 980 x1 | GTX 980 SLI x2 | GTX 980 SLI x3 |
|---|---|---|---|
| Battlefield 4, Ultra, 4x MSAA | 69.0/87.7 | 120.0/144.4 | 171.0/220.0 |
| Crysis 3, Very High, SMAA | 59.0/82.0 | 83.0/113.9 | 83.0/158.9 |
| Assassin's Creed Unity, Ultra High, FXAA | 46.0/58.0 | 78.0/96.6 | 88.0/128.1 |
| Far Cry 4, Ultra, SMAA | 68.0/83.6 | 61.0/93.2 | 60.0/91.4 |
| COD Advanced Warfare, Extra, FSMAA | 115.0/139.9 | 86.0/118.0 | 81.0/113.0 |
| Ryse: Son of Rome, High, SMAA | 61.0/75.2 | 84.0/125.9 | 88.0/144.3 |
| Shadow of Mordor, Ultra, High Textures, FXAA | 69.0/91.7 | 107.0/141.9 | 109.0/154.0 |
| Tomb Raider, Ultimate, FXAA | 90.0/117.3 | 158.6/204.7 | 222.9/292.0 |
| Metro Last Light Redux, Max, Post-AA | 57.0/89.7 | 92.0/142.9 | 83.0/152.4 |

And that was the major problem we encountered during our testing: running a game at 60fps involves a lot of work on the part of the CPU - it has to simulate the game world and prepare the instructions for the GPU, which in turn has to render the scene. All of this needs to occur in a tight 16.67ms window. At 120fps, that window drops to just 8.33ms, while 144Hz offers up a minuscule 6.94ms processing budget. As you'll see in our benchmarks, we can attain some remarkable scaling with SLI in test conditions, but the lowest reported frame-rates do not rise in line with the averages - meaning that we're hitting either driver or CPU bottlenecks (and probably both). Sometimes moving from 2x to 3x SLI can actually see minimum frame-rates drop rather than rise - perhaps because of the increased CPU burden in running an extra GPU.
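Those per-frame budgets fall straight out of dividing one second by the target frame-rate. As a minimal sketch of the arithmetic (the function name `frame_budget_ms` is our own, purely for illustration):

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to simulate and render one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# The three refresh targets discussed in the article.
for target in (60, 120, 144):
    print(f"{target}fps -> {frame_budget_ms(target):.2f}ms per frame")
```

Running it reproduces the 16.67ms, 8.33ms and 6.94ms figures quoted above.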

When gaming at extreme frame-rates, it's not really the average, or even the highest possible performance levels that are important - it's the sudden drops that you feel the most. We've got some benchmarks on the page here where the difference between minimum and average frame-rates is substantial, but even this doesn't truly highlight the issue. In any benchmark, you're looking at a single moment in time. During actual gameplay, the complexity of the work carried out by your system changes all the time, and the hits to frame-rate can be more pronounced.
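To illustrate why an average can hide the drops you actually feel, here is a rough sketch using a made-up frame-time trace. The numbers are hypothetical and are not taken from our benchmark runs, and the helper names are our own.

```python
def average_fps(frame_times_ms):
    """Average frame-rate over a trace: frames rendered per second elapsed."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def lowest_fps(frame_times_ms):
    """Frame-rate implied by the single slowest frame in the trace."""
    return 1000.0 / max(frame_times_ms)


# Hypothetical trace: 98 frames at 7ms (roughly 144fps territory)
# plus two long frames of the kind that register as stutter.
trace = [7.0] * 98 + [25.0, 30.0]

print(f"average: {average_fps(trace):.1f}fps")
print(f"lowest:  {lowest_fps(trace):.1f}fps")
```

In this invented example the average stays at about 135fps even though the slowest frame corresponds to roughly 33fps, which is the sort of gap a single headline number cannot convey.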

Now, in theory, you might wonder why this is an issue when we are using the fastest consumer-level CPU on the market - Intel's Core i7 5960X, a gargantuan leap in processing power compared to the mainstream i5s and i7s. There are several factors in play here: firstly, not all game engines scale across all available cores. Secondly, the nature of DirectX 11 is that it relies somewhat on a single, very fast thread feeding jobs to other threads. That in itself presents bottlenecks and it's the biggest reason why a dual-core Intel i3 can outperform an eight-core AMD processor on the same game. Bottom line: doubling available CPU power doesn't mean that you can remove the chip as a potential bottleneck. Indeed, the way things are set up right now, the chances are that much of the chip remains dormant - even on games that do scale across multiple cores. Remarkably, even with the 4.4GHz overclock in place, some titles actually seem to run a touch slower than on the quad-core i7 4790K.

| 2560x1440 (Lowest/Average FPS) | GTX 980 x1 | GTX 980 SLI x2 | GTX 980 SLI x3 |
|---|---|---|---|
| Battlefield 4, Ultra, 4x MSAA | 45.0/57.7 | 82.0/101.2 | 109.0/135.6 |
| Crysis 3, Very High, SMAA | 38.0/50.1 | 53.0/72.7 | 79.0/105.6 |
| Assassin's Creed Unity, Ultra High, FXAA | 31.0/37.8 | 54.0/66.2 | 76.0/92.3 |
| Far Cry 4, Ultra, SMAA | 51.0/59.6 | 75.0/94.0 | 59.0/90.7 |
| COD Advanced Warfare, Extra, FSMAA | 87.0/103.6 | 67.0/92.7 | 64.0/90.1 |
| Ryse: Son of Rome, High, SMAA | 43.0/53.9 | 69.0/92.9 | 77.0/132.4 |
| Shadow of Mordor, Ultra, High Textures, FXAA | 52.0/65.7 | 82.0/103.4 | 89.0/122.1 |
| Tomb Raider, Ultimate, FXAA | 60.0/77.7 | 102.4/136.1 | 153.3/194.6 |
| Metro Last Light Redux, Max, Post-AA | 39.0/56.0 | 64.0/92.3 | 64.0/111.2 |
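One way to read the 2560x1440 table is to express each multi-GPU result as a multiple of the single-card figure. The sketch below does that for four of the games, with the numbers copied from the table. The dictionary layout and the `scaling` helper are our own illustration, not part of any benchmarking tool.

```python
# (lowest, average) fps at 2560x1440 for one, two and three GTX 980s,
# copied from the table above.
RESULTS_1440P = {
    "Battlefield 4": [(45.0, 57.7), (82.0, 101.2), (109.0, 135.6)],
    "Far Cry 4": [(51.0, 59.6), (75.0, 94.0), (59.0, 90.7)],
    "COD Advanced Warfare": [(87.0, 103.6), (67.0, 92.7), (64.0, 90.1)],
    "Tomb Raider": [(60.0, 77.7), (102.4, 136.1), (153.3, 194.6)],
}


def scaling(results):
    """Return (lowest, average) speed-up factors relative to a single card."""
    base_low, base_avg = results[0]
    return [(low / base_low, avg / base_avg) for low, avg in results]


for game, results in RESULTS_1440P.items():
    for gpus, (low_x, avg_x) in enumerate(scaling(results), start=1):
        print(f"{game}: {gpus} GPU(s) lowest x{low_x:.2f}, average x{avg_x:.2f}")
```

On these figures Tomb Raider's average rises to roughly 2.5x with three cards, while COD Advanced Warfare's average sits below its single-card result, and Far Cry 4's lowest frame-rate is worse with three cards than with two.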

Bottlenecks can appear elsewhere in the system too. We strongly suspect that a lot of the occasional stutter that manifests in our benchmark runs comes from background streaming from storage - we use a fast SSD there, but as fast as it is, it's going to cause hold-ups in the system when we run benchmarks fully unlocked. This Crysis 3 image is enlightening - by regulating frame-rate with a 30fps cap, stutter is completely eliminated in our benchmark sequence, here running on a Core i3 system paired with Nvidia's GTX 750 Ti. Disabling the cap increases frame-rates but produces the highest frame-times. Consistency in frame-rate isn't just good for your eyes: there's a strong argument that in regulating frame-times, the system's components have more room to 'breathe'.

When it works, when the consistency is there, the effectiveness of extreme frame-rate gaming varies from 'a nice thing to have' to a fundamental improvement to the interface between player and game - depending on the title you're playing. For most it will be the thin end of the wedge when it comes to the law of diminishing returns, a colossal investment in return for a fairly subtle improvement to the game experience. Drop down from an £800 eight-core processor to a £250 quad, and lose one of those GTX 980s and on most titles, you'll still get the lion's share of the performance - especially if you're prepared to make minor concessions on graphical settings.

It's fair to say that extreme frame-rate gaming is mostly enjoyed by a very, very small niche of PC gamers, to the point where engineering software to take advantage of 144Hz displays may be something of an indulgence for a developer. However, the importance of very high, consistent frame-rates is set to become a big issue as we move into the upcoming virtual reality era. Low persistence and sustained performance are key in overcoming nausea issues, with 90fps the minimum for producing a good VR experience. Our tests suggest that while the brute force rendering horsepower is there (even on single GPU systems - hello, Titan X), the background architecture needs to see radical improvement if AAA gameplay is to transfer over effectively.

Based on promising early benchmarks, DirectX 12 should resolve the CPU or driver bottleneck issues we encountered during our testing, while both AMD and Nvidia are busy working on technologies to keep GPU latencies low. While VR may be the focus, if the end result is an improved showing for 144Hz gaming on enthusiast displays, based on what we've experienced with Battlefield 4 and Tomb Raider, that can only be a good thing.