What the hell is a teraflop anyway?

And to what extent does it define gaming power? Digital Foundry explains all.

When the first specs for PlayStation 4 and Xbox One leaked, an apparent disparity in their graphical performance was highlighted with one stark comparison. The Microsoft console - initially at least, before its specs tweak - featured 1.23 teraflops of GPU power, while the Sony machine trumped it with a significantly higher 1.84TF. On paper, the gap looked vast. Before a single console had been sold, Microsoft looked to be in trouble. The raw figures suggested that PS4 had 50 per cent more graphical power - a hugely important metric for gamers eager to see the next generation of consoles deliver the most powerful hardware possible.

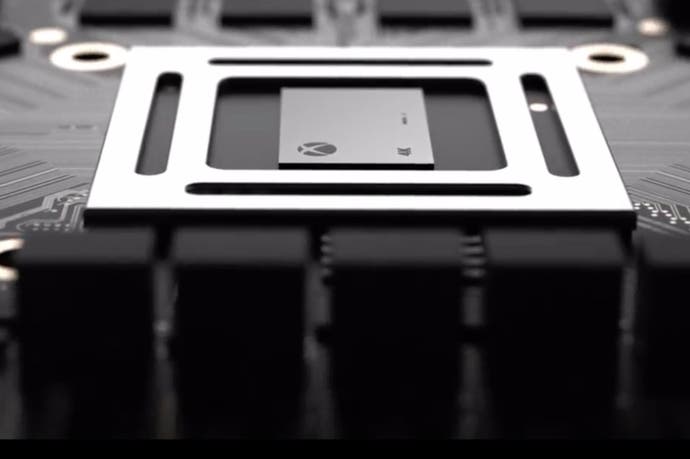

Now it seems the position of specs superiority has changed hands with the reveal of their successors. PlayStation 4K Neo currently has a mooted 4.2TF, while Microsoft's Project Scorpio trumps it with around 6TF - a 43 per cent advantage. All of which is fascinating, of course, but the question is: what is a teraflop, and to what extent are these seemingly vast spec differentials actually indicative of performance and, indeed, the gameplay experience?

At a basic level, a 'flop' is a floating point operation - a basic unit of computational power. When applied to the AMD graphics technology at the heart of the Microsoft and Sony consoles, the calculation is really simple: you multiply the number of shader cores in the GPU by its clock-speed. With AMD hardware, you'll find 64 shaders per compute unit (CU). Xbox One has 12 CUs and PS4 has 18, giving totals of 768 and 1152 shaders respectively. Their GPUs run at 853MHz and 800MHz respectively.

It's pretty basic maths, but there's one more step: you multiply the result by two, because each shader can execute a multiply and an accumulate operation together on every clock, which counts as two floating point operations. This produces some insanely large numbers so, just as the vast capacity of a typical hard drive is expressed in a more digestible unit, flop counts are scaled down too. Because the clock-speed is in MHz, the result is in megaflops, so dividing it by one million gives us the final teraflop figure [UPDATE: Examples below corrected, flops should be megaflops].
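Written out as a formula, assuming 64 shaders per compute unit and two flops per shader per clock as described above, with $N_{\text{CU}}$ the number of compute units and $f_{\text{MHz}}$ the GPU clock in MHz:

$$
\text{TF} = \frac{N_{\text{CU}} \times 64 \times f_{\text{MHz}} \times 2}{10^{6}}
$$

Since 1MHz is $10^{6}$ clocks per second, the numerator is in megaflops, and one teraflop ($10^{12}$ flops) is $10^{6}$ megaflops. For Xbox One's 12 CUs at 853MHz:

$$
\frac{12 \times 64 \times 853 \times 2}{10^{6}} = \frac{1{,}310{,}208}{10^{6}} \approx 1.31\ \text{TF}
$$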

So here's how the maths works out for Xbox One, PlayStation 4 and the current PlayStation 4K Neo spec:

- Xbox One: 768 shaders x 853MHz x 2 instructions per clock = 1,310,208 megaflops/1.31TF

- PlayStation 4: 1152 shaders x 800MHz x 2 instructions per clock = 1,843,200 megaflops/1.84TF

- Neo: 2304 shaders x 911MHz x 2 instructions per clock = 4,197,888 megaflops/4.2TF
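The same arithmetic can be expressed as a short Python sketch. The function name `teraflops` and the CU-based inputs are our own illustrative choices (Neo's 2304 shaders correspond to 36 CUs at 64 shaders each). It assumes AMD's 64 shaders per CU and two flops per shader per clock, and it yields a theoretical peak, not a measure of real-world performance.

```python
# Sketch of the teraflop calculation described above, assuming AMD GCN-style
# GPUs with 64 shaders per compute unit and 2 flops per shader per clock
# (one multiply plus one accumulate).
SHADERS_PER_CU = 64
FLOPS_PER_CLOCK = 2


def teraflops(shaders: int, clock_mhz: float) -> float:
    """Return theoretical peak throughput in teraflops.

    shaders x clock (MHz) x 2 gives megaflops; one teraflop is one
    million megaflops, hence the division by 1,000,000.
    """
    megaflops = shaders * clock_mhz * FLOPS_PER_CLOCK
    return megaflops / 1_000_000


consoles = {
    # name: (compute units, GPU clock in MHz)
    "Xbox One": (12, 853),
    "PlayStation 4": (18, 800),
    "Neo": (36, 911),
}

for name, (cus, clock) in consoles.items():
    shaders = cus * SHADERS_PER_CU
    print(f"{name}: {shaders} shaders -> {teraflops(shaders, clock):.2f}TF")
# Xbox One: 768 shaders -> 1.31TF
# PlayStation 4: 1152 shaders -> 1.84TF
# Neo: 2304 shaders -> 4.20TF
```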

Microsoft recently announced that its new Project Scorpio console features a graphics core with around 6TF of computational power, but revealed little else about the GPU spec. In effect, we have the result of the equation, but neither of its inputs: clock-speed and shader count. In our recent Scorpio spec analysis, we combined existing knowledge of console design with leaked information about AMD's upcoming GPU, codenamed Vega, to arrive at some best guesses about the make-up of Microsoft's new hardware.

- Scorpio Scenario #1: 3584 shaders x 840MHz x 2 instructions per clock = 6,021,120 megaflops/6.02TF

- Scorpio Scenario #2: 3840 shaders x 800MHz x 2 instructions per clock = 6,144,000 megaflops/6.14TF

Our speculation here assumes a lower clock-speed for Scorpio, as its processor is likely to be significantly larger than PlayStation Neo's (larger processors generate more heat, so they generally run at lower clocks). We are also assuming that Microsoft is using AMD's upcoming Radeon Vega part, which a leak indicates has 64 compute units (4096 shaders), because hitting 6TF using the same Polaris technology as PlayStation Neo would require an extreme clock-speed on the processor.

- Scorpio Scenario #3: 2304 shaders x 1302MHz x 2 instructions per clock = 5,999,616 megaflops/6TF

In fact, it would require the processor to run at faster clock-speeds than the original Radeon RX 480 PC hardware on which the Neo GPU is based, something which - realistically - is not going to happen. In short, unless Microsoft has access to a next-gen AMD GPU part that's not on the Radeon roadmap, we must be looking at a cut-down version of Vega.
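Running the formula in reverse shows why a Polaris-based 6TF design looks implausible: divide the target figure by twice the shader count to get the clock required. This is a minimal sketch using the hypothetical helper `required_clock_mhz`, the shader counts from the scenarios above and the same two-flops-per-clock assumption; it also re-checks the three scenario figures.

```python
def teraflops(shaders: int, clock_mhz: float) -> float:
    """Theoretical peak in teraflops, assuming 2 flops per shader per clock."""
    return shaders * clock_mhz * 2 / 1_000_000


def required_clock_mhz(target_tf: float, shaders: int) -> float:
    """Hypothetical helper: clock (MHz) needed for `shaders` to hit target_tf."""
    return target_tf * 1_000_000 / (shaders * 2)


# Shader counts taken from the Scorpio scenarios above.
for shaders in (2304, 3584, 3840):
    clock = required_clock_mhz(6.0, shaders)
    print(f"{shaders} shaders need ~{clock:.0f}MHz for 6TF")

# Re-check each scenario's quoted teraflop figure.
scenarios = [(3584, 840), (3840, 800), (2304, 1302)]
for shaders, clock in scenarios:
    print(f"{shaders} x {clock}MHz -> {teraflops(shaders, clock):.2f}TF")
```

With Neo's 2304 shaders, reaching 6TF needs roughly 1302MHz, whereas the wider 3584- and 3840-shader configurations get there at around 837MHz and 781MHz.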

To what extent do teraflops define system performance?

Teraflops are a very basic measure of computational power, separate and distinct from all other aspects of GPU design. For example, AMD's GCN (graphics core next) architecture has always been heavy on raw computational power compared to its Nvidia rivals - one reason why Radeon GPUs did so well back in the cryptocurrency gold rush era. However, it is important to stress that this does not necessarily translate into games performance.

A good example of this is to compare Nvidia's GTX 1080, rated at 9TF, with AMD's Radeon R9 Fury X at 8.6TF. Based on computational power alone, you'd expect these two graphics cards to offer broadly equivalent performance, but as the 4K benchmarks below demonstrate, the GTX 1080 isn't just a bit faster, it's a lot faster in all but one title we tested. Indeed, even the slower GTX 1070 - with a rated 6.5TF - matches or outperforms Fury X in most of the games we tested. The GTX 1070 also trails Nvidia's own Titan X (7TF) on teraflops, yet again it manages to match or even outperform it.

Drivers - the software powering the hardware - are important, but more crucially, so is the design of the graphics architecture itself, not to mention other aspects of the hardware, such as memory bandwidth and on-chip memory caches. However, comparisons between console parts can be broadly indicative for two reasons: firstly, because the surrounding elements of the GPU tend to scale as required in line with compute performance and secondly, because both PS4 and Xbox One GPUs come from the same family and share very similar core designs.

| 3840x2160 (4K), fps | GTX 1070 | GTX 1080 | Titan X | R9 Fury X |
|---|---|---|---|---|
| Assassin's Creed Unity, Ultra High, FXAA | 25.4 | 32.5 | 25.6 | 22.6 |
| Ashes of the Singularity, Extreme, 0x MSAA, DX12 | 43.1 | 53.3 | 40.9 | 49.0 |
| Crysis 3, Very High, SMAA T2x | 31.5 | 40.1 | 31.3 | 31.4 |
| The Division, Ultra, SMAA | 31.0 | 37.3 | 30.7 | 31.1 |
| Far Cry Primal, Ultra, SMAA | 33.5 | 42.4 | 33.5 | 34.5 |
| Rise of the Tomb Raider, Ultra, SMAA, DX12 | 35.6 | 45.8 | 35.9 | 29.6 |
| The Witcher 3, Ultra, Post AA, No HairWorks | 37.3 | 43.7 | 34.0 | 32.8 |
| Project Cars, Ultra, SMAA | 33.6 | 38.5 | 29.8 | 20.9 |
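One quick way to quantify the gap is to divide each GTX 1080 result in the table by the Fury X result for the same game and compare that with the two cards' teraflop ratio (9TF vs 8.6TF, about 1.05x). The figures are copied from the table and text above; the variable names are our own.

```python
# Teraflop ratings quoted in the text.
TF = {"GTX 1080": 9.0, "R9 Fury X": 8.6}

# 4K results from the table: game -> (GTX 1080 fps, R9 Fury X fps).
fps_4k = {
    "Assassin's Creed Unity": (32.5, 22.6),
    "Ashes of the Singularity": (53.3, 49.0),
    "Crysis 3": (40.1, 31.4),
    "The Division": (37.3, 31.1),
    "Far Cry Primal": (42.4, 34.5),
    "Rise of the Tomb Raider": (45.8, 29.6),
    "The Witcher 3": (43.7, 32.8),
    "Project Cars": (38.5, 20.9),
}

tf_ratio = TF["GTX 1080"] / TF["R9 Fury X"]
speedups = {game: gtx / fury for game, (gtx, fury) in fps_4k.items()}

print(f"Teraflop ratio: {tf_ratio:.2f}x")
for game, speedup in speedups.items():
    print(f"{game}: {speedup:.2f}x")
```

On these numbers the GTX 1080's lead runs from about 1.09x (Ashes of the Singularity) to about 1.84x (Project Cars), and every result sits above the ~1.05x its teraflop rating alone would predict.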

However, inter-generational comparisons between Xbox One and Scorpio, or PS4 and Neo, may not be quite so indicative. Although AMD is supplying graphics hardware that is fully compatible with the older generation, the new wave of GPUs also features architectural improvements. In short, we would hope to see more performance per teraflop. It's something we can test when AMD releases its upcoming RX 460 graphics card. This features the same 16 compute units as the Radeon HD 7850, but is based on the newer Polaris design. Once we equalise clock-speeds and memory bandwidth between the two, benchmarking the pair should in theory give us a good idea of the efficiency improvements AMD's fourth-gen GCN offers.

The bottom line is this. On paper, Scorpio has 4.6x the compute power of Xbox One, while Neo has 2.3x the power of PS4. The improved designs in AMD's newer technology may actually deliver a bigger boost to performance than teraflop ratings alone would suggest. Even so, it's important to stress that a 40 per cent increase in compute won't translate into a 40 per cent increase in actual performance.
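The 'on paper' multipliers are simple ratios of the teraflop figures quoted earlier. A quick check follows; note that Scorpio's 6TF is itself an approximate figure.

```python
# Teraflop figures as quoted in the article (Scorpio's is approximate).
tf = {"Xbox One": 1.31, "PS4": 1.84, "Neo": 4.2, "Scorpio": 6.0}

scorpio_vs_xbox_one = tf["Scorpio"] / tf["Xbox One"]
neo_vs_ps4 = tf["Neo"] / tf["PS4"]

print(f"Scorpio vs Xbox One: {scorpio_vs_xbox_one:.1f}x")  # 4.6x
print(f"Neo vs PS4: {neo_vs_ps4:.1f}x")  # 2.3x
```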

GPU performance doesn't scale completely in line with computational power

Generally speaking, cramming more AMD compute units into a GPU produces a higher level of performance. But, to use a crude example, 2x the teraflops doesn't translate into 2x the frame-rate. There are a multitude of potential reasons for this, but memory bandwidth constraints are usually a major factor.

Take the Radeon R9 380X graphics card, for example. It is a fully enabled 32 compute unit version of AMD's Tonga chip design. When it's benchmarked against the cut-down R9 380 (using the same chip with 28 compute units) we see only a 10 to 15 per cent increase in gaming frame-rates. This is despite higher GPU core clocks and faster memory, in addition to more shaders. The disparity between actual performance and rated teraflops only tends to increase as the gap widens between GPU specs. Microsoft is doing the best it can with Scorpio, backing its 6TF GPU with memory offering 320GB/s of bandwidth vs the 218GB/s found in Neo.

The disparity between teraflops and in-game performance is seen to a certain extent on PS4 and Xbox One too. Raw benchmarks for GPU performance on the consoles are almost non-existent - games tend to run with frame-rate caps, plus developers adjust quality settings on various rendering components or indeed change native rendering resolution to bring performance more closely into line. But there is an exception: Io Interactive's Hitman. This appears to run at the same 1080p resolution on both PS4 and Xbox One, with identical visuals - and it runs with an unlocked frame-rate, pretty close to ideal benchmarking conditions.

The end result is that in areas of the game where the GPU is the primary limiting factor, PlayStation 4 offers a 30 per cent increase in performance, despite having a 40 per cent compute advantage (1.84TF vs 1.31TF) - not to mention advantages in memory bandwidth too (depending on how Io is using Xbox One's embedded ESRAM). And here's the thing: Hitman can actually run faster on Xbox One in some scenarios, which brings us on to our next point.
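One way to quantify this is to divide the observed frame-rate gain by the on-paper compute gain. That framing is our own illustration, not a standard metric. With the Hitman figures above, roughly three quarters of PS4's compute advantage shows up on-screen:

```python
def percent_advantage(a: float, b: float) -> float:
    """Percentage by which a exceeds b."""
    return (a / b - 1) * 100


# Figures quoted above for GPU-bound areas of Hitman.
compute_gain = percent_advantage(1.84, 1.31)  # PS4 vs Xbox One teraflops
observed_gain = 30.0  # measured frame-rate advantage, per cent

# Illustrative "scaling efficiency": share of the paper advantage realised.
efficiency = observed_gain / compute_gain

print(f"Compute advantage: {compute_gain:.1f}%")  # 40.5%
print(f"Realised in-game: {efficiency:.0%}")  # 74%
```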

Gaming performance can be CPU-limited too

Hitman is an interesting example of how game performance can be CPU-limited as well as GPU-bound. The game's Paris stage is rich in NPCs, all of which need to have their behaviours simulated and their animations processed. This work is better suited to the CPU, and PS4 and Xbox One both use the same relatively weak AMD Jaguar processor cores - a CPU architecture originally designed for mobile devices. To make up for their weak performance, both platform holders doubled up on core count - a bit of a fudge, to be honest. The difference is that the Microsoft console runs its CPU cores at 1.75GHz vs PS4's 1.6GHz - a 9.4 per cent advantage which you can actually see on-screen in some areas of Hitman.

Sony has attempted to address this problem with Neo. It seems that AMD's next-gen 'Zen' CPU technology is not yet available for integration alongside the GPU in a single processor, so the platform holder has done the next best thing: it is running the same eight-core cluster at a higher clock-speed, 2.1GHz on Neo vs 1.6GHz on PS4 - a 31.3 per cent increase in clock-speed. We don't know exactly what CPU architecture Project Scorpio uses, but on the balance of probabilities, there's a very good chance that Microsoft is using the same strategy of up-clocking the existing Jaguar cores, or a variation of them.
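Both CPU percentages are plain clock-speed ratios (exactly 9.375 and 31.25 per cent, rounded to 9.4 and 31.3 in the text). They describe clock-speed, not measured performance, and a CPU-bound game would typically gain no more than this from the same cores. A minimal sketch, with a hypothetical function name:

```python
def clock_advantage(new_mhz: float, base_mhz: float) -> float:
    """Percentage increase of new_mhz over base_mhz."""
    return (new_mhz / base_mhz - 1) * 100


# CPU clocks quoted above, in MHz.
PS4_MHZ, XBOX_ONE_MHZ, NEO_MHZ = 1600, 1750, 2100

print(f"Xbox One vs PS4: +{clock_advantage(XBOX_ONE_MHZ, PS4_MHZ):.2f}%")
print(f"Neo vs PS4: +{clock_advantage(NEO_MHZ, PS4_MHZ):.2f}%")
```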

This is our primary concern with Neo and Scorpio: we are seeing game-changing increases to graphics power with more powerful GPUs and some insane flop-counts, but they are not being matched by a similar boost to CPU power. This may explain why we are seeing a focus on 4K resolutions as opposed to significantly higher frame-rates - doubling performance from 30fps to 60fps (something we would much prefer) also requires asking much more of the CPU. And on Neo at least, where we know the spec, we aren't seeing anything like the doubling of CPU performance required.
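A rough way to see why 60fps is demanding on the CPU side: every frame's CPU work must fit within 1000/fps milliseconds, so 30fps allows about 33.3ms and 60fps about 16.7ms. The sketch below takes a hypothetical game whose CPU work exactly fills a 30fps frame on PS4. Purely for illustration, it assumes that this work scales linearly with clock-speed, although real workloads rarely scale perfectly.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available per frame at a given frame-rate."""
    return 1000.0 / fps


# Hypothetical game: CPU work that exactly fills a 30fps frame on PS4.
ps4_cpu_ms = frame_budget_ms(30)

# Assumption for illustration only: CPU time scales inversely with clock,
# so moving from 1.6GHz (PS4) to 2.1GHz (Neo) shrinks it by 1600/2100.
neo_cpu_ms = ps4_cpu_ms * 1600 / 2100

print(f"30fps budget: {frame_budget_ms(30):.1f}ms")  # 33.3ms
print(f"60fps budget: {frame_budget_ms(60):.1f}ms")  # 16.7ms
print(f"Same CPU work on Neo: {neo_cpu_ms:.1f}ms")  # 25.4ms
print(f"Fits in a 60fps frame? {neo_cpu_ms <= frame_budget_ms(60)}")  # False
```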

The bottom line: teraflops don't fully define a console's graphical power

There is no direct, linear relationship between in-game performance and computational power as measured by teraflops, and while we can get some idea of relative GPU performance, this only really works when comparing different graphics hardware based on the same core architecture. Even then, a GPU with, say, a 40 per cent compute advantage over another will not see that advantage scale linearly into in-game performance. The capabilities of a graphics processor also rely on more than just computational power - memory bandwidth in particular is of key importance.

What is clear, however, is that Xbox One's 12 compute unit, 1.31TF GPU has been at a disadvantage against PS4's 18 compute unit, 1.84TF graphics core - a situation often exacerbated by the Microsoft console's lower memory bandwidth. Even so, bearing in mind the big divide in specs, developers have coped admirably in delivering broadly equivalent experiences in many multi-platform titles, the big differentiator being in-game resolution (1080p vs 900p, for example) - though in general terms, PS4 has often offered more stable frame-rates too.

All the signs point to a similar spec divide between PlayStation 4K Neo and Project Scorpio, with the cards stacked in Microsoft's favour this time - but how the spec differential translates into the game experience remains to be seen. Microsoft itself seems intent on targeting native 4K gameplay. It's a strategy that Sony is considering too, though upscaling from lower resolutions is more likely. However, faced with more powerful rival hardware, maybe Sony, and indeed games developers, will reconsider and aim to get more out of the full HD, 1080p experience? As we mentioned recently, there are other options for the new consoles beyond higher resolutions...
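For a sense of scale, the resolutions discussed here (1600x900 and 1920x1080 for the 900p vs 1080p split, 3840x2160 for native 4K) can be compared by pixel count. This sketch simply multiplies width by height and takes ratios. Pixel count is only a rough proxy for GPU load, since per-pixel cost varies from game to game.

```python
# Pixel counts for the resolutions mentioned above (width x height).
RESOLUTIONS = {
    "900p": (1600, 900),
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
}


def pixels(name: str) -> int:
    """Total pixels rendered per frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


for name in RESOLUTIONS:
    print(f"{name}: {pixels(name):,} pixels")

print(f"1080p vs 900p: {pixels('1080p') / pixels('900p'):.2f}x")  # 1.44x
print(f"4K vs 1080p: {pixels('4K') / pixels('1080p'):.2f}x")  # 4.00x
```

By this measure 1080p carries 1.44x the pixels of 900p (for comparison, the PS4/Xbox One compute gap is roughly 1.4x), while native 4K is exactly four times 1080p.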