Just how good is the new wave of AI image generation tools?

Examining DALL-E, Midjourney and Stable Diffusion - and the implications for games development.

AI-generated imagery is here. Type a simple description of what you want to see into a computer and beautiful illustrations, sketches, or photographs pop up a few seconds later. By harnessing the power of machine learning, high-end graphics hardware is now capable of creating impressive, professional-grade artwork with minimal human input. But how could this affect video games? Modern titles are extremely art-intensive, requiring countless pieces of texture and concept art. If developers could harness this tech, perhaps the speed and quality of asset generation could radically increase.

However, as with any groundbreaking technology, there's plenty of controversy too: what role does the artist play if machine learning can generate high quality imagery so quickly and so easily? And what of the data used to train these AIs - is there an argument that machine learning-generated images are created by effectively passing off the work of human artists? There are major ethical questions to grapple with once these technologies reach a certain degree of effectiveness - and based on the rapid pace of improvement I've seen, the questions may need to be addressed sooner rather than later.

In the meantime, the focus of this piece is to see just how effective these technologies are right now. I tried three of the leading AI generators: DALL-E 2, Stable Diffusion, and Midjourney. You can see the results of these technologies in the embedded video below (and indeed in the collage at the top of this page) but to be clear, I generated all of them, either by using their web portals or else running them directly on local hardware.

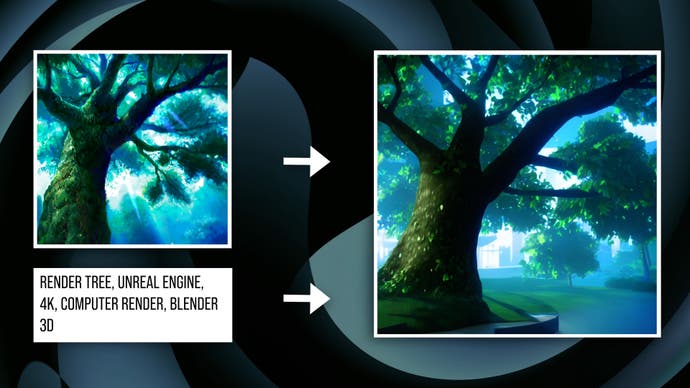

At the moment, the default way of using AI image generators is through something called 'prompting'. Essentially, you simply write what you'd like the AI to generate and it does its best to create it for you. Using DALL-E 2, for example, the best way to prompt it seems to be to use a combination of a simple description, plus some sort of stylisation, or indication of how you'd like the image to look. Attaching a lot of descriptors at the end of a prompt often helps the AI deliver a high quality result.
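To make that prompt structure concrete, here is a minimal Python sketch that assembles a prompt from a simple description, a style, and a list of trailing descriptors. The `build_prompt` helper and the example wording are my own illustration, not part of any generator's interface; each tool simply accepts the finished string.

```python
from typing import Sequence


def build_prompt(subject: str, style: str = "", descriptors: Sequence[str] = ()) -> str:
    """Join a plain description, an optional style and trailing descriptors.

    Hypothetical helper for illustration only: the generators discussed here
    just take a single line of text, so this only formats that line.
    """
    parts = [subject.strip()]
    if style.strip():
        parts.append(style.strip())
    # Skip empty descriptors so stray commas don't end up in the prompt.
    parts.extend(d.strip() for d in descriptors if d.strip())
    return ", ".join(parts)


prompt = build_prompt(
    "a mushroom-filled cave with a winding path",
    style="digital painting",
    descriptors=("highly detailed", "dramatic lighting"),
)
print(prompt)
```

Keeping the description, style and descriptors separate makes it easy to swap one part while holding the others fixed, which is handy when comparing results.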

There's another form of prompting that involves giving the software a base image to work with, along with a verbal prompt that essentially guides the software to create a new image. Right now this is only available in Stable Diffusion. Like many other AI techniques, AI image generation works by sampling a large variety of inputs - in this case, databases of images - and coming up with parameters based on that work. In broad strokes, it's similar to the way that DLSS or XeSS work, or other machine learning applications like the text generator GPT-3. On some level, the AI is 'learning' how to create art with superhuman versatility and speed.

Conceptually at least, AI art generation should be limited by its dataset - the collection of billions of images and keywords that it was trained on. In practice, these tools have been trained on so many inputs that they end up being very flexible. At their best, they demonstrate human-like creativity when subjected to complex or abstract prompts, as the AI has, in a sense, 'learned' how we generally understand and categorise visual information. Plus, image generators produce outputs based on random seeds - meaning that the same set of keywords can produce new and interesting results each time you run it.
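The role of the seed can be shown with a toy Python sketch. It does not generate an image. It only assumes, as a simplification, that a generator begins from pseudo-random noise derived from the seed, so the same seed reproduces the same starting point and a different seed gives a different one. The `starting_noise` function is hypothetical.

```python
import random
from typing import List


def starting_noise(seed: int, size: int = 4) -> List[float]:
    """Return a short list of Gaussian noise values derived from a seed.

    Toy stand-in for the much larger block of random noise a real image
    generator would start from; only the seeding behaviour is illustrated.
    """
    rng = random.Random(seed)  # private generator, so global state is untouched
    return [round(rng.gauss(0.0, 1.0), 3) for _ in range(size)]


# Same seed: identical starting point, so a run can be repeated exactly.
print(starting_noise(1234) == starting_noise(1234))

# Different seed: a different starting point, hence a different picture
# from the very same prompt.
print(starting_noise(1234) == starting_noise(1235))
```

In practice this is why noting down the seed of a result you like is worthwhile: it lets you return to that image and adjust the prompt around it.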

The positive implications for the video game industry are numerous. For example, remasters are becoming ever-more common. However, older titles come saddled with technical baggage. Some problems are easy to overcome, but updating the source artwork - in particular, the textures - used for those games often takes an enormous amount of effort and time. That being the case, it was no surprise that when AI upscaling techniques became popular starting around 2020, they immediately saw use across a wide variety of remastering efforts. Games like Chrono Cross: The Radical Dreamers Edition, Mass Effect Legendary Edition, and the Definitive Edition Grand Theft Auto titles all used AI upscaling to mixed effect. AI upscaling works very well when working with relatively high-quality source artwork with simpler kinds of detail but current AI upscaling models really struggle with lower resolution art, producing artifact-ridden results.

But what if we generated all-new assets instead of merely trying to add detail? That's where AI image generation comes in. Take the Chrono Cross remaster, for example. The original game's artwork is pretty low resolution and the AI upscaling work does a reasonable job but ends up looking a bit messy. However, if we feed the source imagery into Stable Diffusion and add appropriate prompt material, we can generate all-new high quality artwork that maintains similar visual compositions. We can redraw this cave area with the same fungal shapes and rocks, just at a much higher level of fidelity. By modifying some parameters, we can generate something very close to the original, or pieces that rework the scene by reinterpreting certain areas, like the pathway near the centre. There are other examples in the video above.
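One of the parameters that controls how far the output drifts from the source image is usually called the denoising strength. The Python sketch below assumes a common convention in image-to-image diffusion implementations, where a strength between 0 and 1 decides how many of the scheduled denoising steps are actually run. The function name is hypothetical, and the GUI I used may expose this setting differently.

```python
def denoising_steps(total_steps: int, strength: float) -> int:
    """Number of denoising steps applied to the source image.

    Assumed convention: strength 0.0 leaves the source untouched, 1.0 runs
    every step and largely ignores it, and values in between run a
    proportional share of the schedule.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(total_steps * strength), total_steps)


for strength in (0.0, 0.5, 1.0):
    steps = denoising_steps(50, strength)
    print(f"strength {strength}: {steps} of 50 steps")
```

Under that assumption, low values give a cleaned-up version of the original composition, while higher values leave the software more room to reinterpret areas of the scene.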

Traditional textures in 3D games are a good target as well. Resident Evil 4 runs on most modern platforms nowadays but its sixth-gen era texture work looks quite messy. Modern games try to depict more complex details in texture work, so simply upscaling or upsampling the original textures doesn't work very well. Again, by using original texture assets as an input we can generate high-quality artwork with much more natural looking detail. The software reinterprets the original work with our verbal prompt as a guide, producing high fidelity results in seconds.

You could, of course, apply the same techniques to creating original assets for games. Provide a source image, like a photograph or an illustration, and generate a new texture asset or piece of artwork for your game. Alternatively, you could just provide a prompt and allow the AI system to generate brand new art without an image to directly guide it. The possibilities here seem virtually endless. Asset creation in the game industry is a huge constraint on development resources, and these sorts of tools have the potential to massively speed up workflows.

Potentially, Stable Diffusion seems quite powerful for these sorts of applications, as you can easily queue up hundreds of images at once on your computer for free and cherry-pick the best results. DALL-E 2 and Midjourney also don't currently allow you to work from a specific source image, so trying to match a piece of existing art is much more challenging. Stable Diffusion also has an option to generate tileable images, which should help with creating textures.

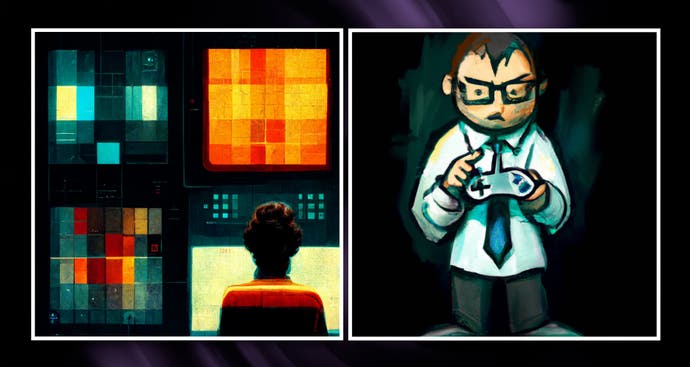

I can see these tools being used earlier in the production process as well. During development, studios need countless pieces of concept art. This artwork tends to guide the look of the game and provides reference for the game's models and textures. At the moment, this is done by hand using digital tools, like graphics tablets, and is very labour-intensive - but AI art tools are capable of generating artwork extremely quickly. Plug in a few parameters and you can easily generate hundreds of examples to work from. Characters, environments, surfaces - it's all trivial to generate with some decent prompting and a few moments of processing time.

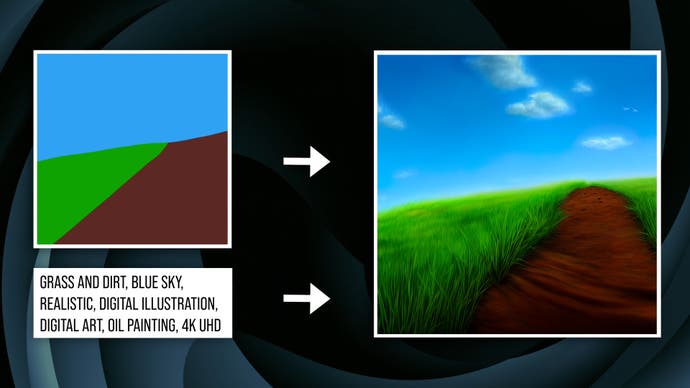

Key concept art techniques translate to these AI workflows too. A lot of concept art is made by looking at a 3D model or rough sketch and doing a 'paintover', which is when an artist draws detail on a simplified representation of a scene. By feeding the AI a base image to guide composition, we can do the exact same thing. We can provide it with a basic sketch, a 3D model, or even the simplest of compositional drawings, and it will work from that to create a high-quality piece of concept art. Just block out the most basic visual shape, combine it with a verbal prompt and you can get a great result that matches what you need from the composition.

Impressive results are achievable but it is important to stress that current AI models are hardly infallible. Actually working out a coherent aesthetic across multiple pieces of artwork can be tricky, as even an identical set of descriptive keywords produces quite different results depending on what you ask it to depict. Different subject areas in commercial artwork tend to use different techniques and this gets reflected in the AI outputs. To generate consistent-looking imagery, you need to carefully engineer your prompts. Even then, getting something like what you're looking for requires some cherry-picking. AI art does seem like a very useful tool, but it does have its limits at the moment.

In the past, I have worked on digital art, as well as motion graphics that made heavy use of my own illustrations and graphic art. AI image generation tools seem uniquely well-suited to this sort of work, as it requires high volumes of art. You could also imagine a future AI that was capable of generating these results for the entire picture in real time. Right now these techniques take seconds of processing, even on fast GPUs, but perhaps a combination of new hardware and optimisation could produce results good enough for use at runtime.

It's also very easy of course to simply take the generated images and plug them into conventional image editing programs to correct any mistakes, or to add or remove elements. A few minor touch-ups can eliminate any distracting AI artifacts or errors. Keep in mind as well that future AI image generation software is likely to be even more impressive than this - while these aren't first-generation projects exactly, research and product development in this field has been somewhat limited until recently. I'd expect a potential 'DALL-E 3' or 'Stabler Diffusion' to deliver more compelling and consistent results.

Clearly these products work well right now though, so which is the best option? In terms of quality, DALL-E 2 is very capable of interpreting abstract inputs and generating creative results. If you want to be specific, you can, but the AI often works perfectly well when given a vague prompt and left to its own devices. It's very creative - DALL-E is able to associate and pull concepts together sensibly based on loose ideas and themes. It's also generally very good at creating coherent images, for instance consistently generating humans that have the correct number of limbs and in the correct proportions.

Stable Diffusion tends to require much more hand-holding. At the moment, it struggles to understand more general concepts, but if you feed it plenty of keywords, it can deliver very good results as well. The big advantage of Stable Diffusion is its image prompting mode, which is very powerful. And if you turn up the settings, you can get some extremely high-quality results - probably the best of the current AI generators.

Midjourney is quite good at stylisation - taking an existing concept and rendering it like a certain type of painting or illustration, for instance. It also works very well with simple prompts and can deliver very high-quality results - but it's perhaps a bit less 'creative'. Midjourney also tends to exhibit more AI artifacts than the other two generators and often has issues maintaining correct proportions. In my opinion, it's the worst of the three.

| | DALL-E | Stable Diffusion | Midjourney |
|---|---|---|---|
| Price (USD) | $0.10 per image generated | Free (when running locally) | Free tier, $30 per month sub for unlimited images |
| Availability | Invitation only | Open | Open |
| Access | Website | Website/Local Computer | Website |
| Source | Closed | Open | Closed |

DALL-E 2 and Midjourney are both commercial and web-based, with relatively slick web interfaces that are easy to use. DALL-E 2 unfortunately has been invite-only since its launch in April, though you can apply to join a waitlist if you like. Stable Diffusion, on the other hand, is totally free and open-source. The real upside is that Stable Diffusion can run on local hardware and can be integrated into existing workflows very easily.
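The prices in the table can be turned into a quick back-of-the-envelope comparison. This Python sketch uses only the two quoted figures ($0.10 per DALL-E image and $30 per month for unlimited Midjourney images). The ratio of 20 generated images per usable one is purely an illustrative assumption, not a measurement.

```python
# Prices from the table, held in whole cents to avoid floating-point rounding.
DALLE_CENTS_PER_IMAGE = 10
MIDJOURNEY_CENTS_PER_MONTH = 3000


def cost_per_keeper_cents(cents_per_image: int, images_per_keeper: int) -> int:
    """Cost of one usable image when only one in `images_per_keeper` is kept."""
    return cents_per_image * images_per_keeper


# Monthly volume at which a flat subscription matches per-image pricing.
break_even_images = MIDJOURNEY_CENTS_PER_MONTH // DALLE_CENTS_PER_IMAGE
print(f"Break-even: {break_even_images} images per month")

# Hypothetical cherry-picking ratio: keep one image in every 20 generated.
keeper_cents = cost_per_keeper_cents(DALLE_CENTS_PER_IMAGE, 20)
print(f"Cost per kept image at 1-in-20: ${keeper_cents / 100:.2f}")
```

The break-even figure works out to 300 images a month, which is easy to exceed once you start discarding weaker results.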

This wouldn't be Digital Foundry without some performance analysis. DALL-E 2 is quite a bit faster than Midjourney, though as both run through web portals your personal hardware doesn't matter. DALL-E 2 usually takes about 10 seconds for a basic image generation at the moment, while Midjourney takes a minute or so. Running Stable Diffusion locally produces variable results, depending on your hardware and the quality level of the output.

At 512x512 resolution with a low detail step count, it takes only three or four seconds to create an image on my laptop with a mobile RTX 3080. However, ramp up the level of detail, and increase the resolution, and each image takes 30 or 40 seconds to resolve. Using more advanced samplers can also drive up the generation time. There are many other implementations of Stable Diffusion available for download, some of which may differ significantly from the simple GUI version I was running, though I expect performance characteristics should be similar.
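Those per-image timings add up quickly when queuing large batches. The Python sketch below scales the figures I measured on my laptop (three to four seconds at low settings, 30 to 40 seconds at high settings) to a batch. The batch size of 200 is a hypothetical example, and other hardware will give different numbers.

```python
from typing import Tuple

# Per-image timings in seconds from the mobile RTX 3080 laptop described above.
LOW_SETTINGS_SECONDS = (3, 4)
HIGH_SETTINGS_SECONDS = (30, 40)


def batch_seconds(images: int, per_image: Tuple[int, int]) -> Tuple[int, int]:
    """Return the (best, worst) total time in seconds for a batch of images."""
    fastest, slowest = per_image
    return images * fastest, images * slowest


batch = 200  # hypothetical queue size
for label, timing in (("low", LOW_SETTINGS_SECONDS), ("high", HIGH_SETTINGS_SECONDS)):
    best, worst = batch_seconds(batch, timing)
    print(f"{label} settings: {best / 60:.0f} to {worst / 60:.0f} minutes for {batch} images")
```

One practical approach this suggests is to explore ideas at low settings and re-run only the promising ones at higher quality.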

To run Stable Diffusion properly, you'll need a 10-series or later Nvidia GPU with as much VRAM as possible. With 8GB on my mobile 3080 I can generate images up to a maximum of just 640x640, though of course you can AI upscale those images afterwards for a cleaner result. There are other ways to get Stable Diffusion up and running, including workarounds to get it running on AMD GPUs as well as on Apple Silicon-based Mac computers but using a fast Nvidia GPU is the most straightforward option at the moment.

Based on my experiences, AI image generation is a stunning, disruptive technology. Type some words in, and get a picture out. It's the stuff of science fiction but it's here today and it works remarkably well - and remember, this is just the beginning. Use-cases for this tech are already abundant but I do feel like we are just seeing the tip of the iceberg. High quality AI image generation has only been widely available for a short time, and new and interesting integrations are popping up every day. Gaming in particular seems like an area with a lot of potential, especially as the technology becomes more broadly understood.

The most significant barrier at this point is pricing. DALL-E 2 is fairly costly to use and Stable Diffusion essentially demands a reasonably fast Nvidia GPU if you want to run it locally. Getting a high-quality image often requires discarding large numbers of bad ones, so AI tools can be expensive - either in money or in time. Exactly how far will these tools go? For the last half-decade or so, AI art was nothing more than an amusing novelty, producing crude and vague imagery with no commercial purpose. However, in the last year - specifically, the last four or so months - we've seen the release of several seriously high-quality AI solutions. It remains to be seen whether AI inference for art will continue to progress at a rapid pace or whether there may be unforeseen limits ahead. Ultimately though, a powerful new tool for asset creation is emerging - and I'll be intrigued to see just how prevalent its use becomes in the games we play.