The Last of Us Part 1 is much improved on PC - but big issues remain unaddressed

Texture quality for 8GB GPUs is fixed, but this is still a challenging game to run well.

The Last of Us Part 1 on PC arrived towards the end of March in a pretty desperate state - a good experience only available to those with more powerful hardware, with profound issues facing gamers on more mainstream components. Progress has been made, however, across a series of patches and we thought it might be a good time to check in on the port to deliver an update on the game's current quality level. There's a lot of good news to impart here, but also some lingering issues - and we're concerned that they may never be addressed.

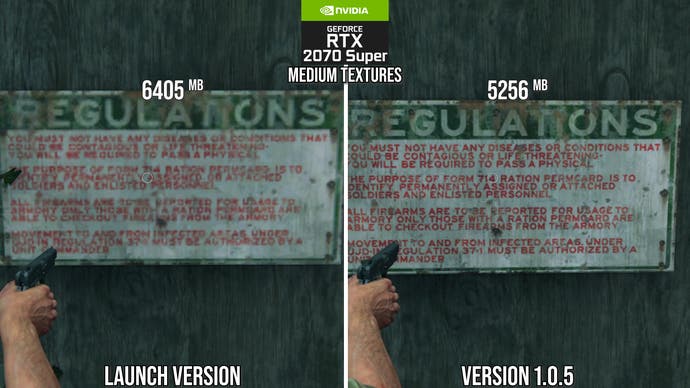

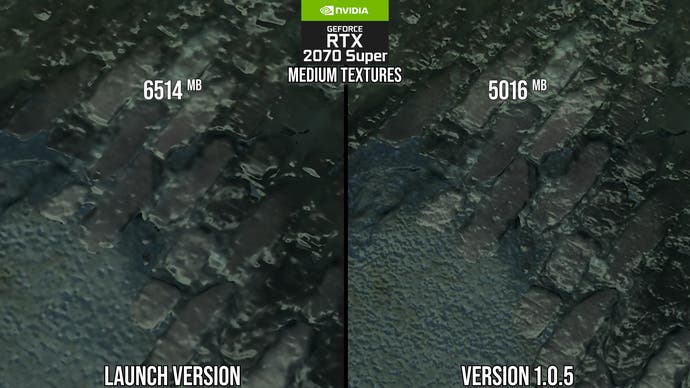

But let's address the good news first. At launch, there were profound issues with texture quality on 8GB graphics cards, which is highly problematic given how many of them are in use. Setting texture quality to high blew through the GPU's VRAM limit, resulting in severe stuttering. The alternative was to drop back to medium or even low quality textures, depending on your resolution - the problem being that the quality level looked more like 'ultra low'. In fact, textures from the PS3 original were more highly detailed.

I'd venture to suggest that this issue is now resolved. Medium textures now look absolutely fine, with only a minimal hit to quality compared to the high preset. Optimisations to memory management now mean that 8GB GPU owners can actually use the high texture preset instead if they wish, which gives a look very close indeed to the PlayStation 5 version. Naughty Dog deserves praise for delivering this. It demonstrates how PC scaling should work, and why we should not blindly assert that 8GB GPUs are now obsolete.

So, how was this achieved? Based on the difference in medium textures, it looks like completely different mipmaps are now used when the game is set to medium. It's as if the medium textures received a dedicated art pass to keep resolution higher and retain more detail, producing assets that may not have existed at all before. On top of this, texture streaming has also changed to accommodate GPUs with different memory sizes. This is controlled by a new option called 'texture streaming rate', which is a brilliant addition.

This option defaults to 'normal' on the medium and high presets. At 'normal', the texture cache is smaller, freeing up VRAM while keeping texture quality unchanged within the view frustum. However, if the camera moves rapidly, some environmental textures can stream in late. At the fast or fastest setting, texture popping is virtually gone, but at the expense of higher VRAM utilisation. If you are playing the game on an 8GB GPU, I recommend high textures with the normal or fast setting, which looks absolutely fine.
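The trade-off behind 'texture streaming rate' can be illustrated with a toy model. This is not Naughty Dog's implementation, which isn't public: it is a minimal sketch assuming a simple least-recently-used (LRU) cache measured in hypothetical 'texture slots', and it counts how many texture requests miss the cache and would have to stream in, which is where visible pop-in comes from. The function name `simulate_streaming` and the texture names are illustrative only.

```python
from collections import OrderedDict


def simulate_streaming(accesses: list[str], cache_slots: int) -> int:
    """Count texture requests that miss the cache and must stream in.

    Hypothetical toy model: each slot holds one texture, and when the cache
    is full the least recently used texture is evicted. A larger cache_slots
    value stands in for a 'fast'/'fastest'-style setting: more VRAM reserved
    for cached textures, fewer late arrivals.
    """
    cache = OrderedDict()
    misses = 0
    for tex in accesses:
        if tex in cache:
            cache.move_to_end(tex)  # already resident: mark as most recently used
            continue
        misses += 1  # not resident: would stream in late (potential pop-in)
        cache[tex] = None
        if len(cache) > cache_slots:
            cache.popitem(last=False)  # evict the least recently used texture
    return misses


# Hypothetical rapid camera pan: back and forth between two views, twice.
view_a = ["wall", "floor", "door"]
view_b = ["car", "tree", "sign"]
accesses = (view_a + view_b) * 2

print(simulate_streaming(accesses, cache_slots=3))  # 12
print(simulate_streaming(accesses, cache_slots=6))  # 6
```

With three slots, every request in the back-and-forth pan misses because each view evicts the other; doubling the slots halves the misses, but only by reserving twice the memory for cached textures.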

In the video embedded on this page, you'll see that there have been iterative improvements to GPU and CPU utilisation, but make no mistake - The Last of Us Part 1 is still very heavy for PC users. Looking at unlocked performance in the PS5 version's VRR mode, the console is 55 percent faster in a GPU-limited scenario than an RTX 2070 Super, a card that typically matches PS5 in most other rasterised games.
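Turned the other way round, and assuming the 55 percent figure refers to frame-rate in matched GPU-limited scenes, the RTX 2070 Super delivers only around 65 percent of the PS5's performance here:

$$
\frac{\text{fps}_{\text{RTX 2070 Super}}}{\text{fps}_{\text{PS5}}} = \frac{1}{1.55} \approx 0.645
$$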

On the CPU side, Naughty Dog is still heavily employing the CPU to carry out background streaming, which makes life very difficult for users on more modest mainstream processors, like the Ryzen 5 3600, for example. I'd love to see more work carried out here - such as the implementation of DirectStorage - but it's clear that for now at least, Naughty Dog's priorities lie elsewhere.

An area where there is good improvement - but at a cost - comes from the game's shader compilation strategy. In short, to avoid stutter, The Last of Us Part 1 has a lengthy compilation step at the beginning of the game. At launch, this was around 41 minutes on a Ryzen 5 3600. It's 25 minutes now for the core game, with an additional four minutes for the Left Behind DLC.
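To put those compilation times in proportion, here is a small worked calculation using only the figures above (Ryzen 5 3600). The article doesn't say whether the 41-minute launch figure included Left Behind, so both comparisons are shown: core game only, and core game plus the DLC pass. The helper name `percent_reduction` is illustrative.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage drop going from `before` to `after`."""
    return (before - after) / before * 100


launch_minutes = 41  # launch build, Ryzen 5 3600
core_minutes = 25    # current build, core game only
dlc_minutes = 4      # additional compilation for Left Behind

total_minutes = core_minutes + dlc_minutes

print(round(percent_reduction(launch_minutes, core_minutes), 1))   # 39.0
print(round(percent_reduction(launch_minutes, total_minutes), 1))  # 29.3
```

Either way, the wait is substantially shorter, but even the best case still leaves close to half an hour of compilation before play on this CPU.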

That's good news, except that my playthrough suggests that the compilation step is incomplete - I'm witnessing obvious shader compilation stutter that was not there before. We know this is the problem because it disappears on a second playthrough, then returns when I nuke the GPU drivers and shader caches. Hopefully this can be sorted once and for all, as I simply shouldn't expect to see stuttering problems like this on a major triple-A release from a studio of Naughty Dog's pedigree.

Other good news? The game's egregious loading times have improved. At launch, just starting the game from the main menu incurred a near-minute-long load on a Ryzen 5 3600 with a 3.5GB/s NVMe drive. That was excessive in an era when games like Marvel's Spider-Man on PC move from menu to game in just six seconds. With the latest 1.05 patch, that initial load is now 30 seconds. The improvement carries over to in-game loads: for example, skipping a cutscene and loading the next chapter took nearly 27 seconds when shown off in our original coverage of the game. That same load now completes in just under 12 seconds on the same hardware at the same settings.
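Expressed as speed-ups, the figures above work out to roughly a halving of load times. This sketch uses 60 seconds as an approximation of the "near-minute" launch load and 12 seconds as an upper bound for "just under 12 seconds", so the chapter-load ratio is a lower bound; the helper name `speedup` is illustrative.

```python
def speedup(launch_s: float, patched_s: float) -> float:
    """How many times faster the patched load is than the launch load."""
    return launch_s / patched_s


# Seconds on a Ryzen 5 3600 with a 3.5GB/s NVMe drive.
# 60 approximates the "near-minute" launch figure; 12 is an upper bound.
loads = {
    "menu to game": (60, 30),
    "skip cutscene, load next chapter": (27, 12),
}

for name, (launch_s, patched_s) in loads.items():
    print(f"{name}: {speedup(launch_s, patched_s):.2f}x faster")

# Remaining gap against Marvel's Spider-Man's six-second menu-to-game load.
print(f"still {30 / 6:.0f}x longer than Spider-Man")
```

This prints `2.00x` and `2.25x` for the two loads, and shows the menu-to-game load is still five times longer than Spider-Man's.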

The Last of Us Part 1 is in better shape then, but still far from the optimal experience I was hoping for, and other issues still need addressing. Both DLSS and FSR2 affect shadow map quality, which shouldn't be happening. And of course, there are still bugs to contend with: in my playthrough, an enemy died and the key required to progress spawned under his body, meaning I couldn't pick it up. There's a lingering sense that PC users remain second-class citizens, putting up with issues Naughty Dog would never foist upon its console audience.

Ultimately though, The Last of Us Part 1 on PC is in a better state than it was at launch. Texture quality is much improved, a good experience is possible on 8GB GPUs and the game runs a little better overall. However, many issues remain and new ones have been introduced. If Naughty Dog and Iron Galaxy can further ease the CPU limitations caused by background streaming, improve GPU performance and completely eliminate shader compilation stutter, the port would be in a good position. But right now at least, I still think it needs a good deal more work.