The Last of Us Part 1 on PC: what we think so far

Performance problems across the board make this one to miss for now.

The Last of Us Part 1 on PC puts us in a somewhat difficult situation at Digital Foundry. How can you produce a technical review for a game that is essentially unfinished and - at the time of writing - has already received two hotfixes? We're told that a further patch is coming next week that should have more significant improvements, so the best we can provide is a snapshot of how the game looks right now, based on testing conducted last Friday. We'll return to the game if there's a noticeable improvement, but in the short term, we've got to move on to other projects.

Our snapshot - taken on Friday, March 31st - is embedded on the page below, taking the form of a limited playthrough of the game carried out on three systems. At the forefront sits the game as it plays on what is close to best-of-the-best PC hardware. That's my own test kit, featuring a Core i9 12900K, 6000MT/s DDR5 and Nvidia's RTX 4090. Alex Battaglia plays through simultaneously using a more mainstream-level PC system based on a Ryzen 5 3600, with 3200MT/s DDR4 and an Nvidia RTX 2070 Super. Meanwhile, sitting pretty on PlayStation 5 in performance mode is our #FriendAndColleague, John Linneman.

We've got an hour of real-time comparisons, CPU tests, GPU analysis and much more in the embedded video below, but what I'm going to do in this piece is attempt to summarise where the port stands right now and what needs to happen to get the code into shape, comparable with some of Sony's most impressive ports - including Marvel's Spider-Man and Days Gone.

What's clear is that there is a long road ahead because there's the sense right now that the PC version is effectively a beta - that is, feature complete but in need of a profound level of optimisation and bug-fixing. The key problem here is that while the game basically works, the hardware requirement is unexpectedly large - something that is explainable (though perhaps not justified) in some areas, while a complete mystery in others.

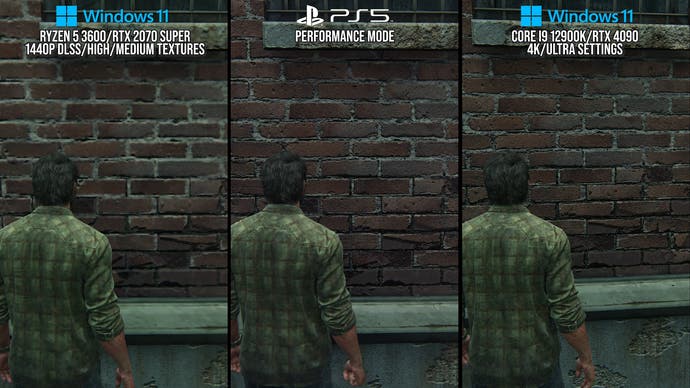

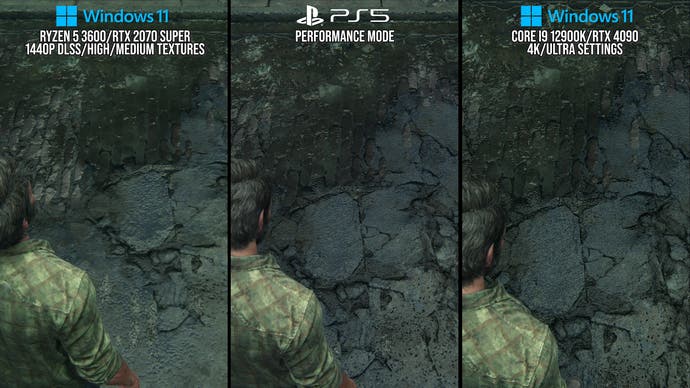

We'll start with memory management, where The Last of Us Part 1 drastically falls short. This is a game rich in high quality artwork but how those assets are streamed from system to video memory is clearly sub-optimal. The big takeaway from our simultaneous playthrough is the extent to which owners of 8GB graphics cards are disadvantaged by this conversion. The high quality texture option is broadly equivalent to the PS5 version and requires around 10-11GB of VRAM depending on the surrounding settings. However, medium is drastically cut down in environment artwork quality to the point where the game almost looks as if it's been vandalised, while the low setting offers only a broad approximation of the art.

Yes, we expect VRAM requirements to rise as we shift away from cross-gen development (TLOU Part 1 is a PS5 exclusive, built around a console with around 12.5GB of memory available to developers) but the bottom line is that 8GB is the sweet spot for the GPU market right now and the results for so many users will disappoint. Ultra textures require even more VRAM, but the end result is barely any different to the high setting, which in turn is very close indeed to the PS5 experience. Looking to use high textures on an 8GB card? You can do it, but expect a highly compromised experience plagued with stutter as assets stream back and forth between VRAM and system memory.

The most obviously noticeable issue with the port can be overcome simply by possessing a GPU that has 10-11GB of memory. The RTX 3070 8GB and RTX 2080 Ti 11GB are broadly comparable in performance, but the 2080 Ti produces a far superior experience simply because the art in the game is displayed the way it was intended - the high setting works without issue. However, there's every reason you may still experience poor results, because the port's demands on the CPU are frankly astonishing.

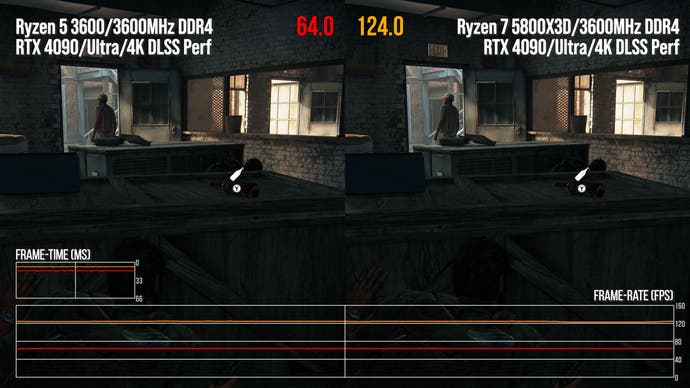

Having tested scores of ports, we're pretty confident at this point that the mainstream Ryzen 5 3600 performs in a similar ballpark to the PS5's CPU. However, the PC version of The Last of Us is especially onerous on your processor, saturating all cores in general play. On the one hand, maxing out the CPU is a good thing when compared to titles like The Callisto Protocol and Gotham Knights, which leave a lot of resources untapped. However, even with blanket utilisation of resources, TLOU still has profound CPU limitations, meaning that even with a reasonably balanced system, you can easily end up CPU-bound - and that's before background streaming (loading of assets) is added to the load.

The Last of Us Part 1 works its way around loading screens by streaming in data in the background as you play, but on the PC version, this adds further strain to an already over-burdened processor. In the case of the Ryzen 5 3600, this can result in a hit to frame-rate of anything from 10 to 20fps. The GPU requirements are onerous too. PlayStation 5 seems to be broadly equivalent in terms of rasterisation performance to something like an RTX 2070 Super - the GPU used by Alex in our testing - but attempting to get similar performance to the PS5 at the same 1440p resolution is not possible. Thankfully PC owners can lean into DLSS or FSR2 upscaling, but the bottom line is that you'll need a significantly more powerful graphics card even to match console output.

So, to recap - you'll need a GPU with a lot of VRAM to match the console experience visually, while a far more powerful CPU is needed to match PS5 and consistently deliver 60fps (we found that the Core i9 12900K and Ryzen 7 5800X3D got the job done with headroom to spare). Graphics-wise, DLSS and FSR2 can help with the heavy lifting, but you're going to need proportionately higher graphics performance than expected to deliver the console experience.

That's why in the video above, you'll find that it's only the high-spec rig costing untold thousands that actually scales beyond the console experience - and even then, the gains aren't exactly dramatic. There's not a huge degree of difference between the high and ultra experience, while the enormous CPU requirements mean that at its worst, you're still well off the 100-120fps required for a good high frame-rate experience on 120Hz+ displays. You'll also note in our playthrough that at one point, my high-end system actually seemed to be missing features enjoyed on the other two streams. And that's the next challenge this port needs to address: bugs. Social media is highlighting many issues, though our testing showed just a crash and missing lighting at ultra.

Ultimately, this PC port is a profound disappointment and it's hard to believe that the developers and publishers were unaware that there were big issues before putting this out for sale. Hotfixes have already come out addressing some issues (though we've yet to see any dramatic changes, bar a modest reduction in shader compilation times and loading times) but we'd venture to suggest that there are foundational, systemic problems that need looking at - and that's going to require a lot of time. Firstly, the texture management issue is of urgent concern because right now, only a minority of PC users will actually get to see this gorgeous game in the way it was intended to be seen. It can be done - the Marvel's Spider-Man ports prove it.

Secondly, addressing the exceptionally high CPU requirement is also a priority. It seems likely that the hardware decompression of assets used on PS5 is replaced with an entirely CPU-based system on the PC port. This would explain the remarkably long loading times and a massively prolonged shader compilation step on first boot, not to mention the depressed performance in-game when new areas stream in as you play. Can PS5-like loading be achieved on PC without wiping out CPU performance? Again, Marvel's Spider-Man suggests it can be done.

The bottom line is that the requirement for a top-tier CPU simply to achieve a consistent 60 frames per second puts even a console-like experience out of reach for the majority of PC users. Finally, GPU utilisation is also very, very high to a degree we find hard to explain and we shouldn't need to rely on upscaling to achieve 60fps on graphics hardware like an RTX 2070 Super.

There's a lot of work to be done then - and it's hard to imagine that there are any easy fixes that can be delivered in a short amount of time, but let's see how the first big patch looks. Right now at least, this port is hard to recommend. Hard, but not impossible, because it is possible to get a perfectly good experience - it's just that the multiple requirements to do so limit smooth, attractive gameplay to users with a high-end CPU and a performant GPU with a lot of VRAM. If your kit fits the bill, you're good to go unless you encounter problematic bugs. For everyone else though, it would be wise to pass on this release for now.