Why VRR is not a magic bullet for fixing poor performance

Variable refresh rate is a game-changer - but it has its limits.

It began in 2013 with the arrival of Nvidia G-Sync - the first form of variable refresh rate (VRR) display technology. Rather than attempt to synchronise, or not synchronise, GPU output with your screen, the host hardware took control - kicking off a new display refresh when the GPU was ready with a new frame. V-sync judder was gone and screen-tearing would (by and large!) disappear. FreeSync and HDMI VRR would follow, but essentially they all did the same thing - smoothing off variable performance levels and delivering a superior gameplay experience. But let's be clear: VRR is not a cure-all. It's not a saviour for poor game performance. It has its limits, and it's important to understand them. In the process, we'll gain a better understanding of performance more generally - and why frame-rate isn't that important compared to other, more granular metrics.

Let's talk about VRR basics. Displays have a native refresh rate - whether it's 60Hz, 120Hz, 165Hz or whatever. Without VRR you have limited options for smooth, consistent play. First of all, there's the idea of matching game frame-rate to the screen's refresh rate. Every display refresh gets a new frame. The most popular example of this is the 'locked 60 frames per second' concept, where a new frame is generated every 16.7ms to match the refresh rate of a 60Hz screen - a truly tricky thing to deliver on consoles while maxing out their capabilities.

Secondly, you can ask your hardware to offer a clean divider of the refresh rate - the classic example being a 30fps game running on a 60Hz screen. In this case, every other refresh receives a new frame from the source hardware. There are issues with this, such as ghosting, but it remains the classic compromise for maintaining consistency when a game is unable to match the refresh rate.
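As a rough illustration of what a "clean divider" means in practice, this minimal Python sketch lists the frame-rates that divide evenly into a given refresh rate, along with how long each frame stays on screen. The function name `clean_divider_rates` and the `max_divisor` parameter are made up for this example and are not part of any real API.

```python
from typing import List


def clean_divider_rates(refresh_hz: int, max_divisor: int = 4) -> List[float]:
    """Frame-rates where every frame is held for a whole number of refreshes.

    Hypothetical helper for illustration: divisor 1 is the 'locked' case,
    divisor 2 is the classic 30fps-on-60Hz compromise, and so on.
    """
    return [refresh_hz / n for n in range(1, max_divisor + 1)]


for hz in (60, 120):
    for n, fps in enumerate(clean_divider_rates(hz), start=1):
        # Each frame persists for n refreshes, so 1000 / fps milliseconds.
        print(f"{hz}Hz / {n} = {fps:.0f}fps ({1000 / fps:.1f}ms per frame)")
```

On a 60Hz screen this gives 60, 30, 20 and 15fps. A 120Hz screen adds a 40fps option, with each frame held for three refreshes.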

Finally, there's another, less desirable option: to completely disregard the refresh rate of the display and to just pump out as many frames as you can by turning off v-sync. Because new frames are delivered while the screen is in the process of refreshing, you get screen-tearing - partial images presented on any given display refresh.

Variable refresh rate (VRR) solves all of these problems as the display gives up control of its refresh rate to the GPU. When a new frame completes rendering on the GPU, it triggers a refresh on the monitor. Assuming the time taken to calculate the frame is similar in duration to the last one, you can effectively run at arbitrary frame-rates and the perception of the user is of smooth, consistent, tear-free gaming without being married to any particular performance target.

I say "tear-free gaming" - but there are limitations and caveats. Every display has a VRR range: 48Hz to 60Hz or 48Hz to 120Hz are common examples. If game performance is above or below the range, things get a little tricky. If game performance exceeds the top-end, screen-tearing returns - so activating v-sync at this point limits frame-rates to the top-end of the display's range. If a game dips below the lower bounds, LFC (or low frame-rate compensation) kicks in, showing the existing frame two, three or more times to keep the refresh rate within the VRR window.
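To make the LFC idea concrete, here is a minimal Python sketch of how a frame multiplier could be chosen. It is an illustration under simple assumptions, not how any particular driver or display implements it: the function name `lfc_multiplier` is hypothetical, and the logic simply finds the smallest whole-number repeat count that lifts the effective refresh rate back into the VRR window.

```python
import math
from typing import Optional


def lfc_multiplier(fps: float, vrr_min_hz: float, vrr_max_hz: float) -> Optional[int]:
    """Smallest number of times each frame is shown so that fps * n fits the window.

    Returns 1 if the game is already inside the VRR range, and None if the
    game is above the range or no whole multiple fits (assumed behaviour for
    this sketch; real hardware may handle these cases differently).
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps > vrr_max_hz:
        return None  # above the window: needs a cap or v-sync instead
    n = max(1, math.ceil(vrr_min_hz / fps))
    return n if fps * n <= vrr_max_hz else None


print(lfc_multiplier(40, 48, 120))  # 2 -> panel refreshes at 80Hz
print(lfc_multiplier(20, 48, 120))  # 3 -> panel refreshes at 60Hz
print(lfc_multiplier(40, 48, 60))   # None -> doubling to 80Hz overshoots 60Hz
```

The last case shows why a narrow window is awkward for this approach: with 48Hz to 60Hz, doubling anything between 30fps and 48fps lands above the top of the range.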

If LFC is not supported - as seen on 60Hz system-level VRR on PlayStation 5 - v-sync returns and so does the judder, which is not great. And even if LFC is working, you may notice ghosting at low frame-rates due to the same image being shown across multiple screen refreshes. Other issues? Some displays exhibit flicker when rapidly moving through variable refresh rates. Ultimately, VRR works best when performance is capped to remain below the upper bounds of the VRR range, with game optimisation ensuring that LFC is a method of last resort.

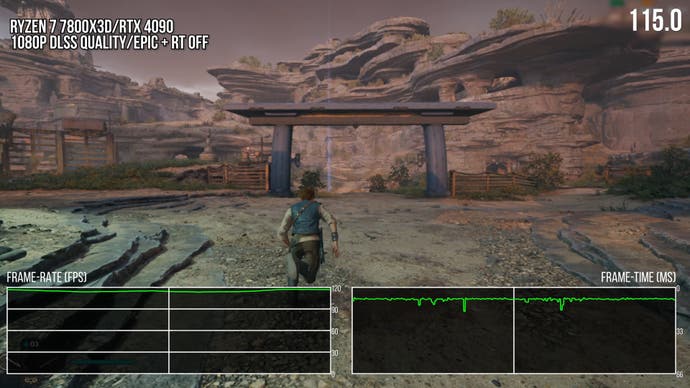

And even then, you may face issues because frame-rate and refresh rate are broad, umbrella-like terms that don't do a particularly great job of encompassing the granular variability of game performance. Let's put it this way - in theory, a 30fps game delivers a new frame every 33.3ms for consistent gameplay. However, 29 frames at 16.7ms followed by a massive 518ms stutter is still 30fps - but it presents like 60fps with continual pockets of stuttering. That's an exaggerated example perhaps, but consider this: if a screen's VRR window is 48Hz to 60Hz, VRR only works with frames delivered within a 16.7ms to 20.8ms window. That's actually pretty tight - and suffice to say, it's very, very easy for a traversal stutter or a shader compilation stutter to persist for longer than 20.8ms. Much longer. In these scenarios, VRR cannot deliver good, smooth, consistent performance. VRR cannot 'fix' the kind of egregious stuttering we often talk about in Digital Foundry articles and videos.
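The exaggerated example is easy to check with a few lines of Python. This sketch uses the frame-times from the paragraph above (29 frames at 16.7ms plus one 518ms stutter) and a 48Hz to 60Hz VRR window; the helper `vrr_frame_time_window` is a hypothetical name introduced just for this illustration.

```python
from typing import List, Tuple


def vrr_frame_time_window(min_hz: float, max_hz: float) -> Tuple[float, float]:
    """Convert a VRR range in Hz to (shortest, longest) frame-time in ms."""
    return 1000.0 / max_hz, 1000.0 / min_hz


# One second of gameplay: 29 fast frames, then a single huge stutter.
frame_times_ms: List[float] = [16.7] * 29 + [518.0]

total_seconds = sum(frame_times_ms) / 1000.0
average_fps = len(frame_times_ms) / total_seconds

shortest, longest = vrr_frame_time_window(48, 60)
outside_window = [t for t in frame_times_ms if not shortest <= t <= longest]

print(f"Average: {average_fps:.1f}fps")                # roughly 30fps
print(f"VRR window: {shortest:.1f}ms to {longest:.1f}ms")
print(f"Frames VRR cannot smooth: {outside_window}")   # the 518ms stutter
```

The average comes out at roughly 30fps, yet the only frame that matters to the player is the one that sits far outside the window - which is exactly what an average hides.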

Displays with 48Hz to 60Hz variable refresh rate windows dominated during the early days of VRR technology, but these days we're at 120Hz and upwards, to 240Hz and beyond. And while GPU and even CPU performance can provide the kind of brute force horsepower to deliver such high frame-rates, this only serves to make those massive stutters stand out even more: the higher your performance level, the more noticeable the stuttering. We often see stutters in the 30ms range and upwards. Running flat out at 120Hz, you're at 8.3ms per frame. At 240Hz, it's 4.17ms per frame. You can't fail to notice those longer frames.
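One way to picture why the same stutter stands out more at higher refresh rates is to express it as a multiple of the per-frame budget. The following Python sketch does that for a 30ms stutter; both function names are hypothetical and the calculation is simple division, not a perceptual model.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per frame when running flat out at the refresh rate."""
    return 1000.0 / refresh_hz


def stutter_in_refreshes(stutter_ms: float, refresh_hz: float) -> float:
    """How many refresh intervals a single long frame spans."""
    return stutter_ms / frame_budget_ms(refresh_hz)


for hz in (60, 120, 240):
    print(
        f"{hz}Hz: {frame_budget_ms(hz):.2f}ms budget, "
        f"30ms stutter spans {stutter_in_refreshes(30, hz):.1f} refresh intervals"
    )
```

A 30ms frame is under two refresh intervals at 60Hz, but 3.6 at 120Hz and 7.2 at 240Hz.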

Stuttering can also manifest in less problematic - but still noticeable - ways. If you're running nicely in a 200-240fps window, that's a variation of around 0.8ms per frame. 160-240fps? That'll be a 2.1ms variation. Despite what looks like a vast chasm in frame-rate, VRR can still work well. However, variations can widen and reach a point where they are noticeable, even if you're remaining within the variable refresh rate window of the screen. It's especially noticeable if you're constantly shifting between frame-times, as it'll present in an inconsistent, stuttering manner. This can happen with animation errors, or if the CPU is maxed out - often a major cause of stutter.
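The figures above come from converting each end of a frame-rate range into a frame-time and taking the difference. Here's a minimal Python sketch of that arithmetic; `frame_time_spread_ms` is a name invented for this example, and the 40-60fps comparison is added purely for illustration.

```python
def frame_time_spread_ms(low_fps: float, high_fps: float) -> float:
    """Difference in per-frame time between the slow and fast end of a range."""
    return 1000.0 / low_fps - 1000.0 / high_fps


for low, high in ((200, 240), (160, 240), (40, 60)):
    spread = frame_time_spread_ms(low, high)
    print(f"{low}-{high}fps: {spread:.2f}ms variation per frame")
```

A 40fps gap at the top end (200-240fps) is worth about 0.83ms, while a 20fps gap lower down (40-60fps) is worth about 8.33ms, which is why frame-time describes the swing better than the frame-rate numbers do.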

I talk about various examples in the embedded video but the bottom line is that this is why I disagree with the idea that VRR can "fix a game's performance". It has limits. If a game has a frame-rate that is not too variable and frame-times that are very consistent and only gradually change, VRR will make it look smoother than it would be under a fixed refresh rate. A great example of that is in Dragon's Dogma 2 with its latest patch, where console frame-times are generally consistent and the low variability of the frame-rate generally keeps it smooth looking with VRR, up against an unsatisfactory experience on a non-VRR screen.

However, VRR cannot fix a game that has issues with frame-times or dramatic swings in frame-rate depending on the scene. Star Wars Jedi: Survivor and numerous UE5 games spring to mind along with the Dead Space remake on PC. The utility of VRR lessens dramatically here as the technology cannot smooth over relatively large stuttering effects.

So what does this all mean? For me, this means VRR is not a magic bullet. We cannot excuse a game's issues now that VRR screens are becoming ever more common. VRR cannot fix a game. However, VRR remains a crucial technology in that you no longer need to doggedly pursue a fixed performance target for consistent gaming - and in turn this radically diminishes the importance of frame-rate as a metric. The vast majority of PC displays feature VRR technology, so I'd say that frame-time consistency along with subjective descriptions of image persistence matter far more when it comes to characterising game performance. The challenge is in establishing metrics that are readily understandable by an audience that has accepted FPS as the default measurement of performance for decades - but this is important enough for us to put considerable time and effort into getting right, and change has to come, sooner or later.