Kinect Hacking: The Story So Far

From console hit to homebrew platform.

It's not been a particularly good week for hackers, with the process of opening up a hardware platform under the spotlight for its links to piracy. However, the recent reverse-engineering of Microsoft's Kinect has effectively showcased how hacking can lead to an outpouring of homebrew innovation without any impact whatsoever on the livelihoods of those working in the games business.

The Kinect hardware was reverse-engineered to work on PC within days of its release, with hacker Hector Martin (a member of the Fail0verflow team now being targeted by Sony litigation) revealing that Microsoft had done nothing to protect the hardware from running on any platform that possesses the requisite USB connection (a PlayStation 3 hack is surely imminent). Kinect was effectively an open platform from day one, with the creation of interfacing drivers the only task facing the "hackers".

Microsoft has acknowledged its own plans to bring Kinect to PC, but the homebrew credentials of the platform received a major shot in the arm when the technological architects of the core hardware - Israel-based PrimeSense - released official Kinect drivers along with an integration path to their own NITE middleware system: the so-called OpenNI initiative. At this point, it could be argued that Kinect "hacking" as such had become obsolete in favour of a new platform with public tools provided by the originators of the technology.

The provided tools certainly gave a massive boost to the community. The NITE software, for example, interprets the raw Kinect depth data and allows for skeletal recognition, amongst other things. In the space of mere weeks, Kinect operating on PC went from being a somewhat basic hack to something much, much more - the tools are there for homebrew developers to create their own "natural user interface" applications and games.

One of the first truly impressive demos to showcase the potential of the tech was Oliver Kreylos' 3D video camera demo. In this groundbreaking experiment he combined the image from the conventional RGB camera with the depth data to produce a depth-based 3D webcam that could also be used to accurately measure objects. Just about the only limitation we could see was that there was only one set of depth data, so an object behind another could not be seen. Despite misgivings that it wouldn't work, Kreylos extended his hack to work with two Kinect sensors, and the notion of an almost fully virtualised 3D space became a reality.
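To make the idea behind a depth-based 3D webcam a little more concrete, here is a minimal sketch, not Kreylos' actual code. It assumes a simple pinhole camera model with made-up intrinsics (`FX`, `FY`, `CX`, `CY` are placeholders, not calibrated Kinect values) and assumes the RGB image has already been registered to the depth image, which in reality requires calibrating the offset between the two cameras. Each depth pixel is back-projected into a 3D point, which is what makes measuring objects possible, and a rigid transform (rotation plus translation, assumed known from calibration) is one way a second sensor's points could be brought into the first sensor's frame to fill in occluded surfaces.

```python
import math

# Assumed intrinsics for a 640x480 depth image. These are illustrative
# placeholders, not calibrated Kinect values.
FX = FY = 580.0        # focal length in pixels (assumption)
CX, CY = 320.0, 240.0  # principal point (assumption)


def depth_pixel_to_point(u, v, depth_m, fx=FX, fy=FY, cx=CX, cy=CY):
    """Back-project depth pixel (u, v) into camera-space metres with a pinhole model."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def colored_point_cloud(depth, rgb):
    """Pair each valid depth sample with its colour.

    depth: rows of distances in metres, 0 meaning "no reading".
    rgb:   rows of (r, g, b) tuples, assumed already registered to the depth image.
    """
    cloud = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d > 0:
                cloud.append((depth_pixel_to_point(u, v, d), rgb[v][u]))
    return cloud


def transform(point, rotation, translation):
    """Apply a rigid transform: rotation is a 3x3 nested list, translation a 3-tuple."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def merge_clouds(cloud_a, cloud_b, rotation, translation):
    """Express sensor B's points in sensor A's frame and combine the two clouds."""
    return cloud_a + [(transform(p, rotation, translation), c) for p, c in cloud_b]


def measure(p, q):
    """Straight-line distance in metres between two back-projected points."""
    return math.dist(p, q)


if __name__ == "__main__":
    left_edge = depth_pixel_to_point(290, 240, 2.0)
    right_edge = depth_pixel_to_point(350, 240, 2.0)
    print(f"object width: {measure(left_edge, right_edge):.3f} m")
```

With these assumed intrinsics, an object spanning 60 pixels at a distance of 2 m works out at roughly 0.207 m wide. The same 60 pixels at 4 m would be twice as wide, which is exactly the information a plain webcam image cannot supply.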

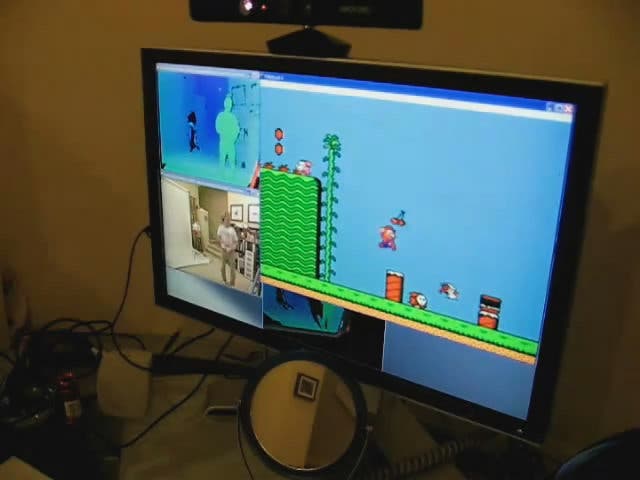

With the release of the official Kinect drivers and the OpenNI initiative, it wasn't long before homebrew coders started to experiment with the camera's suitability for integration into existing PC titles. To that end, FAAST (Flexible Action and Articulated Skeleton Toolkit) was released - a software suite that allows just about anyone with a small amount of technical knowledge to remap Kinect skeletal data to the more traditional mouse and keyboard inputs.
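The basic principle of remapping skeletal data to keyboard input can be illustrated with a short sketch. This does not reproduce FAAST's configuration format or internals. The joint names, the axis convention (x to the player's right, y up, z towards the sensor, in metres) and the thresholds are all hypothetical, and actually injecting keystrokes into the operating system is platform-specific and left out. Each rule compares one joint's position against a reference joint, and the mapper reports only changes in state, so a held pose produces one key press and one release rather than a stream of repeats.

```python
from dataclasses import dataclass

# Assumed skeleton convention: joint name -> (x, y, z) in metres, with x to the
# player's right, y up and z pointing towards the sensor. Joint names are hypothetical.


@dataclass(frozen=True)
class Rule:
    joint: str          # joint being watched, e.g. "right_hand"
    reference: str      # joint the offset is measured from, e.g. "head"
    axis: int           # 0 = x, 1 = y, 2 = z
    min_offset: float   # offset (metres) at or beyond which the rule fires
    key: str            # keyboard key to hold while the rule fires


class GestureMapper:
    """Turn per-frame skeleton data into key press/release events."""

    def __init__(self, rules):
        self.rules = rules
        self.held = set()

    def update(self, joints):
        active = set()
        for rule in self.rules:
            if rule.joint in joints and rule.reference in joints:
                offset = joints[rule.joint][rule.axis] - joints[rule.reference][rule.axis]
                if offset >= rule.min_offset:
                    active.add(rule.key)
        # Only report changes, the way a real key goes down once and up once.
        events = [("press", k) for k in sorted(active - self.held)]
        events += [("release", k) for k in sorted(self.held - active)]
        self.held = active
        return events


if __name__ == "__main__":
    mapper = GestureMapper([
        Rule("right_hand", "head", 1, 0.10, "space"),  # hand above head: jump
        Rule("torso", "hip_centre", 2, 0.15, "w"),     # lean towards sensor: walk
    ])
    print(mapper.update({"head": (0.0, 1.6, 0.0), "right_hand": (0.3, 1.8, 0.0)}))
    print(mapper.update({"head": (0.0, 1.6, 0.0), "right_hand": (0.3, 1.2, 0.0)}))
```

Measuring offsets relative to another joint, rather than using absolute positions, means the same rule works regardless of where the player stands or how tall they are. It also shows why this approach is limited: every pose has to pass through a skeleton-tracking stage and a threshold before the game sees a key press at all.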

Already we have witnessed a range of games adapted: we've seen Super Mario Bros running with Kinect functionality and World of Warcraft controlled via a puzzling and rather limited set of gestures. Demize2010 took the concept a stage further, combining Kinect and Wiimote PC implementations to make shooting games more playable.

Of all the Kinect hacks we've seen so far, the gameplay implementations are perhaps the weakest. Games are so intrinsically built around their control schemes that shoehorning in a completely alien system based on body-tracking or gestures just doesn't work. Only so much can be achieved by directly mapping Kinect motion data onto existing controls, and the lag issues are painfully apparent.

The demos also serve to illustrate a couple of other things. Firstly, games really need to be built from the ground up around the Kinect hardware's capabilities - and of course, its limitations. Secondly, the accomplishments of Xbox 360 developers in getting their games to work so well may be something that we have taken for granted (though perhaps sometimes their code offers too much of a helping hand).

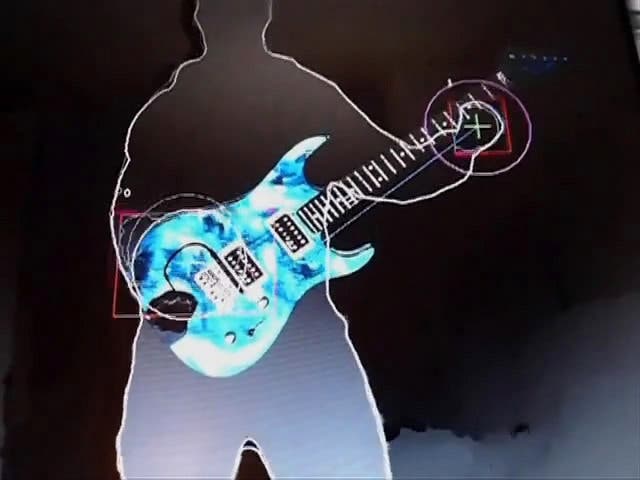

Where the homebrew community has scored some massive wins, however, is in experimentation with virtual musical instruments.

The Kinect-powered PC recreation of the giant floor piano from the Tom Hanks film Big rightly gained massive plaudits for its sheer ingenuity and crowd-pleasing effect, but another, more low-key demo shows us how a homebrew concept could actually end up in a forthcoming game. This Air Guitar demo is seriously impressive stuff.

Music and dance titles have an inherent advantage over other styles of gameplay. Since the motions you're being asked to replicate can be anticipated, lag can be completely factored out (as it is in Dance Central, for example), so developers have all the time in the world to process your movements accurately. The only real question marks concern Kinect's sensitivity and accuracy in measuring finger positions and the orientation of the player's hands, but as the Eurogamer news team discovered, Microsoft is looking to increase Kinect accuracy by refining USB throughput. Currently, Kinect titles use only a 320x240 depth map, whereas the hardware itself can deliver 640x480, four times as many depth samples.
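Why anticipated motion makes lag a non-issue can be shown with a minimal sketch. This is not how Dance Central works internally; it simply assumes the game knows when each move is due, that each detected gesture arrives with a timestamp, and that the pipeline latency is a known constant (`latency_s` and `window_s` below are illustrative values). Because the judgement is made against the known schedule, the game can subtract the latency after the fact instead of needing to react instantly.

```python
def judge_moves(expected, detected, latency_s=0.1, window_s=0.15):
    """Score each expected move time against latency-corrected detections.

    expected:  times (seconds) at which the choreography calls for a move.
    detected:  times (seconds) at which the tracker reported the move.
    latency_s: assumed constant delay between the player moving and detection.
    window_s:  how far (seconds) a corrected detection may be from the beat.
    """
    corrected = sorted(d - latency_s for d in detected)
    used = [False] * len(corrected)
    results = []
    for t in expected:
        # Greedily take the closest unused detection for this beat.
        best_i, best_err = None, None
        for i, c in enumerate(corrected):
            if used[i]:
                continue
            err = abs(c - t)
            if best_err is None or err < best_err:
                best_i, best_err = i, err
        if best_i is not None and best_err <= window_s:
            used[best_i] = True
            results.append((t, "hit", round(best_err, 3)))
        else:
            results.append((t, "miss", None))
    return results


if __name__ == "__main__":
    for beat, verdict, err in judge_moves([1.0, 2.0, 3.0], [1.12, 2.35, 3.08]):
        print(beat, verdict, err)
```

In the example, the first and third detections land within a few hundredths of a second of their beats once the assumed 0.1 s of latency is removed, while the second is still 0.25 s off and is scored as a miss. The greedy matching is a simplification; a real game would likely restrict each detection to the move it was expecting.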

Away from gameplay implementations, the concept work surrounding the release of the Kinect drivers has thrown up some great practical, if rather niche, uses for the tech. As we discussed in our initial blog on the open source drivers, the camera has a great deal of value to amateur robotics enthusiasts.

Attaching a camera to a robot can give you a remote "robot's eye view", but very little real-world data can be derived from a basic image like this. Sony's old AIBO robotic dogs incorporated additional sensors in the snout to stop the mutt from colliding with things and, indeed, falling down stairs. Adding a depth camera like Kinect allows robots to detect obstacles at range and avoid them much more naturally. The robots can also lock onto humans and accept gestures as commands, or even use skeletal data to mimic body movement.
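As a rough illustration of why depth data is so useful for robots, here is a minimal obstacle-avoidance sketch. It is not taken from any particular robotics project: it assumes a depth image given as rows of distances in metres with 0 meaning "no reading", and the 0.8 m stopping distance is an arbitrary example value. The image is split into left, centre and right thirds, the nearest reading in each is found, and the robot drives forward only while the centre is clear.

```python
def nearest_in_columns(depth, c0, c1):
    """Nearest valid reading (metres) in columns c0..c1-1; 0.0 if there is none."""
    readings = [row[u] for row in depth for u in range(c0, c1) if row[u] > 0]
    # No data is treated as blocked: the sensor returns nothing for surfaces
    # inside its minimum range, so an empty region may hide a close obstacle.
    return min(readings) if readings else 0.0


def steer(depth, stop_m=0.8):
    """Pick a movement command from a depth image (rows of metres, 0 = no reading)."""
    w = len(depth[0])
    left = nearest_in_columns(depth, 0, w // 3)
    centre = nearest_in_columns(depth, w // 3, 2 * w // 3)
    right = nearest_in_columns(depth, 2 * w // 3, w)
    if centre > stop_m:
        return "forward"
    if max(left, right) <= stop_m:
        return "stop"
    # Turn towards whichever side has more clearance.
    return "turn_left" if left > right else "turn_right"


if __name__ == "__main__":
    wall_ahead = [[3.0, 3.0, 0.5, 0.5, 1.0, 1.0] for _ in range(3)]
    print(steer(wall_ahead))
```

A plain camera image offers nothing comparable to the per-pixel distances used here. On a real robot you would probably also ignore the lower rows, where the floor itself shows up as a nearby surface.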

Over and above practical applications like this, there has also been a great deal of homebrew work on replicating something approaching the fabled Minority Report interface, or at least on manipulating objects by hand. We've seen demos that can track individual fingers, generating a multi-touch style effect that eclipses anything we've seen in terms of precision from Xbox 360 Kinect titles. So is there anything that Microsoft can actually learn from this area of the homebrew scene?
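We don't know exactly how the finger-tracking demos were built, but a common first step in depth-based hand interfaces is simply to treat whatever is closest to the sensor as the hand. The sketch below assumes exactly that: it takes the nearest valid reading (depth in metres, 0 meaning no data) and keeps every pixel within an assumed 10 cm slab behind it, returning those pixels and their centroid. Tracking that centroid frame to frame gives a single cursor; picking out individual fingers would need further contour analysis of the mask, which is beyond this sketch.

```python
def segment_hand(depth, slab_m=0.10):
    """Return (pixels, centroid) for the region nearest the sensor, or None.

    Assumes the hand is the closest thing to the camera and keeps every valid
    pixel within slab_m metres of the nearest reading.
    """
    valid = [(d, u, v) for v, row in enumerate(depth) for u, d in enumerate(row) if d > 0]
    if not valid:
        return None
    nearest = min(d for d, _, _ in valid)
    pixels = [(u, v) for d, u, v in valid if d <= nearest + slab_m]
    # The centroid (column, row) acts as a simple cursor position.
    cu = sum(u for u, _ in pixels) / len(pixels)
    cv = sum(v for _, v in pixels) / len(pixels)
    return pixels, (cu, cv)


if __name__ == "__main__":
    frame = [
        [1.5, 1.5, 1.5, 1.5],
        [1.5, 1.0, 1.05, 1.5],  # a hand held out in front of the body
        [1.5, 1.5, 1.5, 1.5],
    ]
    print(segment_hand(frame))
```

The assumption that the hand is the nearest object is what makes this cheap, and it is also its weakness: anything else closer to the sensor will be mistaken for the hand.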