God of War AA coming to PC/360?

Can new MLAA match Sony's quality?

Custom anti-aliasing techniques are becoming increasingly popular among leading games developers. Last week LucasArts talked to Digital Foundry about the new AA techniques used in the forthcoming Star Wars: The Force Unleashed II. But the yardstick remains the quality level set by Sony's morphological anti-aliasing (MLAA) tech as seen in the phenomenal God of War III. Also last week, AMD released its own GPU-based MLAA implementation for PC, and other developers are working on GPU solutions for Xbox 360. MLAA is going cross-platform.

Available now for the new Radeon HD 68x0 series of graphics cards (though available to 58x0 owners too via an unofficial hack), AMD's tech has received plenty of plaudits from the PC tech blogs, garnering particular praise for its excellent edge-smoothing capabilities along with the relatively unnoticeable impact on frame-rates. In good conditions MLAA can match the quality of 8x multi-sampling anti-aliasing (MSAA) but requires far less in the way of system resources. On the PS3, Sony's MLAA is parallelised over five SPUs and takes around 4ms of processing time - freeing up precious RSX resources.

AMD's solution has much in common with Sony's, but differs fundamentally in several respects. The fact that the God of War III MLAA runs on the SPUs has some very specific advantages - the Cell's satellite processors are far more flexible in terms of how they can be programmed, leading some to believe that GPU implementations will struggle to match the quality level.

More obvious to the end user, AMD's approach is a post-process filter that works through the entire completed frame, including the HUD and any on-screen text. This results in exactly the same kind of artifacting on text as seen with The Saboteur on PS3. As the MLAA algorithm works on the whole screen, it simply doesn't know the difference between a genuine edge and text, resulting in a noticeable impact on quality, along with occasional dot-crawl on HUD elements.

Artifacts can be minimised by running at higher resolutions, and the cards that the MLAA mode runs on should be able to cope with most - if not all - games at 1080p and higher anyway. However, the effects can never be eliminated with the current implementation. Sony's MLAA tech, in contrast, works on the frame before the HUD and text are added, producing a noticeably cleaner result.
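To make the ordering issue concrete, here is a minimal Python sketch. It is not AMD's or Sony's algorithm: `edge_blend` is a hypothetical, deliberately crude stand-in for MLAA that simply averages neighbouring pixels across any strong luminance step, and the tiny greyscale `scene` and `hud` grids are invented for illustration. All it aims to show is why filtering the finished frame touches HUD pixels, while filtering before the HUD is drawn leaves them alone.

```python
# Illustrative only: a crude luminance edge blend standing in for MLAA.
# Real MLAA classifies edge shapes and derives coverage-based blend weights.

def edge_blend(frame, threshold=0.5):
    """Average a pixel with its right-hand neighbour wherever the two
    differ by more than `threshold`. `frame` is a 2D list of greyscale
    values in the range 0.0 to 1.0."""
    out = [row[:] for row in frame]
    for y, row in enumerate(frame):
        for x in range(len(row) - 1):
            if abs(row[x] - row[x + 1]) > threshold:
                out[y][x] = 0.5 * (row[x] + row[x + 1])
    return out

def composite_hud(frame, hud):
    """Draw the HUD over the frame; None marks a transparent HUD pixel."""
    return [
        [s if h is None else h for s, h in zip(scene_row, hud_row)]
        for scene_row, hud_row in zip(frame, hud)
    ]

# A tiny made-up scene with a diagonal geometry edge
scene = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, 1.0],
]
# A single white "text" pixel in the bottom-left corner
hud = [
    [None, None, None, None],
    [1.0, None, None, None],
]

# Driver-level ordering: the filter sees the finished frame, HUD included
filtered_after_hud = edge_blend(composite_hud(scene, hud))

# In-engine ordering: filter the scene first, then draw the HUD on top
hud_after_filter = composite_hud(edge_blend(scene), hud)

print(filtered_after_hud[1][0])  # HUD pixel has been blended with the scene
print(hud_after_filter[1][0])    # HUD pixel is untouched
```

In the first ordering the filter cannot tell the text pixel from a geometry edge and softens it; in the second, the geometry edges still get smoothed but the HUD is composited afterwards at full strength.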

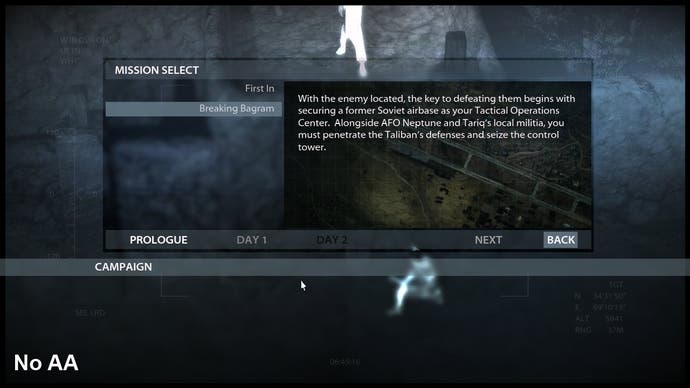

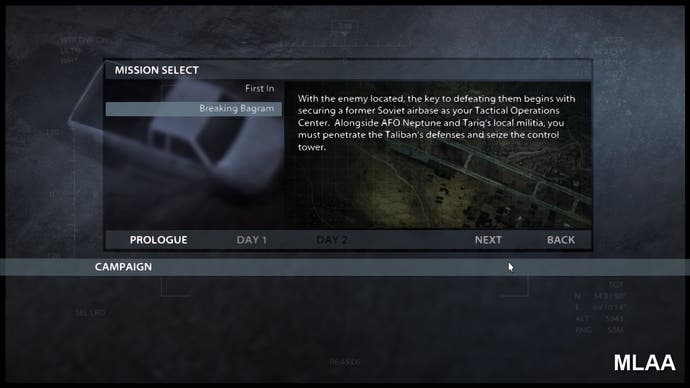

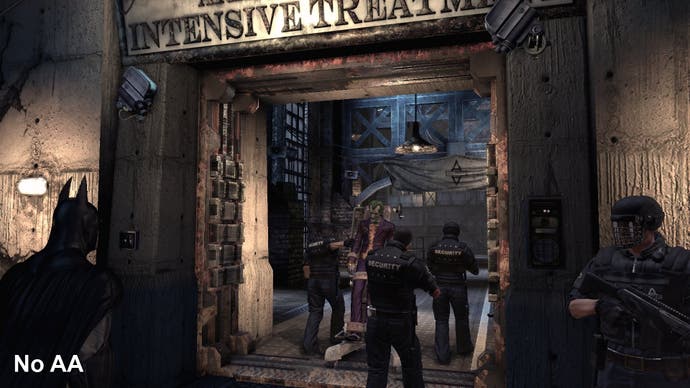

So just how good is the image quality from AMD's version? Images like this don't exactly inspire confidence, but screenshots dotted around the internet show plenty of promise. Here are a couple of comparison shots. Apparently, AMD's MLAA can't be picked up by the usual range of PC screen-grabbing tools, but that's no problem for us since we take our data losslessly, direct from the DVI port of the GPU, via our TrueHD capture card. What your monitor displays, our capture tech records.

Based on the quality of the shots, MLAA in this guise comes across phenomenally well, but having witnessed it in motion, there's a definite sense that the implementation is closer to the original Intel proof of concept than to Sony's refined version. We know the Intel approach well, having worked with a compiled version of the sample code and processed a number of different games with it.

At the time we were hugely impressed with the quality of the still shots, but the quality of the games in motion was far less impressive. DICE's rendering architect, Johan Andersson, shared his thoughts on the drawbacks of MLAA.

"On still pictures it looks amazing but on moving pictures it is more difficult as it is still just a post-process. So you get things like pixel popping when an anti-aliased line moves one pixel to the side instead of smoothly moving on a sub-pixel basis," he said.

"Another artifact, which was one of the most annoying is that aliasing on small-scale objects like alpha-tested fences can't (of course) be solved by this algorithm and quite often turns out to look worse as instead of getting small pixel-sized aliasing you get the same, but blurry and larger, aliasing which is often even more visible."

Andersson's comments, based on his own experiments with MLAA, appear to mirror closely the issues we see in AMD's tech. To illustrate, here's a 720p comparison of the PC versions of Danger Close's Medal of Honor (somewhat notorious for its "jaggies" and the inadequacies of its built-in AA option) and Rocksteady's Batman: Arkham Asylum.

In the Batman scene we can see both the good and bad of MLAA. Prominent foreground edges are effectively smoothed off, but far-off geometry exhibits exactly the kind of pixel-popping that Andersson warned about. The blur effect is constant regardless of depth, making sub-pixel edges actually much more prominent than they are when there is no anti-aliasing in effect.
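The pixel-popping Andersson describes can be reproduced in a toy one-dimensional example. The Python sketch below is our own illustration rather than anything taken from AMD, Intel or Sony: `point_sampled`, `coverage` and `post_blend` are hypothetical helpers, and the fixed 75/25 blend is an arbitrary stand-in for a post-process filter. An edge slides right in quarter-pixel steps; the renderer only samples pixel centres, so the post-process filter never sees the sub-pixel position.

```python
def point_sampled(edge_pos, width=6):
    """One sample per pixel centre: lit if the centre lies left of the edge."""
    return [1.0 if x + 0.5 < edge_pos else 0.0 for x in range(width)]

def coverage(edge_pos, width=6):
    """Ideal result: the fraction of each pixel actually covered."""
    return [min(1.0, max(0.0, edge_pos - x)) for x in range(width)]

def post_blend(row):
    """Toy post-process filter: soften any hard step into a 75/25 ramp.
    It only ever sees the already-rasterised row, never `edge_pos`."""
    out = row[:]
    for x in range(len(row) - 1):
        if row[x] != row[x + 1]:
            out[x] = 0.75 * row[x] + 0.25 * row[x + 1]
            out[x + 1] = 0.25 * row[x] + 0.75 * row[x + 1]
    return out

positions = [2.0, 2.25, 2.5, 2.75, 3.0]  # edge moving in quarter-pixel steps

# Track the brightness of pixel 2 over time under each approach
filtered_pixel = [post_blend(point_sampled(p))[2] for p in positions]
true_coverage = [coverage(p)[2] for p in positions]

print(filtered_pixel)  # holds steady, then jumps in a single step
print(true_coverage)   # rises smoothly with the edge position
```

The filtered value sits still for several frames and then jumps as the underlying sample flips, which is the "pop"; a sub-pixel-aware technique would instead ramp the pixel up gradually as the edge crosses it.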

With the Medal of Honor clips, we see another perfect example of what Andersson describes - alpha-tested fences do indeed suffer because of the filtering, plus we see the effects of HUD artifacting and more pixel-popping.