The Secret Developers: what next-gen hardware balance means for gaming

Digital Foundry's new series where game-makers discuss the topics they are passionate about.

Have you ever wondered what developers really think about the latest gaming news and controversies? In this new series of Digital Foundry articles, it's game creators themselves who take centre-stage, offering a fresh, unique perspective on the issues of the day, free to write what they want about the subjects that they are passionate about, with a rock-solid assurance from us that their anonymity will be protected. In short, freshly served, informed opinion direct from the people creating the software we care about, with zero involvement from marketing or PR.

In this first piece, a seasoned multi-platform developer offers up his view on hardware balance - not just in terms of the current Xbox One/PlayStation 4 bunfight, but more importantly on how the technological make-up of both consoles will define the games we play over the next few years. If you're a game-maker who would like to contribute to the Secret Developers series, please feel free to contact us through digitalfoundry@eurogamer.net and be assured that any discussions will be dealt with in the strictest confidence.

With just weeks to go before the arrival of the PlayStation 4 and Xbox One, there seems to be a particular type of mania surrounding the technical capabilities of these two very similar machines. The raw specs reveal numbers seemingly light years apart, which clearly favour one console platform over the other, but it seems to me that at a more global level, people can't quite see the wood for the trees. Spec differences are relevant of course, but of far larger importance is the core design - the balance of the hardware - and how that defines, and limits, the "next-gen" games we will be playing over the next eight to ten years.

At this point I should probably introduce myself. I'm a games developer who has worked over the years across a variety of game genres and consoles, shipping over 35 million units in total on a range of games, including some major triple-A titles I'm sure you've played. I've worked on PlayStation 2, Xbox, PlayStation 3, Xbox 360, PC, PS Vita, Nintendo DS, iPhone, Wii U, PlayStation 4 and Xbox One. I'm currently working on a major next-gen title.

Over my time in the industry I've seen a wide variety of game engines, development approaches, console reveals and behind-the-scenes briefings from the console providers - all of which gives me a particular perspective on the current state of next-gen and how game development has adapted to suit the consoles that are delivered to us by the platform holders.

I was spurred into writing this article after reading a couple of recent quotes that caught my attention:

"For designing a good, well-balanced console you really need to be considering all the aspects of software and hardware. It's really about combining the two to achieve a good balance in terms of performance... The goal of a 'balanced' system is by definition not to be consistently bottlenecked on any one area. In general with a balanced system there should rarely be a single bottleneck over the course of any given frame." - Microsoft technical fellow Andrew Goossen

Many dismissed Goossen's comments as a PR explanation for technical deficiencies compared to PlayStation 4, but the reality is that balance is of crucial importance. When you are developing a game, getting to a solid frame-rate is the ultimate goal. It doesn't matter how pretty your game looks, or how many players you have on screen: if the frame-rate continually drops, it knocks the player out of the experience and back to the real world, and if it persists, it will ultimately drive them away from your game.

Maintaining this solid frame-rate drives a lot of the design and technical decisions made during the early phases of a game project. Sometimes features are cut not because they cannot be done, but because they cannot be done within the desired frame-rate.

In most games the major contributors to the frame-rate are:

- Can you simulate all of the action that's happening on the screen - physics, animation, HUD, AI, gameplay etc?

- Can you render all of the action that's happening on the screen - objects, people, environment, visual effects, post effects etc?

The first point relates to all of the things that are usually handled by the CPU and the second point relates to things that are traditionally processed by the GPU. Over the successive platform generations the underlying technology has changed, with each generation throwing up its own unique blend of issues:

- Gen1: The original PlayStation had an underpowered CPU and could draw a small number of simple shaded objects.

- Gen2: PlayStation 2 had a relatively underpowered CPU but could fill the standard-definition screen with tens of thousands of transparent triangles.

- Gen3: Xbox 360 and PlayStation 3 had the move to high definition to contend with, but while the CPUs (especially the SPUs) were fast, the GPUs were underpowered in terms of supporting HD resolutions with the kind of effects we wanted to produce.

In all of these generations it was difficult to maintain a steady frame-rate as the amount happening on-screen would cause either the CPU or GPU to be a bottleneck and the game would drop frames. The way that most developers addressed these issues was to alter the way that games appeared, or played, to compensate for the lack of power in one area or another and maintain the all-important frame-rate.
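To make the bottleneck idea concrete, here is a minimal Python sketch of a frame-budget check. It assumes an idealised pipelined engine in which the CPU prepares the next frame while the GPU draws the current one, so the frame can finish no faster than the slower of the two; real engines have sync points that make this an approximation. The function names and millisecond figures are hypothetical.

```python
# Minimal sketch: frame budget and bottleneck detection for an idealised
# pipelined engine, where CPU and GPU work overlap across frames.
# All timings below are hypothetical example figures, not measurements.


def frame_budget_ms(target_fps: int) -> float:
    """Time available per frame, in milliseconds, at a given frame-rate."""
    return 1000.0 / target_fps


def analyse_frame(cpu_ms: float, gpu_ms: float, target_fps: int) -> tuple[str, bool]:
    """Return (bottleneck, fits_budget) for one frame.

    With CPU and GPU running in parallel, the frame can't finish faster
    than the slower of the two, so that side is the bottleneck
    (ties are reported as CPU-bound).
    """
    frame_ms = max(cpu_ms, gpu_ms)
    bottleneck = "CPU" if cpu_ms >= gpu_ms else "GPU"
    return bottleneck, frame_ms <= frame_budget_ms(target_fps)


if __name__ == "__main__":
    print(f"60fps budget: {frame_budget_ms(60):.2f} ms")
    print(f"30fps budget: {frame_budget_ms(30):.2f} ms")
    # A busy scene: simulation fits, but rendering overruns at 60fps.
    print(analyse_frame(cpu_ms=12.0, gpu_ms=21.0, target_fps=60))
    print(analyse_frame(cpu_ms=12.0, gpu_ms=21.0, target_fps=30))
```

In this example the same scene is GPU-bound either way, but it only fits the budget at 30fps, which is exactly the kind of trade-off described above.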

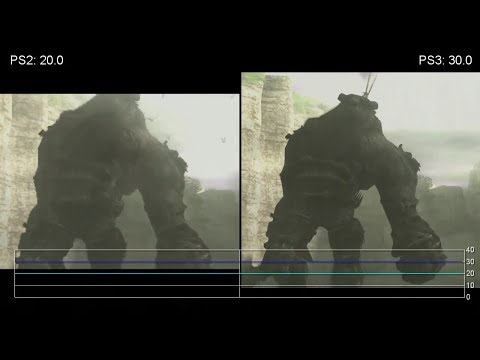

This shift started towards the end of Gen2 when developers realised that they could not simulate the world to the level of fidelity that their designers wanted, as the CPUs were not fast enough - but they could spend more time rendering it. This shift in focus can clearly be seen around 2005/2006 when games such as God of War, Fight Night Round 2 and Shadow of the Colossus arrived. These games were graphically great, but the gameplay was limited in scope and usually used tightly cropped camera positions to restrict the amount of simulation required.

Then, as we progressed into Gen3 the situation started to reverse. The move to HD took its toll on the GPU as there were now more than four times the number of pixels to render on the screen. So unless the new graphics chips were over four times faster than the previous generation, we weren't going to see any great visual improvements on the screen, other than sharper-looking objects.

Again, developers started to realise this and refined the way that games were made, which in turn influenced the overall design. As they learned how to get the most out of the architecture of the machines, they used the CPU power to add more layers of simulation, making games more complex and simulation-heavy, but this meant that they were very limited in what they could draw, especially at 60fps. If you wanted high visual fidelity in your game, you had to make a drastic, fundamental change to the game architecture and switch to 30fps.

Dropping a game to 30fps was seen as an admission of failure by a lot of the developers and the general gaming public at the time. If your game couldn't maintain 60fps, it reflected badly on your development team, or maybe your engine technology just wasn't up to the job. Nobody outside the industry at that time really understood the significance of the change, and what it would mean for games; they could only see that it was a sign of defeat. But was it?

Switching to 30fps doesn't necessarily mean that the game becomes much more sluggish or that there is less going on. It actually means that while the game simulation might well still be running at 60fps to maintain responsiveness, the lower frame-rate allows for extra rendering time and raises the visual quality significantly. This switch frees up a lot of titles to push the visual quality and not worry about hitting the 60fps mark. Without this change we wouldn't have hit the visual bar that we have on the final batch of Gen3 games - a level of attainment that is still remarkable if you think that the GPU powering these games was released over seven years ago. Now if you tell the gaming press, or indeed hardcore gamers, that your game runs at 30fps, nobody bats an eyelid; they all understand the trade-off and what this means for a game.
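One common way to keep simulation at 60fps while rendering at 30fps is a fixed-timestep loop: the simulation advances in fixed 1/60th-of-a-second steps, and rendering happens once per displayed frame. The Python sketch below is a hypothetical illustration, not any particular engine's code; it feeds in simulated 30fps frame times instead of reading a real clock, so the result is deterministic.

```python
# Sketch of a fixed-timestep game loop: the simulation always ticks at 60Hz,
# while rendering happens once per displayed frame (here, 30fps).
# Frame times are fed in rather than read from a clock, so the run is
# deterministic; a real engine would measure elapsed time instead.

SIM_STEP = 1.0 / 60.0  # fixed simulation step, in seconds


def run_loop(frame_times: list[float]) -> tuple[int, int]:
    """Run the loop over a sequence of frame durations.

    Returns (simulation_steps, frames_rendered).
    """
    accumulator = 0.0
    sim_steps = 0
    frames_rendered = 0
    for dt in frame_times:
        accumulator += dt
        # Consume as many whole simulation steps as the elapsed time allows.
        while accumulator >= SIM_STEP:
            sim_steps += 1  # update physics, AI, gameplay, etc.
            accumulator -= SIM_STEP
        frames_rendered += 1  # draw the world once per displayed frame
    return sim_steps, frames_rendered


if __name__ == "__main__":
    # One second of play at a steady 30fps.
    print(run_loop([1.0 / 30.0] * 30))
```

Over one second at 30fps this performs 60 simulation steps but only 30 renders, so input and physics keep their 60Hz cadence while each rendered frame gets twice the time budget.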

Speaking of GPUs, I remember early in the console lifecycle that Microsoft made it known that the graphics technology in the Xbox 360 was "better" than PS3's and they had the specs to prove it - something that sounds very familiar in relation to recent Xbox One/PS4 discussions. This little fact was then picked up and repeated in many articles and became part of the standard console argument that occurred at the time:

- "PS3 is better than Xbox 360 because of the SPUs."

- "Xbox 360 has a better graphics chip."

- "PS3 has a better d-pad controller compared to the Xbox 360."

- "Xbox Live is better for party chat."

The problem with these facts, taken in isolation, is that they are true but they don't paint an accurate picture of what it is like developing current-gen software.

One of the first things that you have to address when developing a game is, what is your intended target platform? If the answer to that question is "multiple", you are effectively locking yourself into compromising certain aspects of the game to ensure that it runs well on all of them. It's no good having a game that runs well on PS3 but chugs on Xbox 360, so you have to look at the overall balance of the hardware. As a developer, you cannot be driven by the most powerful console, but rather the high middle ground that allows your game to shine and perform across multiple machines.
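To illustrate the "high middle ground", here is a minimal Python sketch that runs the same workload on two hypothetical consoles with different strengths and shows a GPU advantage being absorbed by a CPU bottleneck. The platform names, relative speeds and workload figures are all invented, and it assumes CPU and GPU work overlap, so each platform's frame time is set by its slower side.

```python
# Sketch: the same workload on two hypothetical platforms with different
# strengths. Each platform's frame time is set by its own bottleneck, and a
# multiplatform game has to fit the slowest result. All numbers are invented
# for illustration.

from dataclasses import dataclass


@dataclass
class Platform:
    name: str
    cpu_speed: float  # relative CPU throughput (1.0 = baseline)
    gpu_speed: float  # relative GPU throughput (1.0 = baseline)


def frame_time_ms(p: Platform, cpu_work_ms: float, gpu_work_ms: float) -> float:
    """Frame time if CPU and GPU overlap: the slower side wins."""
    return max(cpu_work_ms / p.cpu_speed, gpu_work_ms / p.gpu_speed)


def worst_case_ms(platforms: list[Platform], cpu_work_ms: float, gpu_work_ms: float) -> float:
    """The frame time a cross-platform build must budget for."""
    return max(frame_time_ms(p, cpu_work_ms, gpu_work_ms) for p in platforms)


if __name__ == "__main__":
    a = Platform("Console A", cpu_speed=1.0, gpu_speed=1.4)  # stronger GPU
    b = Platform("Console B", cpu_speed=1.2, gpu_speed=1.0)  # stronger CPU
    # Baseline workload: 18 ms of CPU work, 20 ms of GPU work.
    for p in (a, b):
        print(p.name, round(frame_time_ms(p, 18.0, 20.0), 2))
    print("Budget for:", round(worst_case_ms([a, b], 18.0, 20.0), 2))
```

Console A's GPU gets through its work in roughly 14.3 ms, but its frame still takes 18 ms because the CPU is the bottleneck, and the game as a whole has to fit Console B's 20 ms.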

While one console might have a better GPU, the chances are that this performance increase will then be offset by bottlenecks in other parts of the game engine. Maybe these are related to memory transfer speeds, CPU speeds or raw connectivity bus throughputs. Ultimately it doesn't matter where the bottlenecks occur, it's just the fact that they do occur. Let's look at a quote from a studio that is well known for its highly successful cross-platform approach:

"The vast majority of our code is completely identical. Very very little bespoke code. You pick a balance point and then you tailor each one accordingly. You'll find that one upside will counter another downside..." - Alex Fry, Criterion Games

And another recent one from the statesman of video game development:

"It's almost amazing how close they are in capabilities, how common they are... And that the capabilities that they give are essentially the same." - John Carmack on the Xbox One and PS4

The key part in that statement is that they have similar capabilities. Not performance, but capabilities. I read that as Carmack acknowledging that the differences in power between the two are negligible when it comes to cross-platform development. Neither one is way ahead of the other, and they both deliver the same type of experience to the end user with minimal compromise.

With the new consoles coming out in November, the balance has shifted again. It looks like we will have much better GPUs, as they have improved significantly in the last seven years, while the target HD resolution has only shifted upwards from 720p to 1080p, a far smaller increase. Although these GPUs are not as fast on paper as the top PC cards, we do get some benefit from being able to talk directly to the GPUs with ultra-quick interconnects. But in this console generation it appears that the CPUs haven't kept pace. While they are faster than the previous generation, they are not an order of magnitude faster, which means that we might have to make compromises again in the game design to maintain frame-rate.
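The arithmetic behind "a far smaller increase" is simple. Assuming the standard 1280x720 and 1920x1080 framebuffer sizes, this quick Python check shows that 1080p asks for 2.25 times as many pixels per frame as 720p:

```python
# Pixel counts for the two HD targets, to show how much extra per-frame
# work the move from 720p to 1080p actually asks for.

RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
}


def pixel_count(name: str) -> int:
    """Number of pixels in one frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name}: {pixel_count(name):,} pixels")
    ratio = pixel_count("1080p") / pixel_count("720p")
    print(f"1080p / 720p = {ratio:.2f}x")
```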

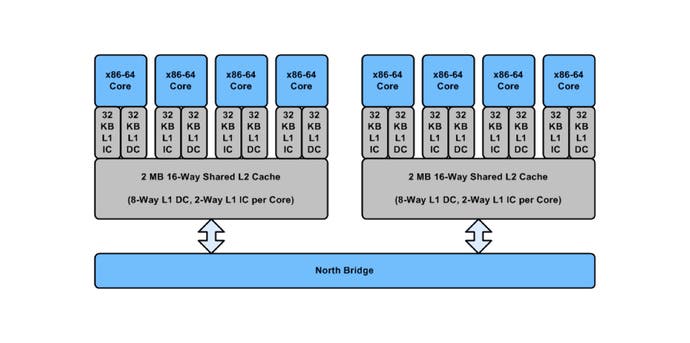

Both consoles have Jaguar-based CPUs, with some of the cores reserved for the OS and the rest available for game developers to use. These cores are, on paper, slower than those of previous console generations, but they have some major advantages. The biggest is that they now support Out of Order Execution (OOE), which means that the CPU itself can reorder work, executing independent instructions while it is waiting on an operation, like a fetch from memory.

Removing these "bubbles" in the CPU pipeline, combined with eliminating some nasty previous-gen issues like load-hit-stores, means that the CPUs' Instructions Per Cycle (IPC) count will be much higher. A higher IPC number means that the CPU is effectively doing more work for a given clock cycle, so it doesn't need to run as fast to do the same amount of work as a previous-generation CPU. But let's not kid ourselves here - both of the new consoles are effectively matching low-power CPUs with desktop-class graphics cores.
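The IPC argument boils down to a simple product: per-core throughput is roughly instructions per cycle multiplied by clock speed. The Python sketch below uses invented IPC values and illustrative clock speeds, not measured figures for any real console CPU, to show how a core running at half the clock can match a faster-clocked one if it stalls less.

```python
# Sketch: effective single-core throughput ~= IPC x clock speed.
# The IPC values and clocks are illustrative only; real IPC depends
# heavily on the workload, cache behaviour and compiler output.


def instructions_per_second(ipc: float, clock_ghz: float) -> float:
    """Approximate instructions retired per second on one core."""
    return ipc * clock_ghz * 1e9


if __name__ == "__main__":
    # Hypothetical in-order core: high clock, frequent pipeline stalls.
    in_order = instructions_per_second(ipc=0.5, clock_ghz=3.2)
    # Hypothetical out-of-order core: half the clock, fewer stalls.
    out_of_order = instructions_per_second(ipc=1.0, clock_ghz=1.6)
    print(f"in-order:     {in_order:.2e} instructions/s")
    print(f"out-of-order: {out_of_order:.2e} instructions/s")
```

With these made-up numbers the two cores retire the same number of instructions per second; doubling IPC exactly compensates for halving the clock.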

So how will all of this impact the first games for the new consoles? Well, I think that the first round of games will likely be trying to be graphically impressive (it is "next-gen" after all) but in some cases, this might be at the expense of game complexity. The initial difficulty is going to be using the CPU power effectively to prevent simulation frame drops and until studios actually work out how best to use these new machines, the games won't excel. They will need to start finding that sweet spot where they have a balanced game engine that can support the required game complexity across all target consoles. This applies equally to both Xbox One and PlayStation 4, though the balance points will be different, just as they are with 360 and PS3.

One area of growth we will probably see is in the use of GPGPU (effectively offloading CPU tasks onto the graphics core), especially in studios that haven't developed on PC before and haven't had exposure to the approach. All of the current-gen consoles have quite underpowered GPUs compared to PCs, so a lot of time and effort was spent trying to move tasks off the GPU and onto the CPU (or SPUs in the case of PS3). This would then free up valuable time on the GPU to render the world. And I should point out that for all of Xbox 360's GPU advantage over PS3's RSX, at the end of the day, the balance of the hardware was still much the same at the global level. Occlusion culling, backface culling, shader patching, post-process effects - you've heard all about the process of moving graphics work from GPU to CPU on PlayStation 3, but the reality is that - yes - we did it on Xbox 360 too, despite its famously stronger graphics core.
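Backface culling is a good example of the GPU-to-CPU offloading described above: the CPU discards triangles that face away from the camera so the GPU never has to process them. Here is a minimal Python sketch of the standard normal-versus-view-direction test, assuming counter-clockwise winding marks a front face and that vertices and camera share the same world space. A production version would typically process large vertex batches with SIMD code rather than per-triangle Python.

```python
# Sketch of CPU-side backface culling: drop triangles that face away from
# the camera before they are submitted to the GPU.
# Assumes counter-clockwise winding marks the front face and that vertex
# positions and the camera position are in the same (world) space.

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def is_front_facing(v0: Vec3, v1: Vec3, v2: Vec3, camera: Vec3) -> bool:
    """True if the triangle's face normal points towards the camera."""
    normal = cross(sub(v1, v0), sub(v2, v0))
    to_camera = sub(camera, v0)
    return dot(normal, to_camera) > 0.0


def cull_backfaces(triangles: list[tuple[Vec3, Vec3, Vec3]], camera: Vec3):
    """Keep only the triangles that could be visible from the camera."""
    return [tri for tri in triangles if is_front_facing(*tri, camera)]


if __name__ == "__main__":
    camera = (0.0, 0.0, 5.0)
    facing = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))  # CCW seen from +z
    away = ((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0))  # same triangle, reversed
    print(len(cull_backfaces([facing, away], camera)))
```

Only the triangle wound towards the camera survives, halving what this tiny scene would send to the GPU.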

So, where does this leave us in the short term? To summarise:

Will the overall low-level hardware speed of the console technology affect the games that are created on the consoles?

Most studios, especially third-party studios, will not be pushing the consoles that hard in their release titles. This will be due to a mix of reasons relating to time, hardware access (it typically takes over two years to make a game and we got next-gen hardware back in February) and maintaining parity between different console versions of the game.

Yes, parity matters and we do design around it. Looking back to the early days of the Xbox 360/PS3 era, one of the key advantages Microsoft had was a year's head start, so we had more time with the development environment. Parity between SKUs increased not just because we grew familiar with the PS3's hardware, but also because we actively factored it into the design, exactly in the way Criterion's Alex Fry mentioned earlier. With next-gen consoles arriving simultaneously, that way of thinking will continue.

Will we see a lot of games using a lower framebuffer size?

Yes, we will probably see a lot of sub-1080p games (with hardware upscale) on one or both of the next-gen platforms, but this is probably because there is not enough time to learn the GPU when the development environment, and sometimes clock speeds, are changing underneath you. If a studio releases a sub-1080p game, is it because they can't make it run at 1080p? Is it because they don't possess the skills or experience in-house? Or is it a design choice to make their game run at a stable frame-rate for launch?

This choice mirrors the situation we previously had with the 60fps vs. 30fps discussion. It might not be what the company wants for the back of the box, but it is the right decision for getting the game to run at the required frame-rate. Again, it is very easy to point out this fact and extrapolate from there on the perceived 'power' of the consoles, but this doesn't take all the design decisions and the release schedule into account.

The gaming public doesn't yet understand why this decision is made and what impact it has on a game once it is released. People are still too focused on numbers, but as more games arrive for the consoles and people start to experience them, I think that opinions will change. The actual back-buffer resolution will become far less important in discussions compared to the overall gaming experience itself, and quite rightly so.

So why are studios rushing out games when they know that they could do better given more time?

When it comes to console choice, most gamers will purchase based on factors such as previous ownership, the opinions of the gaming press (to some extent), which consoles their friends buy to play multiplayer games, and in some cases, which exclusives are being released (Halo, Uncharted etc). This means that studios are under a lot of pressure to release games with new consoles, as they help drive hardware sales. Also, if a studio releases a game at launch, they are likely to sell more copies, as console purchasers require games in order to show off their shiny new consoles to their friends.

So, with limited time, limited resources and limited access to development hardware before the retail consoles arrive, studios have to make a decision. Do they want their game to look good, play well and maintain a solid frame-rate? If so, compromises have to be made and screen resolution is an easy change to make that has a dramatic effect on the frame-rate (900p, for example, is only 70 per cent of the number of pixels in a 1080p screen). This will likely be the main driving reason behind the resolution choice for the launch titles and won't be any indicator of console "power" - compare Project Gotham Racing 3's sub-native presentation with its sequel, for example.
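The 70 per cent figure is a rounded version of the pixel-count ratio between 1600x900 and 1920x1080 (about 69.4 per cent). The Python sketch below also estimates how far that moves a GPU frame time, under a simplifying assumption of ours, not the author's: that GPU cost scales linearly with pixel count. Vertex work and other fixed costs don't shrink with resolution, so the real saving will usually be smaller. The 38 ms figure is hypothetical.

```python
# Sketch: how dropping from 1080p to 900p affects a pixel-bound GPU frame.
# Assumes GPU time scales linearly with the number of pixels rendered,
# which only holds for the pixel-bound part of a frame.


def pixels(width: int, height: int) -> int:
    return width * height


def scaled_gpu_ms(gpu_ms_at_1080p: float, width: int, height: int) -> float:
    """Estimate GPU frame time at another resolution (linear-in-pixels model)."""
    return gpu_ms_at_1080p * pixels(width, height) / pixels(1920, 1080)


if __name__ == "__main__":
    ratio = pixels(1600, 900) / pixels(1920, 1080)
    print(f"900p has {ratio:.1%} of the pixels of 1080p")
    # Hypothetical frame: 38 ms of GPU work at 1080p misses a 30fps budget
    # (about 33.3 ms), but the estimate at 900p fits comfortably.
    print(f"{scaled_gpu_ms(38.0, 1600, 900):.1f} ms at 900p")
```

Under this model a frame that overshoots the 30fps budget at 1080p drops to roughly 26.4 ms at 900p, which is why resolution is such a tempting lever late in a launch project.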

As a developer I feel that I am overly critical of other people's games and this has tainted the appeal of playing new games. Instead of enjoying them as the developers hoped, I am too busy mentally pointing out issues with rendering, or physics simulation, or objects clipping through the environment. It's hard to ignore some of these things, as it is, after all, what I am trained to spot and eliminate before a game ships. But I hope with the first round of games that I will be able to see past these minor things and enjoy the games as they were intended.

However, I doubt that I will be playing a next-gen game and saying to myself, "Hmm, you can see the difference that the front-side bus speed makes on this game," or, "If only they had slightly faster memory speeds then this would have been a great game." Let's just wait for the consoles to be released, enjoy the first wave of next-gen games and see where it leads us. You might be surprised where we end up.