Noddy's Guide To Graphics Card Jargon

Graphics card jargon explained

The last couple of weeks have seen a frenzy of graphics card announcements and previews, with 3dfx, ATI and NVIDIA all claiming to have produced the best thing since sliced bread. To allay your confusion, EuroGamer has cut through the jargon and hype to help you pick out the contenders from the also-rans.

To start off we will be explaining some of the most common terms that you will come across when comparing the new generation of graphics cards, and then next week we will be bringing you all the latest information on the cards themselves.

So, without any further ado...

Full-Scene Anti-Aliasing

"Full-Scene Anti-Aliasing" (or FSAA for short) is a term that first came into common use towards the end of last year, when 3dfx were hyping their next graphics chip, now known as the "VSA-100".

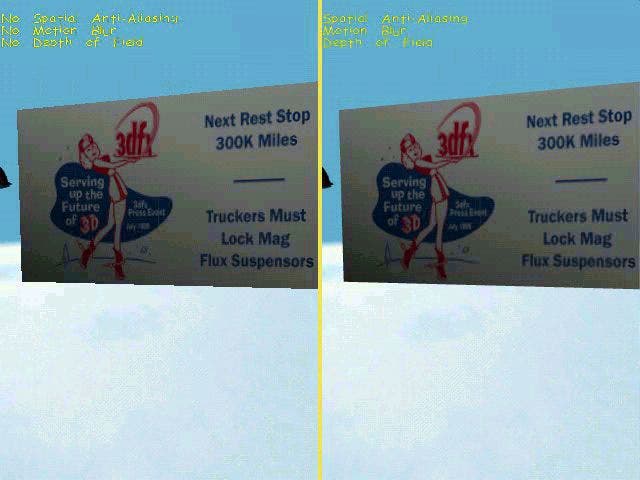

So what's all the fuss about? Well, if you take a look at the illustration above, you should be able to see that there appear to be steps (known as "jaggies") at the edges of the sign on the left. What FSAA does is to remove or reduce the appearance of these jagged edges, as can be seen on the sign on the right.

Jaggies are a particular problem at low resolutions, but even at higher resolutions there are visible jagged edges, as well as other aliasing problems. For example, if you look at a thin object, such as a lamp post, from a long way off in a typical 3D game, bits of it may appear to pop in and out of view as you move from side to side.

Obviously lamp posts don't behave like this in the real world, so what's going wrong? Well, the easiest way to look at it is with fonts. So here we have a pair of zeros, no doubt a familiar sight to all our German readers.

But in this case the one on the left is normal, while the one on the right is anti-aliased. As you can see, each pixel on the normal zero is either black or white (on or off), whereas anti-aliasing introduces shades of grey where only part of a pixel is covered.

Your high-octane 3D games are effectively doing the same thing, but with texture mapped polygons instead of digits. A polygon either covers a pixel or it doesn't - if only a little bit of the pixel is filled by the polygon, then it is ignored completely when that pixel is displayed. So if you have a slightly slanting line that is almost horizontal, you will see steps along each edge where the next row of pixels up your screen is being covered. And if you have a very thin object in the distance, parts of it may not show up at all.
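
For the curious, the all-or-nothing rule can be sketched in a few lines of Python. This is a toy illustration rather than how any particular card works: the sloping edge in `inside`, the single sample at the pixel centre, and the 4x4 grid of sub-samples are all assumptions made up for the example.

```python
def inside(x, y):
    """Hypothetical shape: everything below a gently sloping edge."""
    return y < 0.3 * x


def aliased(px, py):
    """One sample at the pixel centre: the pixel is either fully on or fully off."""
    return 1.0 if inside(px + 0.5, py + 0.5) else 0.0


def anti_aliased(px, py, n=4):
    """Average an n x n grid of samples to estimate how much of the pixel is covered."""
    hits = 0
    for i in range(n):
        for j in range(n):
            if inside(px + (i + 0.5) / n, py + (j + 0.5) / n):
                hits += 1
    return hits / (n * n)


# Pixel (0, 0) is only slightly covered by the shape.
print(aliased(0, 0))       # 0.0   - the sliver of coverage is ignored
print(anti_aliased(0, 0))  # 0.125 - the sliver becomes a faint shade
```

The second function is the "shades of grey" idea from the zeros above: a partly covered pixel gets a value somewhere between off and on.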

Since 3dfx announced that their new cards would support FSAA, both ATI and NVIDIA have followed suit. The big question is, are all the various ways of implementing FSAA equal, or are some more equal than others? 3dfx uses "spatial" FSAA, whereas ATI and NVIDIA both use "super-sampling" FSAA.

3dfx's approach uses their "T-buffer" to render either two or four slightly different versions of a scene at once, and then average the results. The "super-sampling" FSAA used by ATI and NVIDIA renders the scene at a higher resolution and then samples it down. The bottom line is that 3dfx's system is more sophisticated and generally looks marginally better, although both will give you a very noticeable improvement in visual quality.
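
The "render big, then sample down" idea behind super-sampling can be sketched roughly as follows. The image here is a plain list of greyscale rows, and averaging each 2x2 block is an assumption made for simplicity; real hardware may filter differently.

```python
def downsample_2x(image):
    """Average each 2x2 block of a greyscale image (a list of rows) into one pixel."""
    out = []
    for y in range(0, len(image), 2):
        row = []
        for x in range(0, len(image[y]), 2):
            total = (image[y][x] + image[y][x + 1] +
                     image[y + 1][x] + image[y + 1][x + 1])
            row.append(total / 4)
        out.append(row)
    return out


# A hard diagonal edge rendered at twice the target resolution.
hi_res = [
    [0, 0, 0, 1],
    [0, 0, 1, 1],
    [0, 1, 1, 1],
    [1, 1, 1, 1],
]
print(downsample_2x(hi_res))  # [[0.0, 0.75], [0.75, 1.0]]
```

Note that the card has to render four times as many pixels to produce each final frame in this sketch, which is where the cost comes from.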

Check this comparison shot, taken in Quake 3 Arena using an NVIDIA GeForce running at 640x480. There are very obvious jaggies all over the place in the shot on the left, but on the right with FSAA enabled the jaggies have almost all disappeared. Sweet.

Both approaches will chew up a lot of your graphics card's precious "fill rate" though. Games like first person shooters tend to be limited by a card's fill rate, especially at higher resolutions, and so using FSAA with them could cause your all-important frame rate to drop markedly. On the bright side, in most first person shooters you aren't going to be standing around long enough to notice the jagged edges anyway.

Where FSAA should really shine is in 3D adventure games, RPGs, flight sims and driving games. These tend to put less stress on the graphics card, so you have enough fill rate to spare on FSAA, and the jagged edges and poly popping it is intended to cure tend to be more visible in these types of game anyway.

Texture Compression

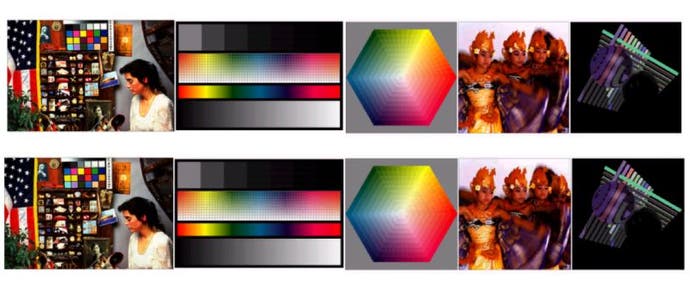

Texture compression is a method of reducing the file size of textures without noticeably reducing their quality. This has a number of advantages...

Most obviously, compressed textures take up less space on your hard drive, as well as on the CD-ROM you installed the game from. This lets designers pack more high resolution textures into a game without needing to put them on a second CD.

They also take up less space in memory, meaning you can cram more textures on to your graphics card's limited supply of RAM. When your graphics card runs out of memory to store textures, it has to load them up from your much slower system memory or, even worse, your hard drive. And it has to discard some of the textures it already has in memory to make room for the new ones. The result is "texture thrashing", which can cause jerkiness, and reduce your frame rate.

Of course, because your textures are now taking up less memory space, that also means that when your card does need to load them up through your AGP slot, it can do so more quickly. The end result is that developers can use more detailed and more numerous textures in their games without causing a big performance hit.
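
To put some rough numbers on that, here is a back-of-the-envelope sketch. Every figure in it is an illustrative assumption rather than a measurement: a 16 MB texture budget, 256x256 textures in 16-bit colour, and a flat 4:1 compression ratio.

```python
def texture_bytes(width, height, bytes_per_texel):
    """Size of one uncompressed texture in bytes."""
    return width * height * bytes_per_texel


def textures_that_fit(budget_bytes, size_bytes):
    """How many textures of a given size fit in the memory budget."""
    return budget_bytes // size_bytes


budget = 16 * 1024 * 1024          # hypothetical memory set aside for textures
raw = texture_bytes(256, 256, 2)   # 16-bit colour, uncompressed
ratio = 4                          # assumed compression ratio
compressed = raw // ratio

print(textures_that_fit(budget, raw))         # 128
print(textures_that_fit(budget, compressed))  # 512
```

The same ratio applies to transfer time: a texture a quarter of the size takes roughly a quarter as long to pull across the bus.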

The standard form of texture compression was originally developed by S3, and is called "S3TC" (short for "S3 Texture Compression", surprisingly enough). It has also been built into Microsoft's DirectX code as "DXTC", and support for this is now widespread. Both Quake III Arena and Unreal Tournament use S3TC in one of its forms, and most new graphics cards fully support it as well.

Meanwhile, 3dfx have developed their own form of texture compression called "FXT1", which is being used by their new range of graphics cards. 3dfx claim it offers better quality compression than S3TC, although the difference is debatable at best. The one real advantage it does have, though, is that 3dfx have released the code as "open source", allowing other manufacturers and developers to use it free of charge. This means that FXT1 is supported on Linux and the Macintosh, whereas DXTC is only available in Windows.

Memory

Memory is an important (if rather boring) part of your graphics card. Both how much memory your card has and how fast it is can be vital to getting the most out of games.

As we have just explained, if your graphics card runs out of memory during a game it will be forced to load data from your hard drive or system memory, which will cause slowdowns. So obviously the amount of memory you have on the card is important. Today all cards have at least 16Mb of RAM, and most have 32Mb or more.

Getting a 64Mb graphics card is generally a waste of money for now though, because at the moment few games actually need more than 32Mb, especially with the introduction of texture compression.

The exception is 3dfx's new range of graphics cards. Their Voodoo 5 cards have two or even four processors on them, and each of those chips needs its own supply of texture memory. If you buy a 32Mb Voodoo 5 5000, each of the two chips on it effectively only has access to about 20Mb of the memory.

The type of memory is also important, and at the moment most graphics cards use either SDRAM or DDR memory. The difference is that SDRAM transfers data only once per clock cycle, whereas DDR (short for "Double Data Rate") memory can transfer twice per cycle, effectively doubling the amount of "memory bandwidth" available to your graphics card. In other words, it can move data around twice as fast.

When you see the memory clock rate of a graphics card listed, if it uses DDR it will often show the effective clock rate rather than the true one. In other words, the memory may only be running at 150MHz internally, but because it can transfer data twice as fast as SDRAM it is listed as being 300MHz memory.
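
The sums are simple enough to sketch. The 150MHz clock comes from the example above; the 128-bit memory bus is an assumption added here purely so that a bandwidth figure can be worked out.

```python
def effective_clock_mhz(clock_mhz, transfers_per_cycle):
    """The 'marketing' clock: real clock times transfers per cycle."""
    return clock_mhz * transfers_per_cycle


def bandwidth_mb_per_s(clock_mhz, transfers_per_cycle, bus_width_bits):
    """Peak memory bandwidth in millions of bytes per second."""
    return effective_clock_mhz(clock_mhz, transfers_per_cycle) * bus_width_bits / 8


BUS_WIDTH_BITS = 128  # assumed bus width, not taken from any spec sheet

print(effective_clock_mhz(150, 1))                 # 150  (SDRAM)
print(effective_clock_mhz(150, 2))                 # 300  (DDR)
print(bandwidth_mb_per_s(150, 1, BUS_WIDTH_BITS))  # 2400.0
print(bandwidth_mb_per_s(150, 2, BUS_WIDTH_BITS))  # 4800.0
```

These are peak figures; what a game actually gets will be lower.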

But why are the type and speed of memory used so important? Well, as the speed of cards increases, we are getting to the point where the frame rate in your games is often limited by how fast the card can move data around in its memory rather than how fast it can process that data once it gets to the right place. The GeForce was a good example of this - the original SDRAM version was a little disappointing, and only when versions using DDR memory were released did we see the true performance of the card unleashed.

Transform & Lighting

One of the biggest buzzwords in the graphics industry at the moment is "T&L", short for "Transform And Lighting".

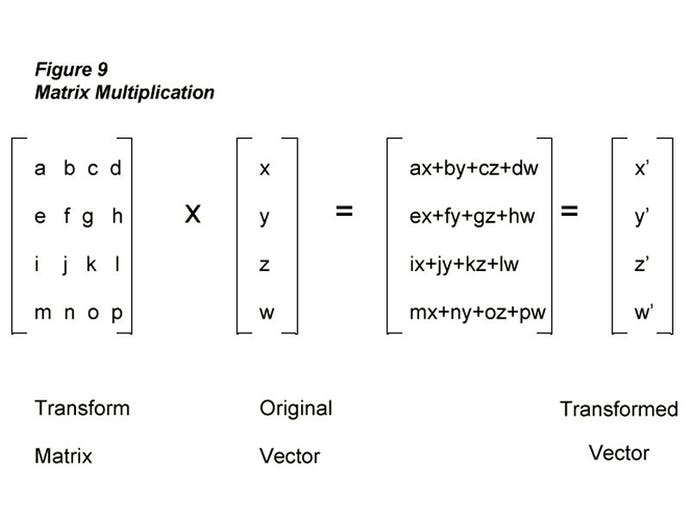

The "transform" part carries out mathematical operations on a set of data, in this case taking the co-ordinates which tell you where the triangles that make up a 3D scene are, and working out where on your screen they should be drawn based on their positions within the game world.
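
A very stripped-down sketch of the transform step might look like this. It assumes a camera that sits at `camera_pos` and looks straight down the z axis with no rotation, and it ignores the screen's aspect ratio; real pipelines do the same job with 4x4 matrices, and the function name and parameters here are made up for the example.

```python
def transform_vertex(vertex, camera_pos, focal_length, screen_w, screen_h):
    """Map a 3D world position to 2D pixel coordinates (or None if behind the camera)."""
    # World space -> camera space (translation only in this sketch).
    x = vertex[0] - camera_pos[0]
    y = vertex[1] - camera_pos[1]
    z = vertex[2] - camera_pos[2]
    if z <= 0:
        return None  # behind the camera, so nothing to draw

    # Perspective divide: things further away end up nearer the centre.
    px = focal_length * x / z
    py = focal_length * y / z

    # Camera space -> pixels, with the origin at the top left of the screen.
    sx = screen_w / 2 + px * screen_w / 2
    sy = screen_h / 2 - py * screen_h / 2
    return (sx, sy)


print(transform_vertex((0.0, 0.0, 5.0), (0.0, 0.0, 0.0), 1.0, 640, 480))  # (320.0, 240.0)
print(transform_vertex((1.0, 1.0, 2.0), (0.0, 0.0, 0.0), 1.0, 640, 480))  # (480.0, 120.0)
```

A scene with thousands of triangles needs this doing for every corner of every triangle, every frame, which is why it adds up.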

The "lighting" part (rather obviously) is where real time light effects are calculated. As these calculations must be carried out many times for every frame rendered, the faster you can process them the faster your game can run.
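
As a rough idea of what one of these calculations involves, here is a sketch of simple diffuse lighting, where a surface gets brighter the more directly it faces the light. This particular model is an assumption chosen because it is common and short, not a description of what any specific card or game does.

```python
import math


def normalize(v):
    """Scale a 3D vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def diffuse_intensity(normal, to_light):
    """Brightness from 0.0 to 1.0 based on the angle between surface and light."""
    n = normalize(normal)
    l = normalize(to_light)
    n_dot_l = sum(a * b for a, b in zip(n, l))
    return max(0.0, n_dot_l)  # surfaces facing away from the light get nothing


print(diffuse_intensity((0, 0, 1), (0, 0, 5)))   # 1.0 - light directly overhead
print(diffuse_intensity((0, 0, 1), (0, 0, -1)))  # 0.0 - light behind the surface
```

Multiply that by every vertex and every light in the scene and you can see where the processor time goes.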

Hardware acceleration for T&L means that your graphics card does the hard work instead of your CPU, leaving your computer with more processor time to spend on other tasks, such as AI and physics. And as the graphics card is specifically designed with this task in mind, it can do it faster than current CPUs, allowing for more detailed scenes and faster frame rates, particularly on slower computers.

Some games already support hardware T&L acceleration, such as id Software's Quake 3 Arena, and T&L is definitely the big feature of the future. A whole host of top games are already queueing up to support it, including Black & White, Evolva, Halo, Giants, and Tribes 2.

Vertex Skinning

Games like Half-Life use "skeletal animation", which means that your model is animated by moving a skeleton beneath the surface. The way the "bones" move then controls how the model (effectively the "skin" of the character) behaves, and this in a nutshell is what vertex skinning does.

The movements of the skin are controlled by giving each point ("vertex") on the model's surface a series of weightings, telling it which bones should affect its movement and how. Once you have done this you then only need to move the bones and the computer will move the surface for you.
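
The weighting idea can be sketched in 2D like this. Each "bone" is modelled as a function that moves a point, and `rotate_about` and `skin_vertex` are hypothetical helpers invented for the example; real engines use matrices in 3D, but the blending works the same way.

```python
import math


def rotate_about(pivot, angle_deg):
    """Return a hypothetical 'bone' that rotates points around a pivot."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)

    def bone(p):
        dx, dy = p[0] - pivot[0], p[1] - pivot[1]
        return (pivot[0] + c * dx - s * dy, pivot[1] + s * dx + c * dy)

    return bone


def skin_vertex(vertex, influences):
    """Blend the positions each bone would give, using weights that sum to 1."""
    x = sum(weight * bone(vertex)[0] for bone, weight in influences)
    y = sum(weight * bone(vertex)[1] for bone, weight in influences)
    return (x, y)


upper_arm = rotate_about((0.0, 0.0), 0)   # stays put
forearm = rotate_about((1.0, 0.0), 90)    # bends 90 degrees at the elbow

# A point near the elbow follows both bones equally.
print(skin_vertex((2.0, 0.0), [(upper_arm, 0.5), (forearm, 0.5)]))
```

With the weights split evenly, the point ends up halfway between where each bone alone would have put it, which is what gives a smooth bend instead of a sharp crease.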

Storing the animations this way uses less hard drive space and memory than the old method, which relied on moving the surface itself and storing the positions of every vertex for every frame of animation. For example, by adding skeletal animation to the Quake 3 Arena engine, Ritual managed to reduce the amount of memory consumed by the lead character in their new game FAKK2 from 32Mb to just 2Mb!

Vertex skinning requires a lot of processor power to do properly though. As with T&L, doing the necessary calculations on a dedicated piece of graphics hardware instead of on your CPU can allow you to do the same calculations faster, or to do more complex calculations, giving you more life-like characters.

How accurately the card can do vertex skinning is measured by the number of matrices that it can calculate for each vertex - in other words, how many bones can affect the movement of each point on the skin. If you want more bones to affect a given vertex than your hardware can support, you have to do all the calculations on your CPU instead, so obviously the more your card supports the better!

Keyframe Interpolation

One thing that skeletal animation isn't particularly good at is facial expressions, and that's where Keyframe Interpolation comes in.

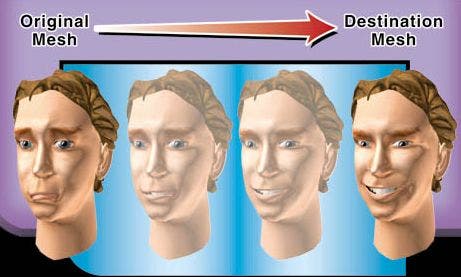

The idea is that, instead of storing all the dozens of frames that make up an animation, you just store a few key frames and then your graphics card fills in all the intermediate stages for you. An example can be seen in the picture above, where the first and last stages are pre-defined, and the two in the middle have been filled in by the computer.
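
In its simplest form, filling in the gaps is just a straight-line blend between two stored poses. The sketch below assumes plain linear interpolation and a made-up one-vertex "face"; the source doesn't say exactly how any card does the blending.

```python
def lerp(a, b, t):
    """Blend from a (t = 0) to b (t = 1)."""
    return a + (b - a) * t


def interpolate_frame(key_a, key_b, t):
    """Blend every vertex of one key frame towards the matching vertex of the next."""
    return [
        tuple(lerp(a, b, t) for a, b in zip(va, vb))
        for va, vb in zip(key_a, key_b)
    ]


# Two stored key frames for a hypothetical mouth corner.
neutral = [(0.0, 0.0)]
smile = [(3.0, 6.0)]

# Two in-between frames, like the middle pair in the picture.
for step in (1, 2):
    print(interpolate_frame(neutral, smile, step / 3))
```

Only `neutral` and `smile` need storing; everything in between is worked out on the fly.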

In theory this should allow for smoother, more life-like facial animations, as developers can put in a range of different facial expressions and then let the graphics card do the animations for morphing between them. So far only ATI's newly announced Radeon card supports this feature though, so it's uncertain how many developers will actually use it at first.

Bump Mapping

Bump mapping is simply a way of making surfaces look bumpy without actually changing the shape of them or using more polygons to make up your scene.

There are three main ways of bump-mapping - emboss, dot product 3, and environment mapped bump mapping (EMBM). The details of how they all work aren't tremendously important. What matters is that they all make surfaces look more realistic, but that embossing is the least realistic of the three.

Most of the latest generation of graphics cards will support one or more of these methods. The most popular so far is EMBM, which was championed by Matrox with their G400 graphics cards. Games such as Slave Zero, Expendable and Battlezone II already support EMBM, and future titles such as Black & White, Dark Reign II and Grand Prix 3 will do so too. More games are likely to follow suit as the level of hardware support for the feature improves.

Fill Rate

Graphics card companies quote lots of big numbers whenever they are hyping a new product, and often they have little or no relation to reality. The most important of these numbers though is the fill rate of the card, which is measured in "pixels per second" or "texels per second".

The Pixel/sec rate is simply how fast the card can process pixels ready to slap them up on your monitor, and is equal to the clock rate of your graphics card multiplied by the number of rendering pipelines it has. In other words, how fast the card is carrying out operations multiplied by how many it can do at once. For example, the GeForce 2 has four rendering pipelines and runs at 200MHz, so the fill rate is 800MegaPixels/sec.

The Texel/sec rate is measuring the rate at which the card can prepare textured pixels for your screen. This is simply the Pixel/sec fill rate multiplied by the number of texture units per rendering pipeline. As the GeForce 2 has two of them, this gives it an impressive fill rate of 1600MegaTexels/sec, or 1.6GigaTexels/sec.
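
The two sums above are easy to check for yourself. This sketch uses the GeForce 2 figures quoted in this article; the function names are made up for the example.

```python
def pixel_fill_rate(clock_mhz, pipelines):
    """MegaPixels/sec: clock rate times the number of rendering pipelines."""
    return clock_mhz * pipelines


def texel_fill_rate(clock_mhz, pipelines, texture_units_per_pipeline):
    """MegaTexels/sec: pixel fill rate times texture units per pipeline."""
    return pixel_fill_rate(clock_mhz, pipelines) * texture_units_per_pipeline


# GeForce 2: 200MHz, four pipelines, two texture units per pipeline.
print(pixel_fill_rate(200, 4))     # 800
print(texel_fill_rate(200, 4, 2))  # 1600
```

Plug in the numbers from any other card's spec sheet to see how its quoted fill rate was arrived at.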

Generally the higher your fill rate, the faster your games should run on the card, and the higher the resolutions it will be able to handle. This isn't always true though, as other factors such as driver quality, advanced features, and memory bandwidth can affect real world performance.

3dfx, ATI and NVIDIA are all claiming they have the fastest card in the world at the moment, but just looking at the numbers won't necessarily give you the whole picture... So come back next week, when we will be taking a look at their latest offerings, and seeing which is likely to give you the most bang for your buck (or euro).

John "Gestalt" Bye
