GeForce 3

Review - the long-awaited GeForce 3 is almost upon us, and Mugwum has taken a look at what will be one of the first cards to hit store shelves

New horizons

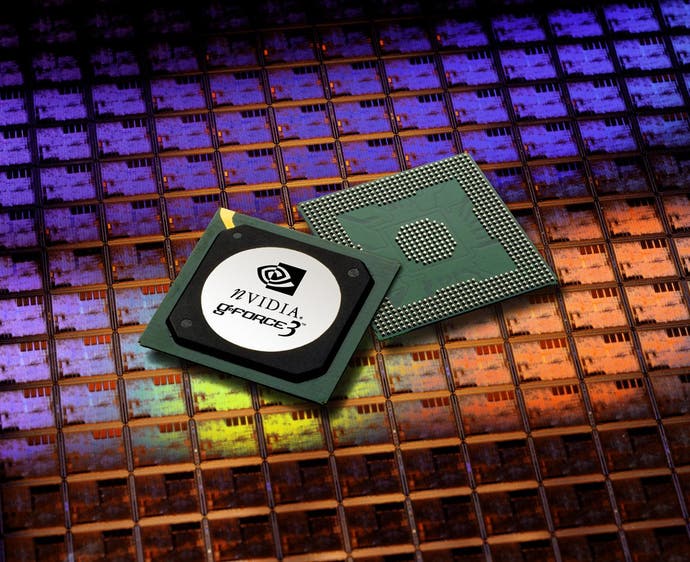

Coming up with ways to reinvent the graphics card market is something NVIDIA pride themselves on. Ever since the TNT2 really started giving 3Dfx (big 'D') a run for its money many moons ago, they've been on the up with every new release. The GeForce 256, the GeForce 2 GTS - even the humble GeForce 2 MX broke down barriers in the budget graphics card market. Now they are poised to do the same thing again, thanks to the many charms of the new GeForce 3, the first graphics card to boast a programmable processing unit, improved anti-aliasing support and a million and one other buzzword features. This is arguably the most complicated product ever reviewed on these pages. For starters, the GeForce 3 is made up of 57 million transistors, manufactured on a new 0.15-micron process that helps to lower power consumption and enable higher clock rates. It shares a few common characteristics with the GeForce 2 - most notably its four pixel pipelines that can apply two simultaneous textures per pixel. The actual clock speed of the chip varies depending on the board reseller, but our ELSA Gladiac 920 uses a 200MHz clock speed with 480MHz DDR memory, very similar to the GeForce 2 Ultra. That, however, is where the trickle of similarities runs dry. In this review we will obviously be demonstrating the abilities of the GeForce 3 as present on our test card, but thanks to a lot of technical NVIDIA documentation and the patience of its press spokespeople, we're able to bring you a fairly decent explanation of why the GeForce 3 is so new and improved, as well as what it can do with current games and applications.

Vertex Shader

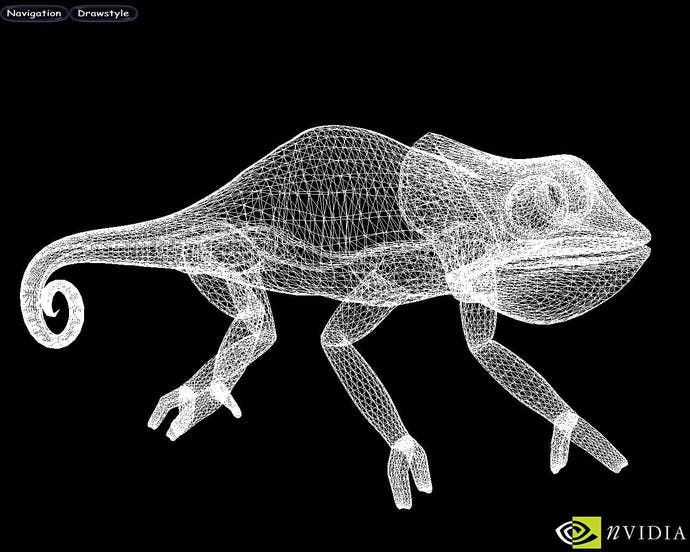

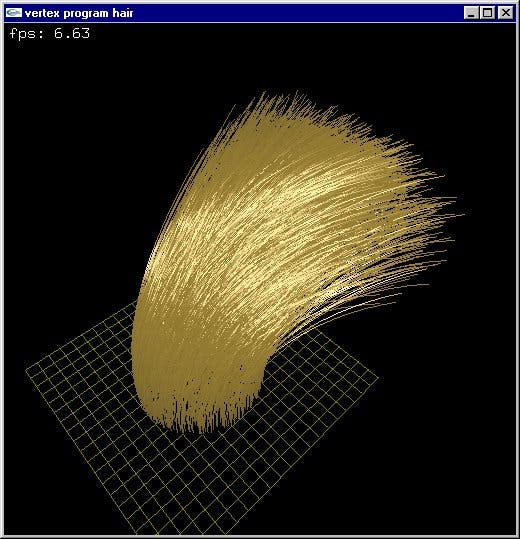

The first big difference of note is the so-called Vertex Shader, which you will recall from our preview makes up one half of the named features in NVIDIA's "nfiniteFX Engine". The Vertex Shader is the real workhorse of the GeForce 3, executing within the GPU special programs for each vertex of an object or a complete frame. If you're wondering what a vertex actually is, think of the fairly logical way 3D scenes appear in, say, Quake III Arena. Everything is made up of tiny triangles, and vertices are the corners of those triangles. Each vertex carries data about its position within the scene, colour, texture coordinates and various other pieces of information. All of this is pumped into and out of the GeForce 3's GPU, where the Vertex Shader does its work. Unlike previous GPUs, which weren't capable of meddling with vertex information before the 3D-pipeline applies Transform & Lighting calculations, the GeForce 3 actually allows programmers to alter the information contained within the vertices. Although a fairly simple step, it is one that opens countless doors to new technological breakthroughs. The Vertex Shader allows developers to take advantage of all sorts of visual effects that will usher in the "photo-realistic" gaming age. Take for example layered fog. Because fog data is stored in vertices, like so much of the data that has previously forced developers down narrow visual avenues, it can be manipulated to determine the intensity of the fog in various places. Now think about what else can be fiddled using the Vertex Shader. How about per pixel bump mapping, reflection and refraction to name but a few? And the effects that can be produced by the Shader are, as Steve Jobs put it at MacWorld, amazing. Motion blur and more human-like movement are barely the start of it. 
Technically speaking, only 128 instructions can be actioned per program within the vertex shader (hardly "nfinite"), but the changes are certainly enough to revolutionize the way developers deal with this sort of information. This is one reason why a lot of people are very excited about the Xbox, which will have an updated GeForce 3 GPU built into it from the get go.
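To make the layered fog example a little more concrete, here is a minimal sketch in Python of what "running a small program on every vertex" amounts to. It is not NVIDIA's instruction set or any real API: the `Vertex` fields, the `layered_fog_program` function and the fog heights are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Vertex:
    # Illustrative per-vertex data; a real vertex format carries more than this.
    position: tuple   # (x, y, z) within the scene
    colour: tuple     # (r, g, b)
    uv: tuple         # texture coordinates
    fog: float = 0.0  # 0.0 = clear, 1.0 = fully fogged


def layered_fog_program(vertex, fog_bottom=0.0, fog_top=2.0):
    """Hypothetical per-vertex program: fog is thickest at fog_bottom
    and fades out linearly until it vanishes at fog_top."""
    height = vertex.position[1]
    density = (fog_top - height) / (fog_top - fog_bottom)
    # Clamp to the 0..1 range so vertices above or below the layer behave.
    return replace(vertex, fog=min(1.0, max(0.0, density)))


def run_vertex_shader(vertices, program):
    """Apply the same small program to every vertex, one at a time."""
    return [program(v) for v in vertices]
```

Feeding in vertices at heights 0, 1 and 5 would give fog values of 1.0, 0.5 and 0.0 with the assumed layer, which is the "intensity of the fog in various places" idea in miniature.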

Pixel Shader

The Pixel Shader is the other main component in the nfiniteFX Engine. Just like its partner in crime, the Vertex Shader, the Pixel Shader is capable of taking data and applying custom programs to create new and exciting effects. Interestingly, the Vertex Shader is getting a lot more press from NVIDIA, but as anybody who has witnessed the Pixel Shading routines in 3D Mark 2001 will testify, this stuff is dynamite as well. The Pixel Shader kicks in after the Vertex Shader has done its work with transformed and lit vertices, taking the pixels provided by the Triangle Setup and Rasterization unit directly before it in the 3D pipeline, and performing its magic upon them. Put simply, pixel rendering is achieved by combining the colour and lighting information with texture data to pick the correct colour for each pixel. This is how things have been done since the year dot, but unlike its predecessors the GeForce 3 with its Pixel Shader can take things one step further, running custom texture address operations on up to four textures at a time, then eight custom texture blending operations, combining the colour information of the pixel with data from up to four different textures. Following this, a further combiner adds specular lighting and fog effects, before the pixel is alpha-blended, defining its opacity. The GeForce 3 also performs lookup operations on the z-value of where the pixel is meant to appear to determine whether or not it needs to be drawn. This is a crude method of culling unnecessary pixels, similar in certain ways to the z-buffer manipulation techniques used by ATI in its Radeon line of graphics cards.
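The order of operations described above (combine colour with up to four textures, add fog, then alpha-blend) can be sketched roughly as follows. This is a simplified illustration in Python, not the hardware's actual combiner logic: the function names are made up, simple multiplication stands in for whichever blending operation a programmer would choose, and specular lighting is left out for brevity.

```python
def modulate(a, b):
    """Multiply two RGB colours component by component."""
    return tuple(x * y for x, y in zip(a, b))


def lerp(a, b, t):
    """Blend from colour a towards colour b by the fraction t (0..1)."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def shade_pixel(base_colour, texels, fog_colour, fog_amount, alpha, dest_colour):
    """Hypothetical single-pass pixel pipeline.

    base_colour: lit colour interpolated from the vertices
    texels:      colours sampled from up to four textures
    dest_colour: what is already in the frame buffer at this pixel
    """
    if len(texels) > 4:
        raise ValueError("the article describes at most four textures per pass")
    colour = base_colour
    for texel in texels:
        colour = modulate(colour, texel)           # texture blending stage
    colour = lerp(colour, fog_colour, fog_amount)  # final combiner: fog
    return lerp(dest_colour, colour, alpha)        # alpha blend (opacity)
```

The point of the sketch is only the ordering: all the texture work for a pixel happens inside one call, before fog and opacity are applied.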

Passed out?

Fillrate is a topic that many people have brought up with regard to the GeForce 3, because its raw fillrate numbers are nigh-on identical to those of the GeForce 2 GTS. Each of the card's pipelines can deal with two texels per clock cycle, so obviously when more than two textures are used it requires extra clock cycles. With a clock frequency of 200MHz multiplied by four pixel pipelines, you have a fill rate of 800MPixel/s with one or two textures per pixel, or 400MPixel/s if three or four are used. The texel fillrate is 800MTexel/s for one texture per pixel, 1600MTexel/s for two and 1200MTexel/s for three. These obvious similarities with the GeForce 2's NVIDIA Shading Rasterizer were cause for some concern.

As if to demonstrate how disingenuous this sort of shock data can be though, NVIDIA's Pixel Shader can actually allow up to four textures per pass, despite its two texels per clock cycle limit. So whereas the GeForce 2 requires multiple passes for three or four textures per pixel, and each pass is done in one clock cycle, the GeForce 3's Pixel Shader may require another clock cycle to allow for three or four textures, but it still only needs one pass. A techy distinction, and one that's difficult to spot if you only count clock cycles or check theoretical fill rates. The good news is that the GeForce 3 technique saves on memory bandwidth, something that is very important these days.

The Pixel Shader can also be programmed, although unlike the Vertex Shader the Pixel Shader can only apply 12 instructions, four of them texture address operations and the other eight blending operations. These can be helped along by the Vertex Shader, which of course deals with data prior to the Pixel Shader. Like the Vertex Shader, the Pixel Shader helps to open doors for programmers. The question of when we'll see anything pass through them is a bit of a moot point, but our guess would be Christmas. Expect to hear a lot more from the likes of John Carmack about the GeForce 3's feature set and the relevance of it in upcoming 3D games.
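For anyone who wants to check the fillrate sums, the arithmetic can be written out as a short Python sketch. The clock speed, pipeline count and texels-per-clock figures are the ones quoted in this review; the function names are simply illustrative, and the per-pass texture limits are the two (GeForce 2) and four (GeForce 3) described above.

```python
import math

CORE_CLOCK_MHZ = 200               # ELSA Gladiac 920 core clock, as quoted
PIXEL_PIPELINES = 4                # four pixel pipelines
TEXELS_PER_PIPELINE_PER_CLOCK = 2  # two textures applied per clock


def fill_rates(textures_per_pixel):
    """Return (MPixel/s, MTexel/s) for a given number of textures per pixel."""
    # Three or four textures need a second clock cycle per pixel.
    clocks = math.ceil(textures_per_pixel / TEXELS_PER_PIPELINE_PER_CLOCK)
    mpixels = CORE_CLOCK_MHZ * PIXEL_PIPELINES // clocks
    return mpixels, mpixels * textures_per_pixel


def passes_needed(textures_per_pixel, max_textures_per_pass):
    """Rendering passes required: the per-pass limit is 2 on the
    GeForce 2 and 4 on the GeForce 3, going by the figures in the text."""
    return math.ceil(textures_per_pixel / max_textures_per_pass)


for n in range(1, 5):
    mpix, mtex = fill_rates(n)
    print(f"{n} texture(s): {mpix} MPixel/s, {mtex} MTexel/s, "
          f"GeForce 2 passes: {passes_needed(n, 2)}, "
          f"GeForce 3 passes: {passes_needed(n, 4)}")
```

The theoretical rates fall at three textures on both chips, but only the older one has to go back to memory for a second pass, which is where the bandwidth saving comes from.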

What use is it now?

This really is the question that deserves answering. When the GeForce 256 first came along, T&L was the future, and everything would use it in a year's time. Obviously this isn't quite how things turned out; in fact a lot of new games still don't use T&L, although support for it is becoming increasingly common. So if you've got a bit of cash to spare and badly need to get rid of that shocking TNT2 Ultra or Voodoo 3 3500, just what does the GeForce 3 offer you now that you wouldn't be better off waiting to buy in a year's time while using a Kyro II or GeForce 2 Ultra in the interim? The most obvious point is its performance in current 3D games and how it shapes up against other cards. Obviously, if performance is out there and above what we currently have, it'll certainly be worth considering as a lasting performance leader. Cue obligatory Quake III benchmarks? Sure, but we'll digress a bit as well. As a footnote, throughout our benchmarking we are using drivers that NVIDIA have confirmed are release-quality. We were also given permission to flash our card's BIOS to the latest version. Although your mileage may vary, our results are damn near what you will end up with if you purchase a GeForce 3.

By the by, we're not using the classic demo001, we're using a more hardcore Quake III Arena 1.27g optimised demo, which pushes video cards further. The GeForce 3 is a couple of frames behind, but this is obviously unnoticeable in general play. Ironically though, we reckon the programmable GPU is actually accountable for the loss in framerate, since Quake III does pretty well with the "classic" GeForce cards' hardwired T&L unit. The Radeon, for all its splendour, can't really keep up. Let's bump up the resolution.

The improvement on the part of the GeForce 3 over its closest rival, the GeForce 2 Ultra, is largely down to the efficiency with which it handles its memory bandwidth. Both cards have pretty much the same amount of bandwidth to play with, but the GeForce 3 has a very refined, very well thought-out way of dealing with it.

This is the most impressive result I have ever seen in this benchmark. This isn't even indicative of general Quake III Arena gameplay, but it is a very difficult benchmark to excel in. The GeForce 3 stomps all over its predecessors, and the Radeon might as well not feature at all.

Fillrate testing

Quake III may be an excellent OpenGL benchmark, but Serious Sam is an equally good fillrate benchmark too. All right, so in theory we already know that the GeForce 3's fillrate should not exceed that of the GeForce 2 Ultra by more than a handful of MPixel/s, but as these benchmarks demonstrate, and as I've enthused above, the GeForce 3 simply handles its memory bandwidth better than anything else on the market.

The difference between last year's technology, the GeForce 2 GTS, and the GeForce 3 is almost a 100% improvement. The Ultra is also stamped firmly out by the GeForce 3's impressive ability to marshal its resources. The Radeon also puts up a strong performance, arguably because of its ability to cull unnecessary overdraw. The Kyro II should also be a strong performer in this category, although at the time of writing we didn't have one available to test.

Over a 100% improvement on the GeForce 2 GTS. Changing from single to multitexture fillrate doesn't affect performance much on the GeForce line, and again it's the efficiency of the GeForce 3 that helps it win through.

Anti-aliasing

Now, the other thing the GeForce 3 does pretty well from the get go is anti-aliasing. In previous graphics generations, the technology to use Full-Scene Anti-Aliasing has been hidden away in menus, despite its prevalence in advertising. The main reason for this is the performance hit involved. Moving from no FSAA even to 2xFSAA is an incredibly bold step, and 4xFSAA, for all its beauty, is simply too much for some cards, whatever your CPU and the state of your system. With the GeForce 3 NVIDIA aims to put paid to that, as ATI ever so nearly managed to do with the Radeon. Anti-aliasing has been an enormous topic in the 3D gaming world since 3dfx (at that point, little 'd') started to introduce it with its ill-fated Voodoo 4/5 line. Typical aliasing effects have been given the crude nickname "jaggies" - the sharp, unpleasant edges found on every surface in most 3D games. Jaggies show up when two triangles intersect without the same surface angle, and spoil the image. With bilinear and trilinear filtering, you can at least minimise this effect, but applying a filter to an entire frame is a bit wasteful when only certain areas are affected. Although the GeForce 3 does filter the entire frame for quite a performance hit, its already impressive performance more than makes up for it, and anti-aliasing is finally something of a reality.

The most common way of dealing with anti-aliasing to ensure sub-pixel level accuracy (the most difficult part of the illusion) is super-sampling. The idea is simple and effective, but also very performance degrading. It works by rendering each frame at a certain number of times its actual resolution. So at 2xFSAA it's rendering each frame twice as large, and at 4xFSAA four times as large. As a result, each pixel is initially rendered as two or four, each half or a quarter of the size of the final on-screen pixel. The filtering of those pixels generates an anti-aliased look. Imagine the performance hit of rendering double the number of on-screen pixels to achieve this though, let alone four!

The alternative to super-sampling is multi-sampling, the technique used by GeForce 3. Multi-sampling works by rendering multiple samples of a frame, combining them at the sub-pixel level and then filtering them for an anti-aliased look. It sounds complex, but all you really need to know is that the effect is more or less the same as super-sampling, and because the card doesn't waste its time creating detail for higher resolutions, the performance hit isn't so great. Multi-sampling encompasses the general FSAA modes found on the GeForce 3. However, also onboard is the HRAA, or High-Resolution Anti-Aliasing engine. The GeForce 3 actually has an entire technology unit for dealing with AA now, which creates samples of a frame, stores them in a certain area of frame buffer and filters the samples before putting them in the back buffer. This makes the anti-aliased frame fully software accessible. Phew.

Quincunx, although it sounds somewhat lewd, is actually something you'll want to give serious thought to as well. It's a multi-sampling trick which generates the final anti-aliased pixel by filtering five pixels for only two samples. This one had me stumped to start with, but the logic is unsurprisingly sharp. Bottom line is, it comes close to the quality of 4xFSAA but requires the generation of only two samples. The 3D scene is rendered normally, but the Pixel Shader stores each pixel twice, which saves on rendering power but uses twice the memory bandwidth. Once the last pixel of the frame is rendered, the HRAA engine of the GeForce 3 pokes one sample buffer half a pixel in each direction, which has the effect of surrounding the first sample by four pixels of the second sample, diagonally a minute distance away. The HRAA engine filters over those five pixels to create the anti-aliased one. The result looks almost as perfect as 4xFSAA, but doesn't cost as much in terms of performance. Genius? Pretty much!
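If the sample shuffling is hard to picture, the two filters can be sketched in a few lines of Python using plain lists of greyscale values. This is an illustration under stated assumptions rather than a description of what the HRAA engine does internally: the function names are invented, edge pixels are handled by simply clamping to the border, and the filter weights (one half for the centre sample, one eighth for each of the four diagonal neighbours) are an assumption, since NVIDIA's exact weighting is not given here.

```python
def supersample_resolve(hi_res, factor=2):
    """Super-sampling: average each factor x factor block of an oversized
    frame down to one on-screen pixel (factor=2 gives four samples per pixel)."""
    height, width = len(hi_res) // factor, len(hi_res[0]) // factor
    return [
        [
            sum(hi_res[y * factor + dy][x * factor + dx]
                for dy in range(factor) for dx in range(factor)) / factor ** 2
            for x in range(width)
        ]
        for y in range(height)
    ]


def quincunx_resolve(centre, corner, centre_weight=0.5):
    """Quincunx-style filter over two screen-sized sample buffers.

    centre[y][x] is the sample at the middle of pixel (x, y);
    corner[y][x] is the second sample, offset half a pixel diagonally,
    so each pixel is surrounded by four corner samples.
    The weights are assumed, not taken from NVIDIA documentation.
    """
    height, width = len(centre), len(centre[0])
    corner_weight = (1.0 - centre_weight) / 4
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            x1 = min(x + 1, width - 1)   # clamp at the right edge
            y1 = min(y + 1, height - 1)  # clamp at the bottom edge
            corners = (corner[y][x] + corner[y][x1]
                       + corner[y1][x] + corner[y1][x1])
            row.append(centre_weight * centre[y][x] + corner_weight * corners)
        out.append(row)
    return out
```

Note that the super-sampling version needs four rendered values per final pixel at the 4x setting, while the Quincunx version reads five values per pixel but only ever had two generated for it, which is the whole trick.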

Anti-aliasing benchmarks

So you've read the theory, how about the practice? Although it's difficult to benchmark appearance, I'd say the effect from testing is exactly as NVIDIA claim it to be. In the meantime, here are some benchmarks taken with Serious Sam to observe real world Full-Scene Anti-Aliasing performance on the GeForce 3. Enjoy, I need some more coffee.

Because of the differences between the performance of Quincunx AA on a GeForce 3 and 2xFSAA on a GeForce 2 Ultra, we thought we would first demonstrate 4xFSAA in a lowish resolution. The results here are more than likely down to the programmable GPU again. Things get better as usual if we up the resolution.

You'll notice firstly that there's no Radeon result here. Things were literally unplayable so I saw no point in continuing the test. The GeForce 2 GTS takes a beating, and the GeForce 2 Ultra demonstrates that you can overclock the GeForce 2 core all you want, but unless you improve your AA and memory bandwidth management, you'll never keep up with something as powerful as the GeForce 3. Quincunx benchmarks were more interesting. Of course, no other card supports Quincunx, so benchmarking against itself in 4xFSAA seemed like a good idea. Here are the results:

A clear demonstration that despite the barely perceptible difference in visual quality, Quincunx packs quite a punch. 60 frames per second is a perfectly reasonable framerate, and 1024x768 is a perfectly reasonable resolution. And remember, this is a very tough benchmark. In general gameplay, expect even higher scores.

Lightspeed Memory Architecture

Before we come to any conclusions, let's first consider perhaps the biggest and most important feature of the GeForce 3, its "Lightspeed Memory Architecture". This little buzzword feature helps the GeForce 3 overcome its memory bandwidth bottlenecks and achieve amazing fill and frame rates. Hold on to your hats, it's another difficult and technically minded solution to a common problem. I don't envy the job of NVIDIA's PR machine in marketing this stuff to the mainstream, I really don't. The ins and outs, located in the deepest, darkest technical document I could locate, go something like this...

Memory bandwidth is often quoted on the packaging of graphics cards, and those values are always the very peak bandwidth possible. The same is true of, to quote a recent example, DDR system memory. In reality, although PC2100 is capable of shovelling data at 2.1GB/s, it does nothing of the sort in current applications. For 3D games, and 3D rendering in general, it seems that memory latency is of more importance than peak bandwidth. The reason for this is that under a lot of applications, pixel rendering reads from memory areas that are quite a distance apart, and all sorts of memory paging has to take place to get the two to meet, which wastes valuable bandwidth. As geometric detail in 3D scenes is obviously on the increase, more triangles per scene means smaller ones, and the smaller the triangle, the less efficiently the memory controller handles it. So, peak bandwidth is largely irrelevant if only a fraction of it is being used properly.

To combat this, NVIDIA dreamt up the "crossbar memory controller", which consists of four single 64-bit wide memory sub-controllers. All of these can interact with one another to minimise the above issues and make memory access as efficient as possible. As demonstrated in our fillrate benchmarks, the level of efficiency in the GeForce 3's memory controller makes the GeForce 2 Ultra's theoretical fillrate unimportant. The GeForce 3 can still surpass it under many circumstances.

The crossbar memory controller makes up the first part of the GeForce 3's Lightspeed Memory Architecture. The second part helps to reduce z-buffer reads. Obviously this is useful, because z-buffer reads are expensive and wasting bandwidth on hidden pixels is daft. As mentioned previously, in the Pixel Shader part of the GeForce 3's 3D pipeline, there is a degree of hidden pixel removal, which helps to reduce overdraw, but it goes further than that. For starters, the z-buffer is the most commonly read frame buffer of all, so reducing access to it is more important than anything. Firstly they use lossless compression to reduce access by up to 75% depending on how much data is changing hands, and by manipulating the z-buffer as ATI did with the Radeon's Hyper-Z technology, NVIDIA can reduce memory bandwidth consumption again, by using something they call Z-Occlusion Culling (which takes place between Triangle Setup/Rasterizing and the Pixel Shader) to determine if a pixel needs to be drawn at all.
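The benefit of throwing away hidden pixels before any expensive work is done on them can be shown with a toy renderer. The Python below is a rough sketch only: it is not how Z-Occlusion Culling is implemented in silicon, the `render` function and its fragment format are hypothetical, and the "shaded" counter merely stands in for the texture fetches and blending that a real card would spend on each pixel.

```python
def render(fragments, width, height, early_z=True):
    """Draw fragments into a tiny frame buffer and count how many get shaded.

    fragments: iterable of (x, y, z, colour); a smaller z is nearer the viewer.
    early_z:   when True, hidden fragments are rejected before shading.
    Returns (frame, shaded_count).
    """
    z_buffer = [[float("inf")] * width for _ in range(height)]
    frame = [[None] * width for _ in range(height)]
    shaded = 0
    for x, y, z, colour in fragments:
        if early_z and z >= z_buffer[y][x]:
            continue  # hidden behind something already drawn: skip the work
        shaded += 1   # stand-in for texture reads and blending
        if z < z_buffer[y][x]:
            # Ordinary depth test: only the nearest fragment ends up on screen.
            z_buffer[y][x] = z
            frame[y][x] = colour
    return frame, shaded
```

Drawing a near fragment followed by a far one at the same pixel costs one shading operation with the early test and two without, while the final image is identical. The saving depends on draw order, since a far fragment drawn first cannot be rejected in this simple scheme.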

Visual Quality

Ultimately, there's a lot of spiel and fancy technical know-how involved in unravelling the mystery of what drives the GeForce 3 and what makes it better, but there is very little if any involved in determining whether it looks good. The best benchmark for whether the GeForce 3 looks as good as its competitors is you. Having used the GeForce 3 for quite a while now, along with a similar system using a Radeon 64MB DDR, I can quite honestly say that I have noticed a difference, and disappointingly, it's in the Radeon's favour. Although the GeForce 3 definitely handles matters a lot better than the Radeon (and is the first graphics card on our testbed to run 3D action monster Tribes 2 flawlessly at high resolutions), its competitor from ATI just looks slightly sharper at times, and that sort of distinction is important. Using identical settings (1024x768x32 with 4xFSAA) and running Quake III Arena as a common example, the definition of the scene was simply superior on the ATI Radeon. Of course performance was in the GeForce 3's favour (the Radeon was almost unusable with those settings in Quake III). Whether this makes a difference will have to be decided on a personal level, but if you need a more common benchmark (after all, most potential GeForce 3 buyers will already own NVIDIA cards), the visuals of the GeForce 2 GTS are pretty much of the same quality under certain circumstances as the GeForce 3. In terms of performance though, as we have seen, the GeForce 2 gets beaten black and blue.

Pricing and Conclusion

The pricing of the GeForce 3 has been almost as hotly contested as its feature set, but like Intel, NVIDIA came to the conclusion that pricing high in a market where high end CPUs can be had for less than £100 would be disastrous. Like the 1.7GHz Pentium 4, the GeForce 3 has undergone several price reductions prior to launch, and now sits at an RRP of £399.99, with the card we used for testing in this review, the ELSA Gladiac 920, retailing at £349.99, and in practice, as little as £299.99 (from Dabs.com, who deserve a namedrop for the friendly reduction). At £299.99, the card is basically as good value as the GeForce 2 Ultra was in its heyday, and considering its performance and features it's as worthy of your money as anything else. The Radeon 64MB DDR is arguably the GeForce 3's most worthy competitor, but in a year's time I seriously doubt it will be able to compete with the GeForce 3 for buyers. Granted, in a year's time we'll probably have a GeForce 3 Ultra or something similar, but wouldn't you rather be wowed off your feet in the meantime? I know I would. The problem is that by recommending the GeForce 3, we are in danger of doing what our predecessors did when the original GeForce 256 was released - recommending a new card based on its future capabilities. So our advice is to glance at the score below, give it due consideration, but also to read over what we've said and have a big think about what you want from gaming, visually, within the next year. With the GeForce 3 as it is, finding a common conclusion is impossible.