Xbox says it acted on 7.3m community violations in first six months of this year

As per inaugural Digital Transparency Report.

Xbox has released its first-ever Digital Transparency Report, offering insight into the work its community and moderation teams have done over the last six months in the name of player safety - including the news that it has taken action on 7.3m community violations during that time.

Microsoft says it's publishing its inaugural Digital Transparency Report as part of its "long-standing commitment to online safety", and that it aims to release an updated report every six months so it can address key learnings and do more to "help people understand how to play a positive role in the Xbox community."

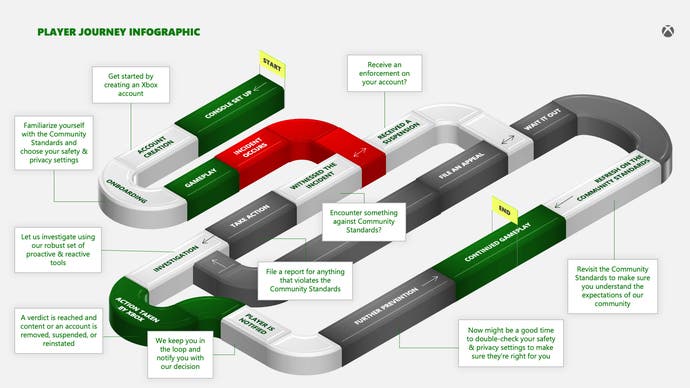

Much of the document is spent reiterating the various tools and processes Microsoft and Xbox players have at their disposal to ensure users remain safe and community guidelines are adhered to, ranging from parental controls to reporting.

However, the latter half of Microsoft's report goes into more specific detail about its actions over the last six months. It breaks down the number of player reports versus the number of enforcements (that is, instances where content is removed, accounts are suspended, or both) across policy areas including cheating, inauthentic accounts, adult sexual content, fraud, harassment and bullying, profanity, and phishing.

In total, Microsoft says it received 33m reports from players between 1st January and 30th June this year, with 14.16m (43 percent) related to player conduct, 15.23m (46 percent) focused on communications, and 3.68m (11 percent) based on user-generated content.

Of those reports, 2.53m resulted in what Microsoft calls reactive enforcements, and an additional 4.78m enforcements were made proactively (that is, were made using "protective technologies and processes" before an issue was raised by a player), bringing the total number of enforcements up to 7.31m. Notably, 4.33m (57 percent of all enforcements) were related to cheating and inauthentic accounts, based on activity detected prior to reports from players.
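For anyone who wants to check the sums, the figures quoted above can be tallied in a few lines of Python. This is only a rough sketch: the variable and function names are made up for illustration, the values are the rounded millions given in this article rather than Microsoft's raw data, and the percentages are computed against the sum of the three quoted categories.

```python
# Figures in millions, as quoted in the article (rounded, not raw data).
reports = {
    "player conduct": 14.16,
    "communications": 15.23,
    "user-generated content": 3.68,
}
reactive, proactive = 2.53, 4.78


def percent_shares(counts):
    """Return each category's share of the total, rounded to a whole percent."""
    total = sum(counts.values())
    return {name: round(100 * value / total) for name, value in counts.items()}


# Round the sums to two decimals to hide floating-point noise.
total_reports = round(sum(reports.values()), 2)
total_enforcements = round(reactive + proactive, 2)
report_shares = percent_shares(reports)

print(f"Total reports: {total_reports}m")
print(f"Total enforcements: {total_enforcements}m")
for name, share in report_shares.items():
    print(f"{name}: {share} percent")
```

The three report categories add up to roughly 33m and the two enforcement types to 7.31m, in line with the totals Microsoft gives.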

The full transparency report provides an interesting glimpse at the moderation challenges a platform like Xbox faces, as well as the steps Microsoft has so far taken to combat them.