In Theory: Can the Xbox One cloud transform next-gen gaming?

Or has Microsoft got its head in the clouds?

Xbox One will sit in your living room and burn through not the paltry 1.2 teraflops of computing power as was rumoured, but almost five teraflops - as much as the flagship £800 NVIDIA Titan graphics card. And then it will get faster and faster over time. At least, that's if the latest Microsoft PR campaign is to be believed. But how realistic is it to move game processing to "the cloud" and keep adding to the resources developers have available? Do Microsoft's claims have any basis in reality? Is this a tactical play to compete against the tangibly superior fixed-spec of the PlayStation 4 or is this really just wishful thinking?

Prior to Xbox One's reveal, gamers were already well-informed of the hardware specs thanks primarily to the Microsoft whitepaper leaks found on VGLeaks. Next to PS4, Durango, as it was known, looked like a significantly less capable machine - the same CPU but with lower memory bandwidth and an inferior graphics core. But anyone watching the reveal and subsequent architecture interviews with Microsoft's Larry Hryb will have seen a concerted effort by the firm to address this with numerous references to "the power of the cloud".

Marc Whitten, chief product officer, described the upgrading of Live to 300,000 servers and put the computational power into perspective by suggesting that this would have enough CPU power to match the potential of every computer in the world, judged by 1999 standards. Matt Booty, general manager of Redmond Game Studios and Platforms, recently told Ars Technica, "a rule of thumb we like to use is that [for] every Xbox One available in your living room we'll have three of those devices in the cloud available," suggesting some 5TF of processing power for your games, a sentiment echoing Australian Microsoft spokesperson Adam Pollington's assertion that Xbox One was 40 times more powerful than the Xbox 360.

With such phenomenal amounts of power available, Xbox One is potentially some two-and-a-half times more powerful than PS4 and able to go toe to toe with expensive top-end gaming PCs. However, there are numerous technical barriers to realising this that bring into question the validity of Microsoft's statements.
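The arithmetic behind those headline figures is easy to reproduce. The sketch below takes the rumoured 1.2 teraflop console figure and Booty's three-to-one rule of thumb from the text; the 1.84 teraflop PS4 figure is Sony's published spec rather than something stated in this article, so treat it as an assumption.

```python
# Back-of-envelope check of the "almost five teraflops" claim.
LOCAL_TFLOPS = 1.2            # rumoured Xbox One figure quoted in the article
CLOUD_UNITS_PER_CONSOLE = 3   # Matt Booty's rule of thumb
PS4_TFLOPS = 1.84             # assumption: Sony's published figure, not from this article

def notional_tflops(local_tflops, cloud_units):
    """Local console plus N console-equivalents of server power."""
    return local_tflops * (1 + cloud_units)

total = notional_tflops(LOCAL_TFLOPS, CLOUD_UNITS_PER_CONSOLE)
print(f"Notional total: {total:.1f} TF")
print(f"Ratio to PS4:   {total / PS4_TFLOPS:.1f}x")
```

The sum only holds if server power can simply be added to local power, which is exactly the assumption the rest of this piece tests.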

Let's start with a quick look at what they mean by 'cloud computing'. Before PR and marketing people brought their fancy buzzwords into the equation, 'cloud computing' was known as 'distributed computing'. This means taking a computational workload and distributing it over a network across available processors.

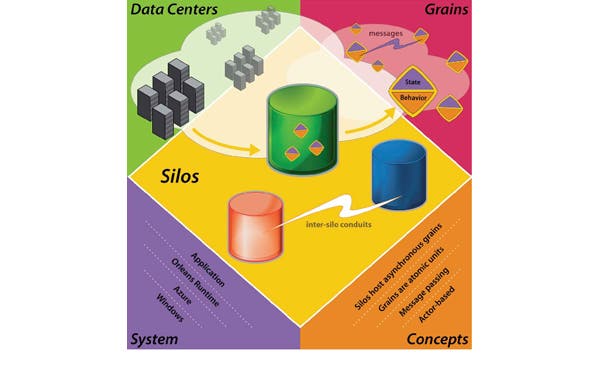

The idea of cloud computing is to create large server farms full of generic processing power and to turn them from one job to another as needed. Microsoft's existing cloud platform is called Azure, started in 2010, and it's been steadily growing in market share against rival products from Amazon and Google. For developers to be able to use cloud processing, they need both a structure for turning game code into jobs and a means to interface with the servers. This game code model has already been pioneered with this generation's multi-core processors. Developers quickly learned to break their games into jobs on PS3's Cell processor and use a scheduling program to prioritise and distribute jobs across available resources. As for the server interface, Microsoft has developed 'Orleans' and we know it's being used for Halo, but we don't know how.
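To make the job model concrete, here is a minimal sketch of a scheduler that takes jobs in priority order and hands each one to the least-loaded worker. The `Job` class, the `schedule` function and all of the costs are hypothetical; this is not how Orleans or any console SDK actually works, just an illustration of the idea.

```python
import heapq
from dataclasses import dataclass, field

@dataclass(order=True)
class Job:
    priority: int                          # lower number = more urgent
    name: str = field(compare=False)
    cost_ms: float = field(compare=False)  # illustrative processing cost

def schedule(jobs, worker_count):
    """Greedy scheduling: most urgent job first, onto the least-loaded worker."""
    queue = list(jobs)
    heapq.heapify(queue)
    # Each worker entry is (busy_ms, worker_id, assigned_job_names).
    workers = [(0.0, wid, []) for wid in range(worker_count)]
    heapq.heapify(workers)
    while queue:
        job = heapq.heappop(queue)
        busy_ms, wid, names = heapq.heappop(workers)
        names.append(job.name)
        heapq.heappush(workers, (busy_ms + job.cost_ms, wid, names))
    return {wid: (busy_ms, names) for busy_ms, wid, names in workers}

jobs = [
    Job(0, "collision", 2.0),
    Job(1, "animation", 3.0),
    Job(2, "ambient_ai", 4.0),
    Job(0, "input", 1.0),
]
for wid, (busy_ms, names) in sorted(schedule(jobs, worker_count=2).items()):
    print(f"worker {wid}: {busy_ms:.1f}ms {names}")
```

Whether the workers are local cores or remote servers makes no difference to the scheduler itself. What changes is how long each result takes to come back.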

With those pieces in place, it's certainly possible that developers could send computational requests to servers and access potentially limitless processing power, depending on how much Microsoft wants to spend on its servers. However, there are another two significant pieces of the puzzle: latency and bandwidth. Microsoft has acknowledged the challenge of the former, but hasn't commented at all on the latter and it's a fundamental bottleneck to the concept.

The two challenges - latency and bandwidth

Latency is going to affect how immediate the computational requests of the cloud can be. When a game needs something processed, it sends a request to a server and waits for the reply. Even assuming instantaneous processing thanks to the power of the servers, the internet is incredibly slow in terms of real-time computing. A request from your console has to find its way through numerous routers and servers until it reaches its destination, and the results have the same labyrinthine journey back. To put this in perspective, when the logic circuits of a CPU want some data, they have to wait a few nanoseconds (billionths of a second) to retrieve it from its cache. If not in cache, the CPU has to wait as much as a few hundred nanoseconds to fetch the data from main RAM - and this is considered bad news for processor efficiency. If the CPU were to ask the cloud to calculate something, the answer won't be available for potentially 100ms or more, depending on internet latency - some 100,000,000 nanoseconds!
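Putting the three latency tiers on a single scale shows how wide the gap is. In this rough sketch the cache and RAM values are illustrative picks for "a few" and "a few hundred" nanoseconds, not measured figures.

```python
# Rough latency scale, in nanoseconds (cache and RAM values are illustrative).
NS_PER_MS = 1_000_000

LATENCY_NS = {
    "cache hit": 3,
    "main RAM": 300,
    "cloud round trip": 100 * NS_PER_MS,  # the 100ms figure from the text
}

def slowdown(source, baseline="cache hit"):
    """How many times slower one data source is than another."""
    return LATENCY_NS[source] / LATENCY_NS[baseline]

for name, ns in LATENCY_NS.items():
    print(f"{name:>16}: {ns:>11,} ns ({slowdown(name):,.0f}x a cache hit)")
```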

As a game has only 33 milliseconds to render a frame at 30FPS, such long delays mean the cloud cannot be relied upon for real-time, immediate results per frame. If you crash your Forza car into a wall, you don't want to see your vehicle continuing through to the other side of the scenery for the next three or four frames (even longer on those inevitable internet hiccups) until the physics running on the cloud return with the information that you've crashed.
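The "three or four frames" figure falls straight out of the frame budget. A small sketch, where `frames_elapsed` is a hypothetical helper and the round-trip times are example values:

```python
import math

def frames_elapsed(round_trip_ms, fps=30):
    """Whole frames rendered before a cloud reply can be used."""
    return math.ceil(round_trip_ms * fps / 1000)

for rtt_ms in (100, 120, 250):
    print(f"{rtt_ms}ms round trip at 30FPS: {frames_elapsed(rtt_ms)} frames behind")
```

At 60FPS the same round trip costs twice as many frames, so faster games are hit harder still.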

The latency issue is something Microsoft recognises, with Matt Booty saying, "Things that I would call latency-sensitive would be reactions to animations in a shooter, reactions to hits and shots in a racing game, reactions to collisions. Those things you need to have happen immediately and on frame and in sync with your controller. There are some things in a video game world, though, that don't necessarily need to be updated every frame or don't change that much in reaction to what's going on."

With latency an issue, the scope for cloud computation is limited to a subset of game tasks. Okay, we can work with that, but we still have the last great consideration - bandwidth.

"Wildly variable levels of bandwidth worldwide are another problem, but even the fastest consumer-level internet connections in no way compare to the internal bandwidth of a modern console."

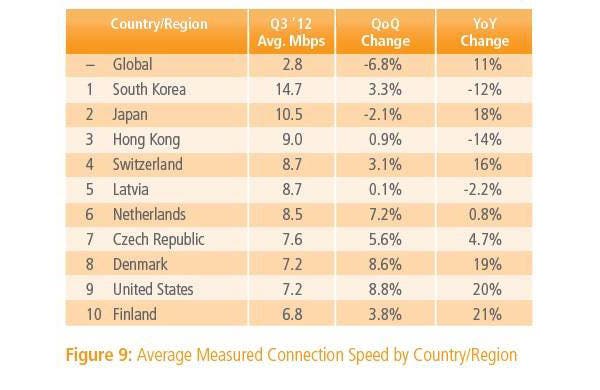

The quarterly Akamai state-of-the-internet report keeps us up to date on bandwidth available on the real-world internet. Average broadband speeds in the developed world struggle to reach over 8Mbps as of Q3 last year - that's only one megabyte per second. This means that whatever cloud computing power is available, consoles will have available to them an average of 1MB/s of processed data. If we compare that to the sort of bandwidth consoles are used to, the DDR3 of Xbox One is rated at around 68,000MB/s, and even that wasn't enough for the console and had to be augmented with the ESRAM.

The PS4 memory system allocates around 20,000MB/s for the CPU of its total 176,000MB/s. The cloud can provide one twenty-thousandth of the data to the CPU that the PS4's system memory can. You may have an internet connection that's much better than 8Mbps of course, but even superfast fibre-optic broadband at 50Mbps equates to an anaemic 6MB/s. This represents a significant bottleneck to what can be processed on the cloud, and that's before upload speed is even considered. Upload speed is typically a small fraction of download speed, and this will greatly reduce how much information a job can send to the cloud to process. Taking the Forza crash example, if the console has to upload both the car collision mesh and scenery mesh to the cloud for it to calculate whether they have collided or not, that's going to take several seconds.
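The conversions above can be checked in a few lines. The download speeds and memory bandwidth come from the text; the 1Mbps upload speed and the 1MB combined mesh size are hypothetical values chosen purely for illustration.

```python
def mbps_to_mb_per_s(megabits_per_second):
    """Convert a link speed in megabits/s to megabytes/s (8 bits per byte)."""
    return megabits_per_second / 8

def transfer_seconds(size_mb, link_mbps):
    """Time to move size_mb megabytes over a link rated in megabits/s."""
    return size_mb / mbps_to_mb_per_s(link_mbps)

PS4_CPU_BANDWIDTH_MB_S = 20_000   # figure quoted in the article

for link_mbps in (8, 50):
    mb_s = mbps_to_mb_per_s(link_mbps)
    ratio = PS4_CPU_BANDWIDTH_MB_S / mb_s
    print(f"{link_mbps}Mbps = {mb_s:.2f}MB/s, 1/{ratio:,.0f} of PS4 CPU memory bandwidth")

# Hypothetical upload: 1MB of collision meshes over a 1Mbps uplink.
print(f"Mesh upload: {transfer_seconds(1.0, 1.0):.0f} seconds")
```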

Of course, we wouldn't send data over the internet without compressing it first. It's through compression that OnLive manages to stream high-definition game video over those same slow internet connections. If OnLive can do it with games, why can't it be done with game data for cloud computing? The main issue here is that video can be compressed with lossy algorithms that throw away large amounts of data that the viewer is insensitive to, producing a video that looks largely like the uncompressed source while needing a fraction of the data. This isn't possible with game data like AI or physics states or models.

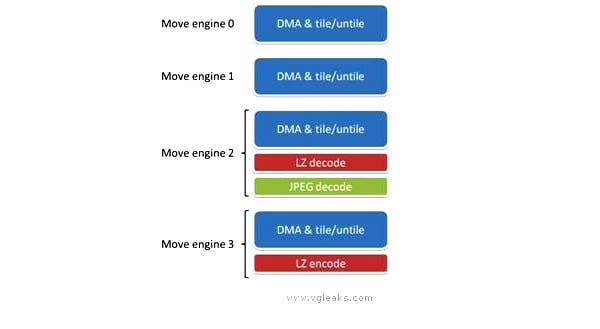

Various less efficient but non-destructive compression techniques can be used such as zip and Lempel-Ziv (LZ), and it's of note that Microsoft has included four dedicated 'Memory Move Engines' on its main processor, two of which have LZ abilities. One has LZ decode and the other LZ encode, meaning Microsoft has such interest in compressing data that it has dedicated silicon to the job instead of leaving it to the CPU. The raw spec of 200MB/s data decoding is certainly enough to handle any amount of data traffic coming from the internet at broadband speeds, but the inclusion of these engines sadly doesn't point conclusively to an intention to stream compressed assets from the cloud.

"There's very little in the official or unofficial leaked Xbox One specs to suggest that cloud support was integral to the design of the new console hardware."

It has long been faster to load and decompress assets from slow disk drives than to load uncompressed data, and these memory engines may just be present to facilitate such ordinary tasks. With full developer access, there's no reason to think developers won't use the memory engines with downloaded assets, but even then these cannot solve the bandwidth limitation problems of cloud computing. LZ compression typically achieves in the order of a halving of file sizes, effectively doubling the broadband connection speed to still insignificant rates.
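Lossless compression is easy to try with Python's standard `zlib` module, which implements DEFLATE (an LZ77 derivative with Huffman coding). That is not the same thing as the Xbox One's hardware LZ engines, and the synthetic data below is a hypothetical stand-in for game state, so the ratio it prints says nothing about real assets. The point of this sketch is only that every byte survives the round trip and that the bandwidth gain is a simple multiplier.

```python
import struct
import zlib

# Hypothetical stand-in for game state: 3,000 floats in a repeating pattern.
values = [(i % 50) * 0.5 for i in range(3000)]
raw = struct.pack("<3000f", *values)

compressed = zlib.compress(raw, 6)
restored = zlib.decompress(compressed)
assert restored == raw   # lossless: the exact bytes come back

def effective_mbps(link_mbps, compression_ratio):
    """Apparent link speed once payloads shrink by compression_ratio."""
    return link_mbps * compression_ratio

ratio = len(raw) / len(compressed)
print(f"{len(raw)} bytes -> {len(compressed)} bytes (ratio {ratio:.1f}:1)")
# The article's rule of thumb: roughly 2:1 on typical data.
print(f"8Mbps at 2:1 behaves like {effective_mbps(8, 2):.0f}Mbps")
```

Highly repetitive data like this compresses far better than real game data would, which is why the 2:1 rule of thumb is the more useful number.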

What can the cloud actually be used for?

What this means is that cloud computing cannot be used for real-time jobs, something Microsoft has admitted. What it could be used for though are large datasets that are non-time-critical and can be downloaded in advance to the HDD.

Microsoft readily acknowledges this. Matt Booty said, "One example of that might be lighting. Let's say you're looking at a forest scene and you need to calculate the light coming through the trees, or you're going through a battlefield and have very dense volumetric fog that's hugging the terrain. Those things often involve some complicated up-front calculations when you enter that world, but they don't necessarily have to be updated every frame. Those are perfect candidates for the console to offload that to the cloud - the cloud can do the heavy lifting, because you've got the ability to throw multiple devices at the problem in the cloud."

If we look at a typical game's requirements of its processors, we can look for opportunities to utilise the cloud. A typical game engine cycle consists of:

- Game physics (update models)

- Triangle setup and optimisation

- Tessellation

- Texturing

- Shading

- Various render passes

- Lighting calculations

- Post effects

- Immediate AI

- Ambient (world) AI

- Immediate physics (shots, collisions)

- Ambient physics

Of these, only the ambient background tasks and some forms of lighting stand out as candidates for remote processing.
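That filtering can be expressed as a simple threshold test. The task names below come from the list above, but every tolerance value is a hypothetical guess made for illustration: per-frame work gets a single 33ms frame, while background work is allowed to lag by a second or more.

```python
FRAME_MS = 1000 / 30   # one frame at 30FPS, roughly 33ms

# Hypothetical latency tolerances in milliseconds (illustrative only).
TASK_TOLERANCE_MS = {
    "Game physics (update models)": FRAME_MS,
    "Triangle setup and optimisation": FRAME_MS,
    "Tessellation": FRAME_MS,
    "Texturing": FRAME_MS,
    "Shading": FRAME_MS,
    "Various render passes": FRAME_MS,
    "Lighting calculations (pre-computable forms)": 5000,
    "Post effects": FRAME_MS,
    "Immediate AI": FRAME_MS,
    "Ambient (world) AI": 1000,
    "Immediate physics (shots, collisions)": FRAME_MS,
    "Ambient physics": 500,
}

def offload_candidates(tolerances_ms, round_trip_ms):
    """Tasks whose results can arrive a full round trip late without harm."""
    return [name for name, tolerance in tolerances_ms.items()
            if tolerance >= round_trip_ms]

for name in offload_candidates(TASK_TOLERANCE_MS, round_trip_ms=100):
    print(name)
```

Latency is only half the test. A task that tolerates the delay still has to fit its inputs and outputs through the available bandwidth.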

Lighting has been named as a possibility for cloud processing by Microsoft. Lighting has a history of pre-calculation, creating fixed data that is stored on disc and loaded into the game. Early use of this concept was "pre-baked" lightmaps, effectively creating textures with the lighting fixed in place, which could look fairly realistic but was static and only worked with non-interactive environments. Advances like pre-computed radiance transfer (PRT) have made pre-calculated lighting more dynamic, and the current state-of-the-art is Unreal Engine's Lightmass featured in the next-gen Unreal Engine 4. This pre-computes light volumes instead of the real-time calculations of Epic's former SVOGI technology which was deemed too computationally expensive. Although the cloud could not run SVOGI type dynamic lighting due to latency and bandwidth limits, it does offer the possibility of 'pre-calculating' lighting data for dynamic scenes.

Things like time-of-day can be uploaded to the server, and the relevant rendered lighting for the local area sent back over a few minutes. This data will be saved to HDD and retrieved as the player walks around. Delays in updating such subtle changes won't be apparent, so the problem is highly latency tolerant. Dynamic lights like muzzle flashes cannot be handled this way so the developers would still need to include real-time lighting solutions, but advanced lighting is one area the cloud could definitely contribute.

However, improvements in local rendering power and techniques have made real-time global illumination - realistic without the artifacts and limitations of prebaked lighting - a real possibility without needing servers. Crytek's cascaded light propagation volumes were shown running on a GTX 285 in 2009 and were extremely impressive. A future Battlefield game with destructible environments is going to want an immediate lighting solution as opposed to shadows and lighting updating a few seconds after every change.

"Although the cloud could not run real-time global illumination due to latency and bandwidth limits, it does offer the possibility of 'pre-calculating' lighting data for dynamic scenes."

Another known possibility for cloud computing is AI, not for direct interactions such as determining if an NPC should duck or shoot, but for background AI in living worlds like Grand Theft Auto and Elder Scrolls. The complexity of these games has always been limited by the console's resources, and AI has often been restricted to simple behaviour routines. Cloud computing could run world simulation and just update the player's local world over time, allowing the world to live and respond to player actions. Such complex game worlds could be a significant advance, but they are also constrained to a limited set of game types. Games like Xbox 360's flagships Gears of War and Forza Motorsport have little need for smart NPC AI of this kind.

So what other options are there for cloud computing? In this regard, perhaps it's a bit disconcerting that Microsoft seems stuck for ideas. In the Xbox One architecture panel, Boyd Multerer, director of development, had this to say: "You can start to have bigger worlds. You can start to have lots of players together, but you can also maybe take some of the things that are normally done locally, push them out, and... you know, this generation is about embracing change and growth while still maintaining the predictability the game developer needs."

Marc Whitten, chief product officer, said in a post-event interview: "We can take advantage of that cloud power to create experiences and really pair with the power of the box. So game creators can use raw cloud computation to create bigger multiplayer matches or bigger worlds, more physics, all those things. I think you're going to see unparalleled creativity when you match that power of the new Xbox Live with the power of the new Xbox."

Neither is presenting a clear vision for cloud compute opportunities, while both mention increased numbers of players. We also have recognition of the importance of the local hardware. Nick Baker, console architect, commented on the usefulness of AMD's multitasking GCN architecture, telling us, "The GPU as well is multitasking so you can run several rendering and compute threads so all of the cloud effects and AI and collision detection on the GPU in parallel while you're doing the rendering..."

That's something of a mixed message, although Microsoft does say it is treading new ground here, paving the way into new territory, so it behoves them to provide conventional solutions for games. If we attempt a little more creative thinking than Microsoft, there are a couple of options for the cloud that the firm doesn't seem to have hit upon. Procedural content creation, a big buzz-phrase waved around at the start of this generation but which never got anywhere, could be performed online to generate textures and models, filling a city with varied people and buildings.

Allegorithmic's Substance Engine, available for PS4 and (we would presume) Xbox One, creates textures algorithmically using local processing power. The same technology could be used in the cloud to create assets, such as maturing people and environments and creating worlds that age. Given Peter Molyneux's years-old aspirations for such lifetime world persistence, it seems a good fit to take Fable to the cloud and run it on servers that grow your world, although the remarkable amount of processing power in the consoles (a teraflop is still a lot of processing power) could well be enough for this in itself seeing as such changes are so slow and could be run in parallel with the rest of the game. We can also imagine procedurally generated speech. Maybe with suitable research, servers could use highly demanding speech simulation to create realistic NPC chatter that adapts to the game, instead of endlessly recycled prerecorded sound bites.

Another possible use disregards the processing power and focuses on the virtually limitless storage of the cloud. We already have simple games using the whole world as a gameplay space, such as GeoGuessr using Google Earth. As mapping technology advances, we can expect far more detailed representations in future. Project Gotham Racing was limited to the environments captured by Bizarre that could fit on the disc. Imagine if the data of Google Earth and the modelling techniques of Bizarre could come together in the next Forza, so you could pick any location in the world and start driving. With cloud storage breaking through the limits of disc-based game storage, it's certainly possible. Euclideon's Unlimited Detail tech hints at a future of 3D scanned worlds, and one can't help but wonder how long it'll be before Google takes out Kinect-style 3D cameras to scan the world in all dimensions.

Economic considerations: why multiplayer makes sense

Beyond the technical considerations of what is and isn't possible due to bandwidth and latency constraints, there are of course economic considerations. Running a server to provide a solo player game is extremely expensive. It makes far more sense to use the servers to run multiplayer games, and that was alluded to by the Microsoft spokespeople all suggesting enhanced multiplayer experiences. In this respect, Microsoft's vision doesn't seem far removed from the age-old ideas of MMOs and server-based gaming. A server running a living, breathing Elder Scrolls world with cloud-computed lighting and AI would only make economic sense as a multiplayer experience, at which point it becomes an Elder Scrolls MMO.

However, this implies that any game running on a server with the console as a client can add the server's computational power to the console when really they are two distinct entities working together. When you play Battlefield 3 on your Xbox 360, do you have the equivalent power of a dozen Xbox 360s because the server is notionally that powerful? Microsoft's claims seem pretty wishy-washy against such a comparison, and without the explicit clarification that they are literally installing four teraflops of server power for each and every Xbox One bought, the claims of that power target can only be considered bogus PR hand-waving to try and distract from the performance deficit with their rival.

"Imagine if the data of Google Earth and the modelling techniques of Project Gotham could come together in the next Forza, so you could pick any location in the world and start driving."

Furthermore, there's the issue of how game developers are supposed to target this cloud experience. What if the internet is not available, or running slowly? Games players may find constant high-use data streaming sees their ISP move them onto slower, less reliable connections. And this doesn't even factor in how bandwidth may be utilised in the home - what happens to your Xbox One cloud title if someone else in the home is streaming Super HD Netflix video?

Matt Booty, talking to Ars Technica, addressed this in a less than convincing way, with the same lack of certainty as Microsoft's remarks about how the cloud could be used.

"In the event of a drop-out - and we all know that internet can occasionally drop out, and I do say occasionally because these days it seems we depend on internet as much as we depend on electricity - the game is going to have to intelligently handle that," he suggested, somewhat weakly.

Booty urged us to "stay tuned" for more on precisely how that intelligent handling would work, stressing that "it's new technology and a new frontier for game design, and we're going to see that evolve the way we've seen other technology evolve".

Of course, dealing with the internet's fickle nature is nothing new and plenty of technologies have been developed for online games, but the demands of cloud-based processing on large problems will present new hazards to navigate. Developers may need to have backup systems that aren't reliant on an internet connection, such as a conventional lighting system. Hypothetically, gamers run the risk of artifacts like Rage-style texture blur-in or laggy multiplayer-style NPC updates where data is lost and the game has to use whatever it has available for the lighting until the cloud service catches up.
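One way to picture that fallback logic is a function that picks the best lighting data available at any moment. Everything here is hypothetical (the `CloudLighting` record, the 60-second freshness window, the string labels); it is a sketch of the decision a game would face, not a description of anything Microsoft has announced.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class CloudLighting:
    data: str             # stand-in for a downloaded lighting dataset
    received_at_s: float  # game-clock time the data arrived

def choose_lighting(latest: Optional[CloudLighting], now_s: float,
                    max_age_s: float = 60.0) -> str:
    """Pick a lighting source: fresh cloud data, stale cloud data, or local."""
    if latest is None:
        # Nothing ever arrived: use the conventional local lighting system.
        return "local-fallback"
    if now_s - latest.received_at_s > max_age_s:
        # The cloud has fallen behind: keep using what we have, flagged stale.
        return f"stale:{latest.data}"
    return latest.data

print(choose_lighting(None, now_s=10.0))
print(choose_lighting(CloudLighting("dusk-lighting", 0.0), now_s=30.0))
print(choose_lighting(CloudLighting("dusk-lighting", 0.0), now_s=300.0))
```

The stale branch is where artifacts would creep in: the game keeps rendering with old data until the service catches up.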

For cross-platform titles running on PS4 and PC, there's the question of the economic sense in developing Xbox One specific cloud-based augmentations rather than using the same cross-platform algorithms toned down for the Xbox's less powerful GPU. This places Microsoft in a conundrum - if the cloud is unavailable to its rivals, it is unlikely to be used in third-party games and the Xbox One is unlikely to benefit outside of a few exclusives. If Microsoft does extend its cloud service to other platforms, it loses the computing advantage claimed for Xbox One.

What's obvious at this point is that the concept of cloud computing looks uncertain and unlikely, and Microsoft needs to prove its claims with actual software. Yet based on what we've been told, the firm itself isn't sure of what uses to put it to, while the limitations of latency and bandwidth severely impede the benefits of all that computing power. Frequent references to Live and multiplayer gaming suggest a less exciting, though certainly valuable, use for Microsoft's new servers in providing better, conventional, multiplayer experiences. More players, adaptive achievements and intelligent worlds all sound great in theory, but we're certainly not seeing the notional results of a four-fold increase in Xbox One's processing power.

Microsoft needs to prove its position with strong ideas and practical demonstrations. Until then, it's perhaps best not to get too carried away with the idea of a super-powered console, and there's very little evidence that Sony needs to be worried about its PS4 specs advantage being comprehensively wiped out by "the power of the cloud".